I screwed up big time and lost my ZFS pool! How can I retrieve it? I need help!

I’ve been using PVE v8 for a few months at home, mainly for Windows & macOS VMs and for testing new systems, including Plex & TrueNAS Core. It was going great, and I recently added Proxmox Backup Server to back up the VMs/LXC containers, but I couldn’t get TrueNAS to back up: too much data, too slow, various problems. Whatever I tried, it didn’t work!

For a while I’ve been researching how to back up TrueNAS, and found loads of videos on using a ZFS pool on PVE as a NAS. I don’t use or need anything else TrueNAS does; I just need the basics of a NAS & file server.

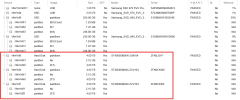

A few weeks back, I exported the ZFS pool from TrueNAS Core

and imported it into PVE just fine; everything was present & accessible:

```
root@pve:/HomeStorage# zpool status
  pool: HomeStorage
 state: ONLINE
config:

        NAME          STATE     READ WRITE CKSUM
        HomeStorage   ONLINE       0     0     0
          raidz1-0    ONLINE       0     0     0
            sde2      ONLINE       0     0     0
            sdd2      ONLINE       0     0     0
            sdc2      ONLINE       0     0     0

errors: No known data errors
root@pve:/HomeStorage# zpool list
NAME          SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
HomeStorage  10.9T  3.87T  7.04T        -         -     0%    35%  1.00x    ONLINE  -
```

Then I experimented with Turnkey FS in an LXC, but couldn’t access the SMB shares & didn’t really like it, so I switched to Cockpit in an LXC, which seemed easier to set up.

All seemed good: I set up the shares as before, but the Windows/macOS VMs couldn’t access any files or folders…

I spent a couple of weeks looking at ACL issues, trying to strip/reset them to get things working on PVE/Cockpit. Eventually I managed to access the shares, but couldn’t read/execute the existing files, although I could add/write new ones when testing.

After reading forums for days trying to resolve the ACLs, I stupidly

This is how it all went horribly wrong. I messed up, I know.

Damn, I wish I were still back at the ACL problem, but I’m not: I can’t access the ZFS pool at all, it doesn’t exist & things seem much worse!!!

So I reconnected the 3 disks to TrueNAS Core & tried to re-import the ZFS pool: no pool available.

I tried to restore the TrueNAS config (which still had the pool), but it says the pool is offline. I suspect TrueNAS couldn’t see any pool info on the disks, and I realised the disk structures had changed when I previously imported the pool into PVE.

I assume the ZFS pool header info has gone from the 3 disks, but they still have their ZFS partitions.

As it last worked in PVE, I removed the disks from TrueNAS and went back to importing the pool into PVE, but again no pool available…

This is where I’m at now: stuck with no ZFS pool appearing on TrueNAS or the PVE host. I assume the data/files etc. are still on the 3 drives, as they haven’t been used for anything else since.

```
root@pve:/# lsblk
sdd      8:48   0  3.6T  0 disk
├─sdd1   8:49   0    2G  0 part
└─sdd2   8:50   0  3.6T  0 part
sde      8:64   0  3.6T  0 disk
├─sde1   8:65   0    2G  0 part
└─sde2   8:66   0  3.6T  0 part
sdf      8:80   0  3.6T  0 disk
├─sdf1   8:81   0    2G  0 part
└─sdf2   8:82   0  3.6T  0 part
root@pve:~# lsblk -f
sdd
├─sdd1
└─sdd2  zfs_me 5000  HomeStorage 4042865439429627384
sde
├─sde1
└─sde2  zfs_me 5000  HomeStorage 4042865439429627384
sdf
├─sdf1
└─sdf2  zfs_me 5000  HomeStorage 4042865439429627384
root@pve:~# blkid
/dev/sdf2: LABEL="HomeStorage" UUID="4042865439429627384" UUID_SUB="15582875145222524775" BLOCK_SIZE="4096" TYPE="zfs_member" PARTUUID="70fc559f-460f-11ef-bfbf-bc24115dd704"
/dev/sdd2: LABEL="HomeStorage" UUID="4042865439429627384" UUID_SUB="4849601129984592392" BLOCK_SIZE="4096" TYPE="zfs_member" PARTUUID="70efda39-460f-11ef-bfbf-bc24115dd704"
/dev/sde2: LABEL="HomeStorage" UUID="4042865439429627384" UUID_SUB="6251018130718913739" BLOCK_SIZE="4096" TYPE="zfs_member" PARTUUID="70e42c65-460f-11ef-bfbf-bc24115dd704"
root@pve:~# zpool import -f -d /dev/sdd2 -f -d /dev/sde2 -f -d /dev/sde2 -o readonly=on HomeStorage
cannot import 'HomeStorage': no such pool available
root@pve:~# zdb -l /dev/sdd2
------------------------------------
LABEL 0
------------------------------------
    version: 5000
    name: 'HomeStorage'
    state: 2
    txg: 236741
    pool_guid: 4042865439429627384
    errata: 0
    hostid: 1691883302
    hostname: 'pve'
    top_guid: 15071918969507238202
    guid: 4849601129984592392
    vdev_children: 1
    vdev_tree:
        type: 'raidz'
        id: 0
        guid: 15071918969507238202
        nparity: 1
        metaslab_array: 65
        metaslab_shift: 34
        ashift: 12
        asize: 11995904212992
        is_log: 0
        create_txg: 4
        children[0]:
            type: 'disk'
            id: 0
            guid: 15582875145222524775
            path: '/dev/sdf2'
            create_txg: 4
        children[1]:
            type: 'disk'
            id: 1
            guid: 6251018130718913739
            path: '/dev/sde2'
            create_txg: 4
        children[2]:
            type: 'disk'
            id: 2
            guid: 4849601129984592392
            path: '/dev/sdd2'
            create_txg: 4
    features_for_read:
        com.delphix:hole_birth
        com.delphix:embedded_data
        com.klarasystems:vdev_zaps_v2
    labels = 0 1 2 3
root@pve:~# zdb -l /dev/sde2
------------------------------------
LABEL 0
------------------------------------
    version: 5000
    name: 'HomeStorage'
    state: 2
    txg: 236741
    pool_guid: 4042865439429627384
    errata: 0
    hostid: 1691883302
    hostname: 'pve'
    top_guid: 15071918969507238202
    guid: 6251018130718913739
    vdev_children: 1
    vdev_tree:
        type: 'raidz'
        id: 0
        guid: 15071918969507238202
        nparity: 1
        metaslab_array: 65
        metaslab_shift: 34
        ashift: 12
        asize: 11995904212992
        is_log: 0
        create_txg: 4
        children[0]:
            type: 'disk'
            id: 0
            guid: 15582875145222524775
            path: '/dev/sdf2'
            create_txg: 4
        children[1]:
            type: 'disk'
            id: 1
            guid: 6251018130718913739
            path: '/dev/sde2'
            create_txg: 4
        children[2]:
            type: 'disk'
            id: 2
            guid: 4849601129984592392
            path: '/dev/sdd2'
            create_txg: 4
    features_for_read:
        com.delphix:hole_birth
        com.delphix:embedded_data
        com.klarasystems:vdev_zaps_v2
    labels = 0 1 2 3
root@pve:~# zdb -l /dev/sdf2
------------------------------------
LABEL 0
------------------------------------
    version: 5000
    name: 'HomeStorage'
    state: 2
    txg: 17455
    pool_guid: 4474347643534269129
    errata: 0
    hostid: 1691883302
    hostname: 'pve'
    top_guid: 9355695702525660699
    guid: 9355695702525660699
    vdev_children: 1
    vdev_tree:
        type: 'disk'
        id: 0
        guid: 9355695702525660699
        path: '/dev/sdf2'
        whole_disk: 0
        metaslab_array: 256
        metaslab_shift: 34
        ashift: 12
        asize: 3998634737664
        is_log: 0
        create_txg: 4
    features_for_read:
        com.delphix:hole_birth
        com.delphix:embedded_data
        com.klarasystems:vdev_zaps_v2
    labels = 0 1 2 3
```

Even though the pool still appears to be on the 3 drives, I cannot import or recreate it:

```
root@pve:~# zdb -b -e -p /dev/sdd2 HomeStorage

Traversing all blocks to verify nothing leaked ...

loading concrete vdev 0, metaslab 697 of 698 ...
3.87T completed (12695MB/s) estimated time remaining: 0hr 00min 00sec
        No leaks (block sum matches space maps exactly)

        bp count:              23373824
        ganged count:                 0
        bp logical:       3036445863424      avg: 129907
        bp physical:      2826388418048      avg: 120921     compression:   1.07
        bp allocated:     4252095021056      avg: 181916     compression:   0.71
        bp deduped:                   0    ref>1:      0   deduplication:   1.00
        bp cloned:                    0    count:      0
        Normal class:     4252087648256     used: 35.51%
        Embedded log class            0     used:  0.00%

        additional, non-pointer bps of type 0:      17990
        Dittoed blocks on same vdev: 166251
root@pve:~# zdb -b -e -p /dev/sde2 HomeStorage

Traversing all blocks to verify nothing leaked ...

loading concrete vdev 0, metaslab 697 of 698 ...
3.87T completed (12728MB/s) estimated time remaining: 0hr 00min 00sec
        No leaks (block sum matches space maps exactly)

        bp count:              23373824
        ganged count:                 0
        bp logical:       3036445863424      avg: 129907
        bp physical:      2826388418048      avg: 120921     compression:   1.07
        bp allocated:     4252095021056      avg: 181916     compression:   0.71
        bp deduped:                   0    ref>1:      0   deduplication:   1.00
        bp cloned:                    0    count:      0
        Normal class:     4252087648256     used: 35.51%
        Embedded log class            0     used:  0.00%

        additional, non-pointer bps of type 0:      17990
        Dittoed blocks on same vdev: 166251
root@pve:~# zdb -b -e -p /dev/sdf2 HomeStorage

Traversing all blocks to verify nothing leaked ...

loading concrete vdev 0, metaslab 231 of 232 ...
        No leaks (block sum matches space maps exactly)

        bp count:                    79
        ganged count:                 0
        bp logical:             4077568      avg:  51614
        bp physical:             201728      avg:   2553     compression:  20.21
        bp allocated:            614400      avg:   7777     compression:   6.64
        bp deduped:                   0    ref>1:      0   deduplication:   1.00
        bp cloned:                    0    count:      0
        Normal class:            294912     used:  0.00%
        Embedded log class            0     used:  0.00%

        additional, non-pointer bps of type 0:         26
        Dittoed blocks on same vdev: 53
```

It would appear that the last drive, sdf2, is not in sync with the other 2 drives, which appear to hold a fair amount of data (as expected) for the pool HomeStorage. Its label shows a different pool_guid (4474347643534269129), a single-disk vdev_tree and txg 17455, and zdb -b finds only 79 blocks on it, whereas the labels on sdd2 and sde2 still describe the 3-disk raidz1 pool 4042865439429627384 with about 3.87T allocated???
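The out-of-sync suspicion above can be checked from three fields in each `zdb -l` label: `pool_guid`, `txg` and the top-level `vdev_tree` type. A minimal sketch of a helper that condenses each label to one line, so the partitions can be compared side by side, is below. The function name `label_summary` is hypothetical (it is not part of ZFS); it assumes the indented `key: value` layout shown in the output above and reads only the first label `zdb -l` prints. It only parses text, so it never writes to the disks.

```shell
#!/usr/bin/env bash
# label_summary: hypothetical helper, NOT a ZFS tool.
# Reads the text printed by `zdb -l <partition>` on stdin and prints one line:
#   <pool_guid> <txg> <top-level vdev type>
# Assumes the "key: value" layout of zdb -l output; only the first
# occurrence of each key is used (i.e. the first label printed).
label_summary() {
  awk '
    # strip leading whitespace so indentation depth does not matter
    { sub(/^[ \t]+/, "") }
    /^pool_guid:/ && guid == ""  { guid = $2 }
    /^txg:/       && txg == ""   { txg = $2 }
    # the first type: line belongs to vdev_tree (raidz, mirror, disk, ...)
    /^type:/      && vtype == "" { vtype = $2; gsub(/[^a-z0-9_]/, "", vtype) }
    END { print guid, txg, vtype }
  '
}
```

It could be run against each partition with `for p in /dev/sdd2 /dev/sde2 /dev/sdf2; do printf '%s: ' "$p"; zdb -l "$p" | label_summary; done`. Given the labels posted above, sdd2 and sde2 should both summarise to `4042865439429627384 236741 raidz`, while sdf2 gives `4474347643534269129 17455 disk`, i.e. the label on sdf2 describes a different, single-disk pool that is also named HomeStorage.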

```
root@pve:~# zpool list
no pools available
root@pve:~# zpool status
no pools available
root@pve:~# zfs list
no datasets available
root@pve:~# zpool import
no pools available to import
```

Are the datasets/files still there or not?

How can I retrieve them, even read-only, to copy them elsewhere?

What’s a safe way to recover: attempt it in PVE or TrueNAS, via a VM, or with some disk recovery software?

I accept I screwed this up badly.

Most of the stuff isn’t important and some of it is backed up & safe, BUT some of it is personal stuff that I don’t want to lose, so I need help getting it back!

Is there any way to recover the files off the 3 drives, which seem intact even though the ZFS pool isn’t available? HELP PLEASE
