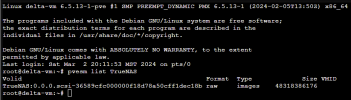

I've been trying to use one of the Proxmox Terraform providers to provision a VM whose disk is backed directly by an iSCSI LUN, without success. The provider currently can't do this, so I started looking at the REST API to see how it might be done.

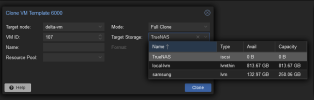

Perhaps I am not looking in the right places, but I can't find a straightforward way of doing this. What the UI does is basically swap out the Disk Size field for a Disk Image combo box once an iSCSI storage is selected (see screenshots).

I've summarised my investigation here. Is there a way to do this via the REST API?
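For reference, here is a rough, untested sketch of what I would expect the API equivalent of the UI flow to look like. It assumes the Disk Image combo is populated from the storage content listing (`GET /nodes/{node}/storage/{storage}/content`) and that the chosen LUN's `volid` can be passed as the disk value (e.g. `scsi0`) to the VM config endpoint. The node name, storage name, and volume ID below are hypothetical placeholders, and the helpers only build the request pieces without calling the API.

```python
from urllib.parse import quote

API_PREFIX = "/api2/json"  # standard Proxmox VE JSON API prefix


def content_url(base_url: str, node: str, storage: str) -> str:
    """URL that lists the volumes (LUNs) exposed by a storage on a node."""
    return f"{base_url}{API_PREFIX}/nodes/{quote(node)}/storage/{quote(storage)}/content"


def vm_config_url(base_url: str, node: str, vmid: int) -> str:
    """URL of the VM config endpoint where disk options are set."""
    return f"{base_url}{API_PREFIX}/nodes/{quote(node)}/qemu/{vmid}/config"


def lun_volids(content_items: list) -> list:
    """Extract the volume IDs from a decoded content-listing response."""
    return [item["volid"] for item in content_items if "volid" in item]


def disk_params(slot: str, volid: str) -> dict:
    """Form parameters attaching an existing volume to a disk slot.

    Assumption: passing the LUN's volid directly (no size) mirrors what
    the UI sends when a Disk Image is picked on an iSCSI storage.
    """
    return {slot: volid}


if __name__ == "__main__":
    base = "https://pve.example:8006"  # hypothetical host
    # Hypothetical response item; real volids come from the content listing.
    items = [{"volid": "san:0.0.0.scsi-EXAMPLE", "content": "images"}]
    print(content_url(base, "pve1", "san"))
    print(vm_config_url(base, "pve1", 100))
    print(disk_params("scsi0", lun_volids(items)[0]))
```

If that assumption holds, a Terraform provider would only need to accept an existing volume ID for the disk instead of a storage name plus a size.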

EDIT: I started experimenting with possible workarounds, but what I've seen doesn't look great. For example, cloning a VM template (cloud-init) from local storage to an iSCSI storage seems like a dead end in the UI as well. You can select the new target storage, but how does one select a LUN?

