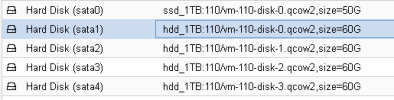

I have created a VM with 5 disks; 4 of them are the same size.

In the Proxmox VM hardware view they show up like this:

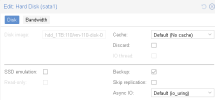

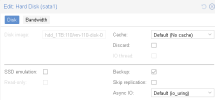

The details look like this (highlighted disk):

On my VM, running ls -l /dev/disk/by-id/ gives this:


Code:

root@openmediavault:~# ls -l /dev/disk/by-id/

total 0

lrwxrwxrwx 1 root root 9 Apr 26 16:38 ata-QEMU_DVD-ROM_QM00003 -> ../../sr0

lrwxrwxrwx 1 root root 9 Apr 26 16:38 ata-QEMU_HARDDISK_QM00005 -> ../../sda

lrwxrwxrwx 1 root root 10 Apr 26 16:38 ata-QEMU_HARDDISK_QM00005-part1 -> ../../sda1

lrwxrwxrwx 1 root root 10 Apr 26 16:38 ata-QEMU_HARDDISK_QM00005-part2 -> ../../sda2

lrwxrwxrwx 1 root root 10 Apr 26 16:38 ata-QEMU_HARDDISK_QM00005-part5 -> ../../sda5

lrwxrwxrwx 1 root root 9 Apr 26 16:38 ata-QEMU_HARDDISK_QM00007 -> ../../sdb

lrwxrwxrwx 1 root root 10 Apr 26 16:38 ata-QEMU_HARDDISK_QM00007-part1 -> ../../sdb1

lrwxrwxrwx 1 root root 10 Apr 26 16:38 ata-QEMU_HARDDISK_QM00007-part9 -> ../../sdb9

lrwxrwxrwx 1 root root 9 Apr 26 16:38 ata-QEMU_HARDDISK_QM00009 -> ../../sdc

lrwxrwxrwx 1 root root 10 Apr 26 16:38 ata-QEMU_HARDDISK_QM00009-part1 -> ../../sdc1

lrwxrwxrwx 1 root root 10 Apr 26 16:38 ata-QEMU_HARDDISK_QM00009-part9 -> ../../sdc9

lrwxrwxrwx 1 root root 9 Apr 26 16:38 ata-QEMU_HARDDISK_QM00011 -> ../../sdd

lrwxrwxrwx 1 root root 10 Apr 26 16:38 ata-QEMU_HARDDISK_QM00011-part1 -> ../../sdd1

lrwxrwxrwx 1 root root 10 Apr 26 16:38 ata-QEMU_HARDDISK_QM00011-part9 -> ../../sdd9

lrwxrwxrwx 1 root root 9 Apr 26 16:38 ata-QEMU_HARDDISK_QM00013 -> ../../sde

lrwxrwxrwx 1 root root 10 Apr 26 16:38 ata-QEMU_HARDDISK_QM00013-part1 -> ../../sde1

lrwxrwxrwx 1 root root 10 Apr 26 16:38 ata-QEMU_HARDDISK_QM00013-part9 -> ../../sde9

But I can't see a way to map QEMU_HARDDISK_QM00005 -> ../../sda to a specific hard disk as defined in Proxmox.

How can I do this?
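For illustration, here is a minimal Python sketch that reduces a listing like the one above to serial-to-device pairs for whole disks. It only covers the guest side and does not say which Proxmox disk each serial belongs to. The function name `whole_disk_links` and the shortened `LISTING` sample are hypothetical, chosen just for this example.

```python
import re

# A shortened copy of the listing from this post (sample data only).
LISTING = """\
total 0
lrwxrwxrwx 1 root root 9 Apr 26 16:38 ata-QEMU_DVD-ROM_QM00003 -> ../../sr0
lrwxrwxrwx 1 root root 9 Apr 26 16:38 ata-QEMU_HARDDISK_QM00005 -> ../../sda
lrwxrwxrwx 1 root root 10 Apr 26 16:38 ata-QEMU_HARDDISK_QM00005-part1 -> ../../sda1
lrwxrwxrwx 1 root root 9 Apr 26 16:38 ata-QEMU_HARDDISK_QM00007 -> ../../sdb
lrwxrwxrwx 1 root root 10 Apr 26 16:38 ata-QEMU_HARDDISK_QM00007-part9 -> ../../sdb9
"""

# Matches "<link name> -> ../../<device>" at the end of an `ls -l` line.
LINK_RE = re.compile(r"(\S+)\s+->\s+\.\./\.\./(\S+)\s*$")


def whole_disk_links(listing):
    """Return {serial: device path} for whole-disk links, skipping partitions."""
    mapping = {}
    for line in listing.splitlines():
        match = LINK_RE.search(line)
        if not match:
            continue  # e.g. the "total 0" line
        name, dev = match.groups()
        if "-part" in name:
            continue  # partition link, not a whole disk
        # The serial is the part after the last underscore, e.g. "QM00005".
        serial = name.rsplit("_", 1)[-1]
        mapping[serial] = "/dev/" + dev
    return mapping


if __name__ == "__main__":
    for serial, dev in whole_disk_links(LISTING).items():
        print(serial, dev)
```

This gives one serial per device, but the serials themselves (QM00005, QM00007, ...) still carry no obvious link to the disk names shown in the Proxmox hardware view, which is the missing piece.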

Thanks

-GF