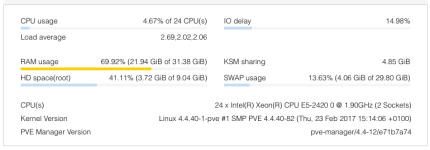

I have 32 GB of memory, which I split between two CTs:

1. 2 GB of RAM - CentOS 6 for HAProxy and nginx.

2. 29 GB of RAM - CentOS 7 for the Percona Cluster database.

I noticed heavy swapping on the 29 GB CT and decided to stop it. Then I removed it from the system completely.

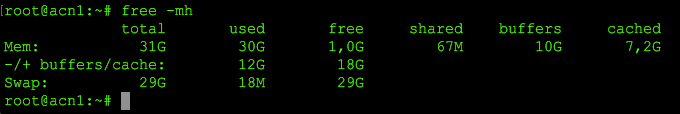

When I SSH'ed into the Proxmox host, it showed that 12 GB of RAM was still in use somewhere.

That shouldn't be possible: the only CT left has a 2 GB RAM limit and probably uses only about 700 MB of it.

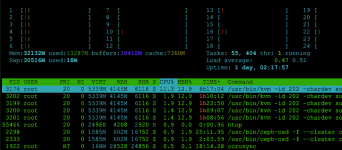

I ran a bash script to add up the RAM usage of all processes, and the total came to 833 MB.

So where did the other 11 GB go? Is there a memory leak?


Output of `free -mh` on the Proxmox host:

```
root@pve:~# free -mh
             total       used       free     shared    buffers     cached
Mem:           31G        12G        18G        91M        36M       421M
-/+ buffers/cache:        12G        19G
Swap:         8.0G       842M       7.2G
```
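As a cross-check on the `free -mh` numbers, here is a minimal bash sketch that recomputes the "-/+ buffers/cache" used value straight from `/proc/meminfo`. It assumes the older procps formula, used = MemTotal - MemFree - Buffers - Cached; newer procps releases calculate "used" differently. The function name `buffers_cache_used_mib` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: recompute the "-/+ buffers/cache" used figure printed by older
# procps versions of free(1), using /proc/meminfo (values there are in kB).
# Assumption: used = MemTotal - MemFree - Buffers - Cached.
buffers_cache_used_mib() {
    local meminfo="${1:-/proc/meminfo}"   # optional path; defaults to the live file
    awk '
        /^MemTotal:/ { total   = $2 }
        /^MemFree:/  { free    = $2 }
        /^Buffers:/  { buffers = $2 }
        /^Cached:/   { cached  = $2 }   # anchored, so SwapCached is not matched
        END { printf "%d\n", (total - free - buffers - cached) / 1024 }
    ' "$meminfo"
}

# Print the figure for the current host, if /proc/meminfo is available.
if [[ -r /proc/meminfo ]]; then
    buffers_cache_used_mib
fi
```

On this host the result should come out close to the 12G in the `-/+ buffers/cache` row; the optional path argument makes it easy to run against a saved copy of `/proc/meminfo`.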

The script, with its output (in MB):

```
# Sum the RSS column (field 6, in kB) of every process and print the total in MB
ps aux | awk '{sum+=$6} END {print sum / 1024}'
833.605
```
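The `ps aux | awk` one-liner adds up the RSS column (field 6, in kB). A roughly equivalent bash sketch reads `VmRSS` from each `/proc/<pid>/status` instead; `sum_rss_mib` is a hypothetical name, and its optional argument exists only so it can be pointed at a test directory. RSS counts shared pages once for every process that maps them and leaves out memory the kernel uses for itself, so treat the total as an approximation.

```shell
#!/usr/bin/env bash
# Sketch: total the resident set size (VmRSS, in kB) of every process,
# essentially the same per-process figure ps shows in its RSS column.
# Caveat: shared pages are counted once per process mapping them, and
# memory used by the kernel itself is not included.
sum_rss_mib() {
    local proc_root="${1:-/proc}"   # optional root; defaults to the live /proc
    local f
    for f in "$proc_root"/[0-9]*/status; do
        # A process may exit between globbing and reading; skip it quietly.
        [[ -r "$f" ]] && cat "$f" 2>/dev/null
    done | awk '/^VmRSS:/ { sum += $2 } END { printf "%.3f\n", sum / 1024 }'
    # Kernel threads have no VmRSS line, so they contribute nothing.
}

# Print the total for the current host, if /proc is available.
if [[ -d /proc ]]; then
    sum_rss_mib
fi
```

Subtracting its output from the used figure reported by `free` gives the unaccounted gap, roughly 12 GB - 833 MB ≈ 11 GB here.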
