Hello,

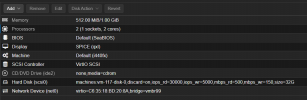


For a long time (personal home lab, machine from 2016; I don't know how long the problem has existed, I just ignored it until now), I have had the problem that a single VM on my system can drive the load average above 40 and make other VMs crash, just with disk operations.

I used fio to test this:

```bash
#!/bin/bash

# commands stolen from https://www.thomas-krenn.com/de/wiki/Fio_Grundlagen#Beispiele

# run from the script's directory, so the test file is created on the disk under test
cd "$(dirname "$0")" || exit 1

# note: if this file does not exist yet, fio also needs --size=... to create it
FILE=testfio

echo "#-> IOPS write"
fio --rw=randwrite --name=IOPS-write --bs=4k --direct=1 --filename="$FILE" --numjobs=4 --ioengine=libaio --iodepth=32 --refill_buffers --group_reporting --runtime=60 --time_based

echo "#-> IOPS read"
fio --rw=randread --name=IOPS-read --bs=4k --direct=1 --filename="$FILE" --numjobs=4 --ioengine=libaio --iodepth=32 --refill_buffers --group_reporting --runtime=60 --time_based

echo "#-> BW write"
fio --rw=write --name=BW-write --bs=1024k --direct=1 --filename="$FILE" --numjobs=4 --ioengine=libaio --iodepth=32 --refill_buffers --group_reporting --runtime=60 --time_based

echo "#-> BW read"
fio --rw=read --name=BW-read --bs=1024k --direct=1 --filename="$FILE" --numjobs=4 --ioengine=libaio --iodepth=32 --refill_buffers --group_reporting --runtime=60 --time_based
```

With the following results inside of a KVM guest:

```
#-> IOPS write
write: IOPS=8441, BW=32.0MiB/s (34.6MB/s)(1982MiB/60117msec); 0 zone resets
bw ( KiB/s): min= 4744, max=66656, per=100.00%, avg=33880.76, stdev=4181.09, samples=477
iops : min= 1186, max=16664, avg=8470.16, stdev=1045.28, samples=477

#-> IOPS read
read: IOPS=47.0k, BW=184MiB/s (193MB/s)(10.8GiB/60006msec)
bw ( KiB/s): min=14864, max=493763, per=99.33%, avg=186782.31, stdev=37957.10, samples=476
iops : min= 3716, max=123440, avg=46695.52, stdev=9489.25, samples=476

#-> BW write
write: IOPS=258, BW=258MiB/s (271MB/s)(15.4GiB/60966msec); 0 zone resets
bw ( KiB/s): min= 8177, max=4812813, per=100.00%, avg=461513.17, stdev=258221.06, samples=278
iops : min= 5, max= 4699, avg=450.52, stdev=252.13, samples=278

#-> BW read
read: IOPS=1748, BW=1749MiB/s (1834MB/s)(103GiB/60021msec)
bw ( MiB/s): min= 32, max= 6756, per=98.80%, avg=1727.89, stdev=563.99, samples=476
iops : min= 32, max= 6755, avg=1727.36, stdev=563.81, samples=476
```

On this test, the load avg explodes to 42.
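On Linux the load average counts tasks in uninterruptible sleep (state `D`, typically waiting for disk I/O) in addition to runnable ones, so a load of 42 on this 8-CPU host during an I/O test most likely reflects tasks blocked on storage rather than CPU demand. A minimal sketch for watching both numbers on the host while fio runs; the function name and its arguments are my own (hypothetical, not a Proxmox tool), and it only reads `/proc`:

```bash
#!/bin/bash
# Hypothetical helper (not part of Proxmox): print the 1-minute load average
# next to the number of threads in uninterruptible sleep (state D).
# Usage: sample_io_load [interval_seconds] [count]
sample_io_load() {
    local interval=${1:-5} count=${2:-12} i load dstate
    for ((i = 0; i < count; i++)); do
        # the first field of /proc/loadavg is the 1-minute load average
        read -r load _ < /proc/loadavg
        # every thread has a status file with a line like "State:	D (disk sleep)";
        # threads may exit while we read, so errors from cat are discarded
        dstate=$(cat /proc/[0-9]*/task/[0-9]*/status 2>/dev/null |
            awk '$1 == "State:" && $2 == "D" { n++ } END { print n + 0 }')
        printf '%s load1=%s D-state=%s\n' "$(date +%T)" "$load" "$dstate"
        # no pause after the last sample
        if ((i + 1 < count)); then sleep "$interval"; fi
    done
}
```

For example, `sample_io_load 5 24` in a second shell on the host takes 24 samples 5 seconds apart. If the `D-state` count climbs together with the load, the load is I/O wait, not CPU.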

And the following results on a ZFS filesystem on the Proxmox host:

```
#-> IOPS write
write: IOPS=276, BW=1108KiB/s (1135kB/s)(64.0MiB/60034msec); 0 zone resets
bw ( KiB/s): min= 48, max= 2208, per=100.00%, avg=1109.63, stdev=135.10, samples=473
iops : min= 12, max= 552, avg=277.41, stdev=33.78, samples=473

#-> IOPS read
read: IOPS=904, BW=3620KiB/s (3707kB/s)(212MiB/60008msec)
bw ( KiB/s): min= 536, max=132408, per=99.78%, avg=3612.91, stdev=2972.37, samples=476
iops : min= 134, max=33102, avg=903.23, stdev=743.09, samples=476

#-> BW write
write: IOPS=677, BW=677MiB/s (710MB/s)(39.7GiB/60007msec); 0 zone resets
bw ( KiB/s): min=368640, max=6230016, per=100.00%, avg=693682.65, stdev=225160.16, samples=476
iops : min= 360, max= 6084, avg=677.40, stdev=219.88, samples=476

#-> BW read
read: IOPS=8674, BW=8674MiB/s (9095MB/s)(508GiB/60001msec)
bw ( MiB/s): min= 416, max=14756, per=100.00%, avg=8683.59, stdev=611.46, samples=476
iops : min= 416, max=14756, avg=8683.61, stdev=611.48, samples=476
```

On this test, the load avg has a maximum of just 8.5.
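To compare the guest and host runs side by side, the summary lines can be pulled out of saved fio output. A minimal sketch, assuming the output of each run (including the `#->` headers the script prints) was redirected to a file; the function name and the file names are hypothetical:

```bash
#!/bin/bash
# Hypothetical helper: reduce saved fio output to one line per test,
# "<test name>: <IOPS> IOPS, <bandwidth>". Reads the given files or stdin.
fio_summary() {
    awk '
        # remember the current test header, e.g. "#-> IOPS write"
        /^#->/ { sub(/^#-> */, ""); name = $0; next }
        # summary lines look like "write: IOPS=8441, BW=32.0MiB/s (34.6MB/s)..."
        $1 == "read:" || $1 == "write:" {
            iops = $2; sub(/^IOPS=/, "", iops); sub(/,$/, "", iops)
            bw = $3;   sub(/^BW=/, "", bw)
            print name ": " iops " IOPS, " bw
        }
    ' "$@"
}
```

Usage would be e.g. `fio_summary guest.txt` and `fio_summary host.txt`; for the guest numbers above, the first line would read `IOPS write: 8441 IOPS, 32.0MiB/s`.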

Machine Info:

- Proxmox 7.3-3

- Intel Xeon E3-1260 (8 CPU)

- 64GB memory

- 2x 6TB mirror + 2x 8TB mirror in one ZFS pool, 8k block size, thin provisioning active

- All WD Red Pro Disks, ashift=12
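The guest benchmark uses 4k random writes, while the zvols use an 8k block size. As a rough, hedged illustration: ZFS writes whole blocks (copy-on-write), so a write smaller than the zvol block still produces a full new block, plus a read of the old block if it is not cached. A small arithmetic sketch; the function name is hypothetical, and it ignores compression, metadata and ZIL traffic:

```bash
#!/bin/bash
# Hypothetical helper: rough write amplification of guest writes of
# <io_bytes> on a zvol with volblocksize <block_bytes>.
# Assumes block-aligned writes; ignores compression, metadata and the ZIL.
zvol_write_amplification() {
    local io=$1 block=$2 written
    # whole blocks touched (rounded up) times the block size
    written=$(( (io + block - 1) / block * block ))
    # print written/io with two decimals using integer arithmetic
    printf '%d.%02d\n' $(( written / io )) $(( written * 100 / io % 100 ))
}
```

For the 4k fio jobs, `zvol_write_amplification 4096 8192` gives `2.00` per disk in the mirror; for the 1M sequential jobs it gives `1.00`.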

Configuration of the machine which executed fio (I added the disk limits later to work around the issue):

Cache is set to "Default (No Cache)".

Other tasks, like vzdump, exhaust the I/O as well. I reduced the worker count to 1 to "solve" this.

The I/O scheduler is "mq-deadline" on all disks; the only other available option would be "none".
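For reference, the kernel lists the available schedulers per disk in sysfs and marks the active one in brackets (e.g. `[mq-deadline] none`). A minimal sketch that prints the active scheduler of every block device; the function names are my own, and the sysfs root is a parameter only so the parsing can be checked against a fake directory:

```bash
#!/bin/bash
# Hypothetical helpers: show the active I/O scheduler of each block device.
# /sys/block/<dev>/queue/scheduler contains e.g. "[mq-deadline] none",
# where the bracketed entry is the one in use.
active_scheduler() {
    # print the bracketed word from the scheduler line on stdin
    sed -n 's/.*\[\([^]]*\)\].*/\1/p'
}

list_schedulers() {
    local root=${1:-/sys/block} f dev
    for f in "$root"/*/queue/scheduler; do
        [ -r "$f" ] || continue   # skip if the glob matched nothing
        dev=${f#"$root"/}; dev=${dev%%/*}
        printf '%s %s\n' "$dev" "$(active_scheduler < "$f")"
    done
}
```

The scheduler can be changed at runtime with `echo none > /sys/block/sdX/queue/scheduler` (as root, not persistent across reboots), which makes it cheap to test whether it changes anything.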

Kernel:

Linux eisenbart 6.2.9-1-pve #1 SMP PREEMPT_DYNAMIC PVE 6.2.9-1 (2023-03-31T10:48Z) x86_64 GNU/Linux

The SMART values of all disks are OK.

Most of the VMs have VirtIO SCSI configured as controller; two have LSI53C895A because I forgot to change it when creating them. These two crash under high load. Does it make sense to switch them to VirtIO SCSI single + iothread?
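On the Proxmox host, such a switch would roughly look like the commands below. This is only a sketch: VMID `101` and the volume `local-zfs:vm-101-disk-0` are placeholders, the guest needs a VirtIO SCSI driver (usually present in Linux kernels, to be installed beforehand on Windows), and the new controller only takes effect after the VM is fully stopped and started again. The hypothetical helper just prints the `qm` commands so they can be reviewed before running them:

```bash
#!/bin/bash
# Hypothetical helper: print (not run) the qm commands that switch a VM to
# "VirtIO SCSI single" and enable an IO thread on one disk.
# Arguments: <vmid> <drive slot, e.g. scsi0> <volume incl. existing options>
print_controller_switch() {
    local vmid=$1 slot=$2 volume=$3
    echo "qm set $vmid --scsihw virtio-scsi-single"
    # qm set replaces the whole drive string, so existing options must be kept
    echo "qm set $vmid --$slot $volume,iothread=1"
}
```

For example, `print_controller_switch 101 scsi0 local-zfs:vm-101-disk-0` prints the two commands for that disk.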

I would expect the kernel/scheduler to make sure that one process cannot break the whole system, but this is not the case. I have now added limits to all VM disks, like in the screenshot.
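The disk limits from the GUI are stored as options on the drive line (`mbps_rd`, `mbps_wr`, `iops_rd`, `iops_wr`), so they can also be applied to many disks from the shell. A sketch that builds such a drive string; the function name, the volume and the limit values are placeholders, not recommendations, and since `qm set` replaces the whole drive string, any options the disk already has need to be included in the volume argument:

```bash
#!/bin/bash
# Hypothetical helper: append throughput/IOPS limits to a Proxmox drive string.
# Arguments: <volume> [MB/s read] [MB/s write] [IOPS read] [IOPS write]
# A missing value or 0 leaves that limit out.
drive_with_limits() {
    local drive=$1 key val
    shift
    for key in mbps_rd mbps_wr iops_rd iops_wr; do
        val=${1:-0}
        [ $# -gt 0 ] && shift
        [ "$val" != 0 ] && drive+=",$key=$val"
    done
    printf '%s\n' "$drive"
}
```

Usage could look like `qm set 101 --scsi0 "$(drive_with_limits local-zfs:vm-101-disk-0 200 100 2000 1000)"`.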

Is there a better solution?

Or should I think about a hardware upgrade?

Regards

Christian