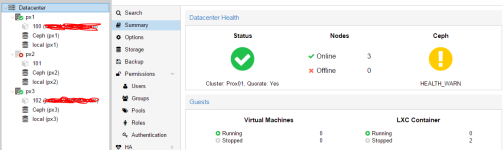

HA cluster completely broken after server maintenance

- Thread starter 100percentjake

- Start date

Hi,

My PX/Ceph HA cluster with three nodes (PX1, PX2, PX3) needed a RAM upgrade, so I shut down PX1, did my work, and powered it back up. Back at my desk I took a look at the management GUI and saw "Nodes online: 3" despite PX2 and PX3's icons in the left-hand panel showing "offline". That's odd, I thought, so I logged into the GUI from PX2 to see if it was because PX1 hadn't synced yet. It looked the same, but Ceph was showing that PX2's and PX3's OSDs were offline. Odd that it would show PX2's OSDs as offline when that's the computer I was on. The VM that was on PX1 was now on PX3, as I expected, but now when I try to start any VMs the task log just shows "CT 100 - Start" for infinity and my ability to manage the other nodes goes away. Most confusingly, logging into the GUI on node PX2 and trying to manage PX2, the very server I'm on in the first place, results in timeout errors. I tried rebooting all three physical machines as well as "pvestatd restart" but I can't get everything to sync again.

Hi,

perhaps the time on PX1 was wrong, so it can't join the PVE and Ceph clusters?

Does "I can't get everything to sync again" mean the PVE cluster, the Ceph cluster, or both?

Did you change anything in /etc/hosts or DNS regarding the node names?

Is the time right on all nodes?

What does "pvecm status" show on all nodes?

Do you use different MTUs for the cluster communication?

What is the output of "ceph -s"?

Are all three Ceph mons running?

Udo

Just checked and the clock on all three systems is in sync.

Nothing has been changed in any config files since it was last working.

This is the result of pvecm, it is the same on all nodes other than the membership information as to which one is local:

Code:

    Quorum information
    ------------------
    Date:             Mon Jan 23 10:20:08 2017
    Quorum provider:  corosync_votequorum
    Nodes:            3
    Node ID:          0x00000001
    Ring ID:          1/1284
    Quorate:          Yes

    Votequorum information
    ----------------------
    Expected votes:   3
    Highest expected: 3
    Total votes:      3
    Quorum:           2
    Flags:            Quorate

    Membership information
    ----------------------
        Nodeid      Votes Name
    0x00000001          1 10.10.30.220 (local)
    0x00000002          1 10.10.30.221
    0x00000003          1 10.10.30.222
    root@px1:~#

All three nodes use eth0 for communication. eth1 is set up for public IPs and VM access.

Code:

    root@px1:~# ceph -s
        cluster 1945fcfb-35e0-46da-89b3-f7b0ff909786
         health HEALTH_WARN
                64 pgs down
                64 pgs peering
                64 pgs stuck inactive
                64 pgs stuck unclean
                2 requests are blocked > 32 sec
         monmap e3: 3 mons at {0=10.10.30.220:6789/0,1=10.10.30.221:6789/0,2=10.10.30.222:6789/0}
                election epoch 48, quorum 0,1,2 0,1,2
         osdmap e73: 3 osds: 1 up, 1 in
          pgmap v208292: 64 pgs, 1 pools, 13496 MB data, 3550 objects
                13459 MB used, 801 GB / 814 GB avail
                      64 down+peering

Came in today and logged into the web GUI for PX3, and it showed all three servers being up and all being hunky-dory, so I went to migrate a VM from PX3 to PX1, which appears to have worked. But when I went to start the VMs on PX1 and PX2 I got a 500 error, PX2 dropped offline, Ceph went down and out on PX1 and PX2, and I lost the ability to manage any node.

It seems to be conflicting information as the datacenter dropdown shows PX1 and PX3 online and PX2 offline but it says in the health summary that all three nodes are online.

I ran pveceph start on px1 and px2 and everything came back into sync, my VMs show as running, and all the nodes are working together. But I still don't understand at all how this happened in the first place or how I can fix it.

Code:

    root@px2:~# pveceph start
    === osd.1 ===
    create-or-move updated item name 'osd.1' weight 1.45 at location {host=px2,root=default} to crush map
    Starting Ceph osd.1 on px2...
    Running as unit ceph-osd.1.1485189185.054775388.service.
    === mon.1 ===
    Starting Ceph mon.1 on px2...already running
    === osd.1 ===
    Starting Ceph osd.1 on px2...already running

This also doesn't explain why restarting the nodes didn't get them to sync back up, either, since I assume "pveceph start" is executed on system boot to, well, initialize ceph and according to that code it was already running unless I'm reading that wrong.

The only thing that appeared in the log for PX1 and PX2 when they came back into play was

Code:

    Jan 23 10:36:23 px2 pmxcfs[1342]: [status] notice: received log

and the cluster log didn't give a damn that its metaphorical arm and leg were back in action.

Code:

    Jan 23 10:33:45 px1 pmxcfs[1356]: [status] notice: RRD update error /var/lib/rrdcached/db/pve2-storage/px1/local: /var/lib/rrdcached/db/pve2-storage/px1/local: illegal attempt to update using time 1485189225 when last update time is 1485189225 (minimum one second step)

This is... odd. The times are the same.
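For what it's worth, the RRD message reads less like a clock mismatch and more like two status updates landing within the same second: going by the message's own wording ("minimum one second step"), an update is rejected unless its timestamp is later than the previous one. Here is a minimal Python sketch of that rule using the timestamp from the log line. The function name `rrd_update_allowed` is made up for illustration and is not part of rrdtool or pmxcfs.

```python
from datetime import datetime, timezone

def rrd_update_allowed(last_update: int, new_update: int) -> bool:
    """Hypothetical helper: accept an update only if it is at least one second newer."""
    return new_update > last_update

# Both values are taken from the pmxcfs log line in the post.
last_update = 1485189225
new_update = 1485189225

# Same second on both sides, so the update is refused.
print(rrd_update_allowed(last_update, new_update))      # False
print(rrd_update_allowed(last_update, new_update + 1))  # True

# The epoch value converted to UTC, for comparison with the local log time.
as_utc = datetime.fromtimestamp(new_update, tz=timezone.utc)
print(as_utc.isoformat())  # 2017-01-23T16:33:45+00:00
```

The UTC time lines up with the local 10:33:45 log stamp if the node runs at UTC-6, which suggests the clocks agree and that equal times are exactly what triggers the notice.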

Hi,...

OK, only two of the OSDs aren't running; everything else (the status in the web frontend) is not important.

Look with

Code:

    ceph osd tree

Go to these nodes (one by one) and start the OSDs again. If an OSD doesn't come up, look in its log to see why not (but normally they will start).

If you reboot all nodes simultaneously, not all OSDs will come up, because the Ceph cluster doesn't have quorum at that time. Once the mons have established quorum, the OSDs must be restarted.

Udo
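The "3 osds: 1 up, 1 in" figure that Udo reads off the `ceph -s` output can also be pulled out by a script, for example to alert when OSDs are missing after a reboot. This is only a sketch in Python: the regular expression matches the osdmap line exactly as it was printed in this thread, other Ceph releases may format that line differently, and `parse_osdmap` is a hypothetical helper name.

```python
import re

# Matches the osdmap summary line as printed in this thread, e.g.
# "osdmap e73: 3 osds: 1 up, 1 in". This format is an assumption for other releases.
OSDMAP_RE = re.compile(
    r"osdmap e(?P<epoch>\d+): (?P<total>\d+) osds: "
    r"(?P<num_up>\d+) up, (?P<num_in>\d+) in"
)

def parse_osdmap(status_text):
    """Return epoch/total/num_up/num_in as ints, or None if no osdmap line is found."""
    match = OSDMAP_RE.search(status_text)
    if match is None:
        return None
    return {name: int(value) for name, value in match.groupdict().items()}

# The line from the post.
sample = "     osdmap e73: 3 osds: 1 up, 1 in"
counts = parse_osdmap(sample)
osds_not_up = counts["total"] - counts["num_up"]
print(counts)
print("OSDs not up:", osds_not_up)  # OSDs not up: 2
```

For anything beyond a quick check, `ceph -s --format json` gives machine-readable output that does not depend on the text layout.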

osd.0 and osd.1 on PX1 and PX2, respectively, weren't coming up. They come up fine if I start them manually but why do I have to do the manual start? It seems to defeat the purpose of a high-availability cluster if I have to manually re-start the OSDs after a server goes off-line.

An HA cluster should NEVER go down; you have to build your system so that you NEVER lose quorum.

As long as you have quorum, the issue you describe cannot happen. If you lose quorum, some manual steps may be needed to bring everything back online.

Hi,

to be sure: is everything running fine again?

Like I wrote before, without Ceph quorum the OSDs will not start. What happened during your first boot is the question; perhaps you can find the answer in the log files (mon, osd).

Udo

"If you lose the quorum..."

So if I have four+ systems in my cluster I will be able to take down a system for maintenance and the cluster will remain functional?

Yes, @udo, all is well now that I restarted the osds. I'm still learning the fundamentals of how these clusters are supposed to operate and didn't realize they required quorum for all functions.

Hi,

with three nodes you can also take one down for maintenance; the remaining two have quorum (PVE cluster + Ceph cluster).

If you shut down a Ceph node (mon + OSDs), it's a good idea to stop the OSDs first. There is an issue in the init script which can produce a hiccup if the mon and OSDs are stopped at the same time.

Many 3-node clusters run without trouble.

Udo
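The quorum arithmetic behind this answer can be sketched in a few lines of Python. It assumes one vote per node, as in the `pvecm` output earlier in the thread (Expected votes: 3, Quorum: 2), and a strict-majority rule, which is also what the Ceph monitors need. The function names are made up for illustration.

```python
def votes_needed(expected_votes: int) -> int:
    """Smallest strict majority of the expected votes."""
    return expected_votes // 2 + 1

def is_quorate(expected_votes: int, votes_online: int) -> bool:
    """True if the nodes still online hold a majority."""
    return votes_online >= votes_needed(expected_votes)

def tolerated_failures(expected_votes: int) -> int:
    """How many one-vote nodes can be down while the rest stay quorate."""
    return expected_votes - votes_needed(expected_votes)

for nodes in (3, 4, 5):
    print(
        f"{nodes} nodes: quorum {votes_needed(nodes)}, "
        f"can lose {tolerated_failures(nodes)}"
    )

# Three nodes with PX1 shut down: two votes remain, which is still a majority.
print(is_quorate(3, 2))  # True
```

Under these assumptions a fourth node does not buy any extra tolerance (three and four nodes can each lose one), while five nodes can lose two.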

Is there any workaround for that init-script issue? Because if I had a machine unexpectedly go down, as I was simulating with this maintenance by shutting down PX1 "unexpectedly", I appear to lose quorum on the remaining two nodes.