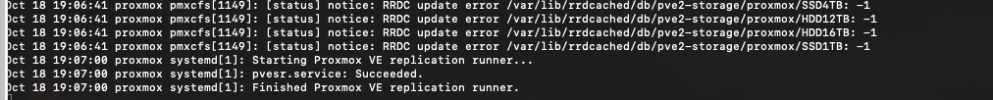

Oct 18 21:52:52 proxmox pmxcfs[1149]: [status] notice: RRD update error /var/lib/rrdcached/db/pve2-storage/proxmox/HDD12TB: /var/lib/rrdcached/db/pve2-storage/proxmox/HDD12TB: illegal attempt to update using time 1634586772 when last update time is 1634586772 (minimum one second step)

Oct 18 21:52:53 proxmox pvedaemon[1187]: <root@pam> successful auth for user 'root@pam'

Oct 18 21:53:00 proxmox kernel: [38103.739643] kvm invoked oom-killer: gfp_mask=0x100cca(GFP_HIGHUSER_MOVABLE), order=0, oom_score_adj=0

Oct 18 21:53:00 proxmox kernel: [38103.739649] CPU: 2 PID: 107607 Comm: kvm Tainted: P O 5.11.22-5-pve #1

Oct 18 21:53:00 proxmox kernel: [38103.739651] Hardware name: Gigabyte Technology Co., Ltd. H170N-WIFI/H170N-WIFI-CF, BIOS F22e 03/09/2018

Oct 18 21:53:00 proxmox kernel: [38103.739652] Call Trace:

Oct 18 21:53:00 proxmox kernel: [38103.739654] dump_stack+0x70/0x8b

Oct 18 21:53:00 proxmox kernel: [38103.739658] dump_header+0x4f/0x1f6

Oct 18 21:53:00 proxmox kernel: [38103.739661] oom_kill_process.cold+0xb/0x10

Oct 18 21:53:00 proxmox kernel: [38103.739663] out_of_memory+0x1cf/0x520

Oct 18 21:53:00 proxmox kernel: [38103.739667] __alloc_pages_slowpath.constprop.0+0xc6d/0xd60

Oct 18 21:53:00 proxmox kernel: [38103.739670] __alloc_pages_nodemask+0x2e0/0x310

Oct 18 21:53:00 proxmox kernel: [38103.739673] alloc_pages_current+0x87/0x110

Oct 18 21:53:00 proxmox kernel: [38103.739676] pagecache_get_page+0x18a/0x3b0

Oct 18 21:53:00 proxmox kernel: [38103.739678] filemap_fault+0x6ce/0xa10

Oct 18 21:53:00 proxmox kernel: [38103.739680] ? alloc_set_pte+0xf6/0x650

Oct 18 21:53:00 proxmox kernel: [38103.739682] ext4_filemap_fault+0x32/0x50

Oct 18 21:53:00 proxmox kernel: [38103.739685] __do_fault+0x3c/0xe0

Oct 18 21:53:00 proxmox kernel: [38103.739688] handle_mm_fault+0x12db/0x1a70

Oct 18 21:53:00 proxmox kernel: [38103.739690] do_user_addr_fault+0x1a0/0x450

Oct 18 21:53:00 proxmox kernel: [38103.739692] ? exit_to_user_mode_prepare+0x75/0x190

Oct 18 21:53:00 proxmox kernel: [38103.739695] exc_page_fault+0x69/0x150

Oct 18 21:53:00 proxmox kernel: [38103.739698] ? asm_exc_page_fault+0x8/0x30

Oct 18 21:53:00 proxmox kernel: [38103.739700] asm_exc_page_fault+0x1e/0x30

Oct 18 21:53:00 proxmox kernel: [38103.739702] RIP: 0033:0x5628fa4a7680

Oct 18 21:53:00 proxmox kernel: [38103.739707] Code: Unable to access opcode bytes at RIP 0x5628fa4a7656.

Oct 18 21:53:00 proxmox kernel: [38103.739708] RSP: 002b:00007ffd0990f5f8 EFLAGS: 00010246

Oct 18 21:53:00 proxmox kernel: [38103.739710] RAX: 0000000000000000 RBX: 00007ffd0990f604 RCX: 0000000000000000

Oct 18 21:53:00 proxmox kernel: [38103.739711] RDX: 0000000000000000 RSI: 0000000000000000 RDI: 0000000000000000

Oct 18 21:53:00 proxmox kernel: [38103.739712] RBP: 0000000000000000 R08: 0000000000000000 R09: 0000000000000028

Oct 18 21:53:00 proxmox kernel: [38103.739713] R10: 0000000001210b04 R11: 00007ffd099af080 R12: 00005628fa6063d9

Oct 18 21:53:00 proxmox kernel: [38103.739714] R13: 0000000000000000 R14: 0000000000000000 R15: 0000000000000000

Oct 18 21:53:00 proxmox kernel: [38103.739716] Mem-Info:

Oct 18 21:53:00 proxmox kernel: [38103.739717] active_anon:5230116 inactive_anon:2604747 isolated_anon:0

Oct 18 21:53:00 proxmox kernel: [38103.739717] active_file:2823 inactive_file:1088 isolated_file:89

Oct 18 21:53:00 proxmox kernel: [38103.739717] unevictable:3021 dirty:2 writeback:0

Oct 18 21:53:00 proxmox kernel: [38103.739717] slab_reclaimable:37453 slab_unreclaimable:144144

Oct 18 21:53:00 proxmox kernel: [38103.739717] mapped:10103 shmem:12443 pagetables:22190 bounce:0

Oct 18 21:53:00 proxmox kernel: [38103.739717] free:48877 free_pcp:0 free_cma:0

Oct 18 21:53:00 proxmox kernel: [38103.739721] Node 0 active_anon:20920464kB inactive_anon:10418988kB active_file:11292kB inactive_file:4352kB unevictable:12084kB isolated(anon):0kB isolated(file):356kB mapped:40412kB dirty:8kB writeback:0kB shmem:49772kB shmem_thp: 0kB shmem_pmdmapped: 0kB anon_thp: 10883072kB writeback_tmp:0kB kernel_stack:5664kB pagetables:88760kB all_unreclaimable? yes

Oct 18 21:53:00 proxmox kernel: [38103.739724] Node 0 DMA free:11776kB min:32kB low:44kB high:56kB reserved_highatomic:0KB active_anon:0kB inactive_anon:0kB active_file:0kB inactive_file:0kB unevictable:0kB writepending:0kB present:15988kB managed:15888kB mlocked:0kB bounce:0kB free_pcp:0kB local_pcp:0kB free_cma:0kB

Oct 18 21:53:00 proxmox kernel: [38103.739728] lowmem_reserve[]: 0 2799 31917 31917 31917

Oct 18 21:53:00 proxmox kernel: [38103.739731] Node 0 DMA32 free:122140kB min:5924kB low:8788kB high:11652kB reserved_highatomic:0KB active_anon:2076928kB inactive_anon:699972kB active_file:44kB inactive_file:0kB unevictable:0kB writepending:0kB present:3030420kB managed:2930748kB mlocked:0kB bounce:0kB free_pcp:0kB local_pcp:0kB free_cma:0kB

Oct 18 21:53:00 proxmox kernel: [38103.739735] lowmem_reserve[]: 0 0 29117 29117 29117

Oct 18 21:53:00 proxmox kernel: [38103.739738] Node 0 Normal free:61592kB min:61624kB low:91440kB high:121256kB reserved_highatomic:0KB active_anon:18843244kB inactive_anon:9719228kB active_file:10980kB inactive_file:4256kB unevictable:12084kB writepending:0kB present:30392320kB managed:29816460kB mlocked:11944kB bounce:0kB free_pcp:0kB local_pcp:0kB free_cma:0kB

Oct 18 21:53:00 proxmox kernel: [38103.739742] lowmem_reserve[]: 0 0 0 0 0

Oct 18 21:53:00 proxmox kernel: [38103.739744] Node 0 DMA: 4*4kB (U) 2*8kB (U) 2*16kB (U) 0*32kB 3*64kB (U) 2*128kB (U) 0*256kB 0*512kB 1*1024kB (U) 1*2048kB (M) 2*4096kB (M) = 11776kB

Oct 18 21:53:00 proxmox kernel: [38103.739756] Node 0 DMA32: 1869*4kB (UME) 2015*8kB (UME) 720*16kB (UME) 401*32kB (UME) 92*64kB (UME) 56*128kB (UME) 42*256kB (UME) 25*512kB (UME) 38*1024kB (UME) 0*2048kB 0*4096kB = 123468kB

Oct 18 21:53:00 proxmox kernel: [38103.739767] Node 0 Normal: 1987*4kB (UME) 675*8kB (UME) 1233*16kB (UME) 529*32kB (UME) 212*64kB (UME) 0*128kB 0*256kB 0*512kB 0*1024kB 0*2048kB 0*4096kB = 63572kB

Oct 18 21:53:00 proxmox kernel: [38103.739777] Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=1048576kB

Oct 18 21:53:00 proxmox kernel: [38103.739779] Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=2048kB

Oct 18 21:53:00 proxmox kernel: [38103.739780] 21161 total pagecache pages

Oct 18 21:53:00 proxmox kernel: [38103.739781] 2051 pages in swap cache

Oct 18 21:53:00 proxmox kernel: [38103.739782] Swap cache stats: add 1513965, delete 1511918, find 1459756/1570690

Oct 18 21:53:00 proxmox kernel: [38103.739783] Free swap = 0kB

Oct 18 21:53:00 proxmox kernel: [38103.739783] Total swap = 79996kB

Oct 18 21:53:00 proxmox kernel: [38103.739784] 8359682 pages RAM

Oct 18 21:53:00 proxmox kernel: [38103.739785] 0 pages HighMem/MovableOnly

Oct 18 21:53:00 proxmox kernel: [38103.739785] 168908 pages reserved

Oct 18 21:53:00 proxmox kernel: [38103.739786] 0 pages hwpoisoned

Oct 18 21:53:00 proxmox kernel: [38103.739786] Tasks state (memory values in pages):

.. snip ..

(null),cpuset=qemu.slice,mems_allowed=0,global_oom,task_memcg=/qemu.slice/304.scope,task=kvm,pid=107607,uid=0

Oct 18 21:53:00 proxmox kernel: [38103.739934] Out of memory: Killed process 107607 (kvm) total-vm:16112304kB, anon-rss:11667428kB, file-rss:0kB, shmem-rss:4kB, UID:0 pgtables:24492kB oom_score_adj:0

Oct 18 21:53:00 proxmox kernel: [38104.034560] oom_reaper: reaped process 107607 (kvm), now anon-rss:0kB, file-rss:68kB, shmem-rss:4kB

Oct 18 21:53:00 proxmox kernel: [38104.038998] vmbr2: port 6(tap304i0) entered disabled state

Oct 18 21:53:00 proxmox kernel: [38104.039078] vmbr2: port 6(tap304i0) entered disabled state

Oct 18 21:53:00 proxmox systemd[1]: 304.scope: A process of this unit has been killed by the OOM killer.

Oct 18 21:53:00 proxmox systemd[1]: 304.scope: Succeeded.

Oct 18 21:53:00 proxmox systemd[1]: 304.scope: Consumed 9min 2.782s CPU time.
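
To make the numbers in the dump above easier to read, here is a minimal sketch that pulls the victim out of the `Out of memory: Killed process` line and converts the page counts from `Mem-Info` into GiB. It assumes 4 KiB pages (the x86-64 default, consistent with `active_anon:5230116` pages vs. `active_anon:20920464kB` above). The function names `parse_oom_kill` and `pages_to_gib` are made up for this illustration and are not part of any Proxmox or kernel tooling.

```python
import re

PAGE_KB = 4  # assumption: 4 KiB pages, as on a default x86-64 kernel

# Matches the kernel's "Out of memory: Killed process ..." summary line.
KILL_RE = re.compile(
    r"Out of memory: Killed process (?P<pid>\d+) \((?P<comm>[^)]+)\) "
    r"total-vm:(?P<total_vm>\d+)kB, anon-rss:(?P<anon_rss>\d+)kB"
)


def parse_oom_kill(line):
    """Return pid, command and memory figures (in GiB) of the killed process,
    or None if the line is not an OOM kill summary."""
    m = KILL_RE.search(line)
    if m is None:
        return None
    return {
        "pid": int(m["pid"]),
        "comm": m["comm"],
        "total_vm_gib": int(m["total_vm"]) / 1024**2,
        "anon_rss_gib": int(m["anon_rss"]) / 1024**2,
    }


def pages_to_gib(pages, page_kb=PAGE_KB):
    """Convert a page count from the Mem-Info block to GiB."""
    return pages * page_kb / 1024**2


if __name__ == "__main__":
    kill_line = (
        "Oct 18 21:53:00 proxmox kernel: [38103.739934] Out of memory: "
        "Killed process 107607 (kvm) total-vm:16112304kB, "
        "anon-rss:11667428kB, file-rss:0kB, shmem-rss:4kB, UID:0 "
        "pgtables:24492kB oom_score_adj:0"
    )
    print(parse_oom_kill(kill_line))
    # Values copied from the Mem-Info block in the log above.
    print("anon (active+inactive) GiB:", pages_to_gib(5230116 + 2604747))
    print("total RAM GiB:", pages_to_gib(8359682))
```

Run against this log, the killed `kvm` process (pid 107607, VM 304) comes out at roughly 11.1 GiB anon-rss, while anonymous memory overall is about 29.9 GiB of about 31.9 GiB RAM, with `Free swap = 0kB`.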