Bash:


INFO: starting new backup job: vzdump 100 --compress zstd --remove 0 --notes-template stable2 --storage local --node pve --mode snapshot

INFO: Starting Backup of VM 100 (qemu)

INFO: Backup started at 2023-05-01 12:30:56

INFO: status = running

INFO: VM Name: windows

INFO: include disk 'scsi0' 'local-zfs:vm-100-disk-1' 80G

INFO: exclude disk 'scsi5' '/dev/disk/by-id/nvme-GIGABYTE_GP-GSM2NE3100TNTD_SN200908905007' (backup=no)

INFO: exclude disk 'scsi6' '/dev/disk/by-id/usb-Seagate_BUP_RD_NA9FPY7F-0:0' (backup=no)

INFO: include disk 'efidisk0' 'local-zfs:vm-100-disk-0' 1M

INFO: include disk 'tpmstate0' 'local-zfs:vm-100-disk-2' 4M

INFO: backup mode: snapshot

INFO: ionice priority: 7

INFO: creating vzdump archive '/var/lib/vz/dump/vzdump-qemu-100-2023_05_01-12_30_56.vma.zst'

INFO: attaching TPM drive to QEMU for backup

INFO: issuing guest-agent 'fs-freeze' command

INFO: issuing guest-agent 'fs-thaw' command

INFO: started backup task 'e4c98bd9-200b-44c9-8bf5-5e4f8978fe46'

INFO: resuming VM again

INFO: 0% (721.4 MiB of 80.0 GiB) in 3s, read: 240.5 MiB/s, write: 224.6 MiB/s

INFO: 1% (1.2 GiB of 80.0 GiB) in 6s, read: 175.2 MiB/s, write: 174.7 MiB/s

INFO: 2% (1.8 GiB of 80.0 GiB) in 9s, read: 200.6 MiB/s, write: 196.6 MiB/s

INFO: 3% (2.5 GiB of 80.0 GiB) in 13s, read: 178.5 MiB/s, write: 178.1 MiB/s

INFO: 3% (2.7 GiB of 80.0 GiB) in 15s, read: 124.3 MiB/s, write: 123.2 MiB/s

ERROR: job failed with err -125 - Operation canceled

INFO: aborting backup job

INFO: resuming VM again

ERROR: Backup of VM 100 failed - job failed with err -125 - Operation canceled

INFO: Failed at 2023-05-01 12:31:14

INFO: Backup job finished with errors

TASK ERROR: job errors

From /var/log/daemon.log:

Bash:

May 1 12:45:18 pve pvedaemon[2474]: <root@pam> starting task UPID:pve:0002A71C:00023BE0:644F43DE:vzdump:100:root@pam:

May 1 12:45:18 pve pvedaemon[173852]: INFO: starting new backup job: vzdump 100 --remove 0 --compress zstd --mode snapshot --node pve --storage local --notes-template stable2

May 1 12:45:18 pve pvedaemon[173852]: INFO: Starting Backup of VM 100 (qemu)

May 1 12:45:35 pve zed: eid=15 class=checksum pool='rpool' vdev=nvme-eui.0025385521403c96-part3 algorithm=fletcher4 size=8192 offset=1208656699392 priority=0 err=52 flags=0x380880 bookmark=15585:1:0:389466

May 1 12:45:40 pve zed: eid=16 class=data pool='rpool' priority=0 err=52 flags=0x8881 bookmark=15585:1:0:530503

May 1 12:45:40 pve zed: eid=17 class=checksum pool='rpool' vdev=nvme-eui.0025385521403c96-part3 algorithm=fletcher4 size=8192 offset=1209360121856 priority=0 err=52 flags=0x380880 bookmark=15585:1:0:530503

May 1 12:45:41 pve pvedaemon[173852]: ERROR: Backup of VM 100 failed - job failed with err -125 - Operation canceled

May 1 12:45:41 pve pvedaemon[173852]: INFO: Backup job finished with errors

May 1 12:45:41 pve pvedaemon[173852]: job errors

May 1 12:45:41 pve pvedaemon[2474]: <root@pam> end task UPID:pve:0002A71C:00023BE0:644F43DE:vzdump:100:root@pam: job errors

May 1 12:45:57 pve sniproxy[2069]: Request from [::ffff:193.118.53.210]:39142 did not include a hostname

One of my backups is failing. Where can I get more info about it?

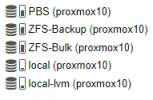

There is plenty of storage as it is a new setup.
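The only non-backup entries in the daemon.log excerpt during the failure window are the `zed` lines, which report `class=checksum` and `class=data` events on `rpool`. To pull those out of a longer log, something like the rough sketch below might help. It assumes the syslog line layout shown in the excerpt; the function name `parse_zed_events` and the `sample` list are just illustrative, not part of any Proxmox or ZFS tool.

```python
import re

# Matches syslog-style zed lines in the layout seen in the excerpt
# (assumption: "<Mon> <day> <time> <host> zed: key=value ...").
ZED_LINE = re.compile(r"^(?P<ts>\w+ +\d+ [\d:]+) \S+ zed: (?P<fields>.*)$")


def parse_zed_events(lines):
    """Return one dict per zed line, with its key=value fields split out."""
    events = []
    for line in lines:
        m = ZED_LINE.match(line.strip())
        if not m:
            continue  # not a zed line (e.g. pvedaemon, sniproxy)
        fields = {}
        for pair in m.group("fields").split():
            if "=" in pair:
                key, value = pair.split("=", 1)
                fields[key] = value.strip("'")  # pool='rpool' -> rpool
        fields["timestamp"] = m.group("ts")
        events.append(fields)
    return events


# Lines copied from the daemon.log excerpt above.
sample = [
    "May 1 12:45:18 pve pvedaemon[173852]: INFO: Starting Backup of VM 100 (qemu)",
    "May 1 12:45:35 pve zed: eid=15 class=checksum pool='rpool' vdev=nvme-eui.0025385521403c96-part3 algorithm=fletcher4 size=8192 offset=1208656699392 priority=0 err=52 flags=0x380880 bookmark=15585:1:0:389466",
    "May 1 12:45:40 pve zed: eid=16 class=data pool='rpool' priority=0 err=52 flags=0x8881 bookmark=15585:1:0:530503",
    "May 1 12:45:40 pve zed: eid=17 class=checksum pool='rpool' vdev=nvme-eui.0025385521403c96-part3 algorithm=fletcher4 size=8192 offset=1209360121856 priority=0 err=52 flags=0x380880 bookmark=15585:1:0:530503",
]

events = parse_zed_events(sample)
for ev in events:
    print(ev["timestamp"], ev["class"], ev["pool"], ev.get("vdev", "-"))
```

To run it against the real file, replace `sample` with the lines read from /var/log/daemon.log.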
