Hi devs and users,

Personally, I use Proxmox in multiple domains (private and semi-professional).

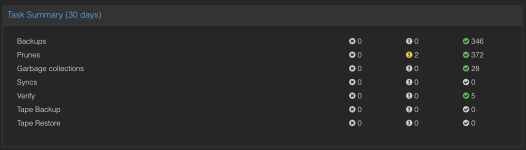

For this reason, I also run two Proxmox Backup Server (PBS) instances to handle the backups. All servers do daily backups, some even more often: in total ~5 TB, with ~200 GB of changes per week.

One server runs as a VM on a ZFS filesystem, with data on HDDs and metadata cached on SSD. The other is "a bit weird": a cloud instance that uses remotely mounted storage, for financial reasons.

Both of them run really well, with decent speed for backup creation and restore, even the one on remote storage.

But one thing came up: the speed of garbage collection.

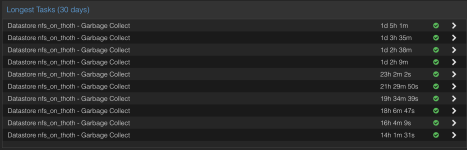

On the remote storage server, one run of garbage collection takes almost 2 days...

On the local storage server, one run takes 10 hours.

I know why this takes so long. PBS goes through all index files, "touches" every chunk they reference, and afterwards deletes all chunks that were not touched. This touching involves the operating system and the filesystem (even if you use features like relatime).
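
To make the cost visible, here is a minimal mark-and-sweep sketch of that process. It assumes a flat directory of chunk files named by digest and index files reduced to plain lists of digests; the function name `garbage_collect` and the `GRACE_SECONDS` cutoff are hypothetical and not taken from the PBS code. What it shows is that phase 1 issues one `os.utime` call per chunk *reference*, even if the chunk was already touched earlier in the same run.

```python
import os
import time
from pathlib import Path

# Hypothetical grace period: chunks whose atime is older than this before
# the GC start are treated as unreferenced. Assumption for illustration only.
GRACE_SECONDS = 24 * 3600 + 5 * 60


def garbage_collect(chunk_dir: Path, index_files: list[list[str]],
                    gc_start: float) -> tuple[list[str], int]:
    """Mark-and-sweep over a flat directory of chunk files named by digest."""
    touches = 0
    # Phase 1 (mark): touch every chunk referenced by every index file.
    # Each os.utime call is one syscall, even for an already-touched chunk.
    for index in index_files:
        for digest in index:
            os.utime(chunk_dir / digest)  # set atime/mtime to the current time
            touches += 1
    # Phase 2 (sweep): delete chunks whose atime predates the cutoff.
    cutoff = gc_start - GRACE_SECONDS
    removed = []
    for chunk in chunk_dir.iterdir():
        if chunk.stat().st_atime < cutoff:
            chunk.unlink()
            removed.append(chunk.name)
    return sorted(removed), touches
```

With index files `[["aa", "bb"], ["bb"]]`, chunk `bb` is touched twice (three touches in total), and an unreferenced chunk `cc` with an old atime is deleted. Because `os.utime` sets the timestamps explicitly, mount options such as relatime do not reduce the number of these calls.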

The number of required OS calls scales with the amount of data (more precisely, the number of chunks referenced) and with the number of index files.

That means if you want to keep higher-frequency backups, this quickly scales to billions of touches per garbage collection cycle.
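
A back-of-the-envelope helper for this scaling, assuming fixed 4 MiB chunks (the default chunk size for VM images that the "1 million chunks means 4TB" figure relies on). The function names and the 4 TiB / 1,000 snapshot numbers are hypothetical, chosen only to illustrate the order of magnitude.

```python
# Assumed default fixed chunk size for VM images: 4 MiB.
CHUNK_SIZE = 4 * 1024 * 1024


def chunks_per_index(referenced_bytes: int, chunk_size: int = CHUNK_SIZE) -> int:
    """Number of chunk references in one index file (ceiling division)."""
    return -(-referenced_bytes // chunk_size)


def touches_without_dedup(referenced_bytes: int, snapshots: int) -> int:
    """Every snapshot's index references all of its chunks, so touches add up."""
    return chunks_per_index(referenced_bytes) * snapshots


TIB = 1024 ** 4
# Hypothetical: 4 TiB of VM data retained as 1,000 snapshots.
print(chunks_per_index(4 * TIB))             # 1048576
print(touches_without_dedup(4 * TIB, 1000))  # 1048576000
```

If little data changes between snapshots, the number of distinct chunks stays close to the first figure while the number of touches grows linearly with the number of snapshots.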

So, to all the users out there: how long does your garbage collection take? Is anybody else having an "issue" like this?

But I didn't come here without an idea for changing this.

I read (actually in the code) that there have been ideas to do this whole process in memory. But that comes with considerable risk and a large memory footprint for big deployments.

The idea I have been trying out on a replica of my backup server is to deduplicate the touch requests.

There is no benefit in touching a chunk twice within one garbage collection run. The advantage is that this check happens inside PBS, without involving the OS. That can make the process orders of magnitude faster, especially on deployments without enterprise SSDs. But even on the NVMe SSD I tried it with, the internal lookup seems to be quicker than the touch.
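
A minimal sketch of the deduplicated mark phase, assuming index files are iterables of raw 32-byte SHA-256 digests. The name `mark_phase_dedup` is hypothetical, and the actual touch is injected as a callback so the logic can be tested without a chunk store; in a real setup it would be something like an `os.utime` on the chunk's path.

```python
import hashlib
from typing import Callable, Iterable


def mark_phase_dedup(
    index_files: Iterable[Iterable[bytes]],
    touch: Callable[[bytes], None],
) -> int:
    """Touch every referenced chunk at most once per GC run.

    Returns the number of touch calls actually issued.
    """
    seen: set[bytes] = set()
    for index in index_files:
        for digest in index:
            if digest in seen:
                continue  # in-memory lookup instead of another syscall
            seen.add(digest)
            touch(digest)
    return len(seen)


# Usage with hypothetical data: three snapshots sharing most chunks.
chunks = [hashlib.sha256(bytes([i])).digest() for i in range(4)]
snapshots = [chunks[:3], chunks[:2] + [chunks[3]], chunks[:1]]
touched: list[bytes] = []
print(mark_phase_dedup(snapshots, touched.append))  # 4 (instead of 7 references)
```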

But that means a list of chunks has to be kept in memory during garbage collection. In numbers: 32 MB per 1 million chunks, and 1 million chunks corresponds to 4 TB of VM data with default settings. It makes sense to truncate this list once it reaches a certain limit; that doesn't undermine the basic functionality, it just slows things down a bit. That seems a fair trade-off if you have a single VM referencing 100 TB+...
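
The truncation idea can be sketched as a capped variant of the dedup set, again assuming 32-byte SHA-256 digests and an injected touch callback; `mark_phase_capped` and `max_entries` are hypothetical names. Once the cap is reached, new digests are no longer remembered and are simply touched every time they reappear, which keeps GC correct (touching twice is harmless) and only gives up part of the speedup. Note that the 32 MB figure counts raw digest bytes; a Python `set` has far more overhead per entry, so a real implementation would need a compact structure to get close to it.

```python
from typing import Callable, Iterable

DIGEST_SIZE = 32  # bytes per SHA-256 chunk digest


def raw_digest_bytes(chunk_count: int) -> int:
    """Raw size of the dedup list, ignoring data-structure overhead."""
    return chunk_count * DIGEST_SIZE


def mark_phase_capped(
    index_files: Iterable[Iterable[bytes]],
    touch: Callable[[bytes], None],
    max_entries: int,
) -> int:
    """Deduplicate touches, but stop remembering digests after max_entries.

    Digests that do not fit are touched again whenever they reappear.
    Returns the number of touch calls issued.
    """
    seen: set[bytes] = set()
    calls = 0
    for index in index_files:
        for digest in index:
            if digest in seen:
                continue
            touch(digest)
            calls += 1
            if len(seen) < max_entries:
                seen.add(digest)
    return calls


print(raw_digest_bytes(1_000_000))  # 32000000 bytes, i.e. 32 MB
```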

For the devs: what about the memory footprint? The Proxmox guides mention 1 GB of RAM per 1 TB of storage, which is orders of magnitude more.
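
Worked out from the figures above (32-byte digests, 4 MiB chunks), the raw chunk list needs roughly two orders of magnitude less memory per TB than the 1 GB per TB guideline:

$$
10^6 \times 32\,\text{B} = 32\,\text{MB}, \qquad 10^6 \times 4\,\text{MiB} \approx 4\,\text{TB}
$$

$$
\frac{32\,\text{MB}}{4\,\text{TB}} \approx 8\,\text{MB per TB}, \qquad \frac{1\,\text{GB per TB}}{8\,\text{MB per TB}} \approx 125
$$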

Do you think such a solution has a chance?

BR

Florian
