Hi,

so I recently got into Packer and created some VM templates with it, which all worked fine. During testing, I only used linked clones, so there were no issues. However, now that I'm done creating the templates, I tried creating a working VM using a full clone of the template, and it crashes my pfSense VM.
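For anyone who wants to reproduce this from the shell: as far as I understand it, the two clone modes map onto `qm clone` roughly as sketched below. This is only a sketch under assumptions. `build_clone_cmd` is a made-up helper that prints the command instead of running it, and the new VM IDs (201, 202) and names are placeholders; only the template ID 902 comes from the configs in this post.

```shell
#!/bin/sh
# Hypothetical helper (not part of Proxmox): print, but do not run,
# the qm clone command for a linked or a full clone of a template.
build_clone_cmd() {
    mode="$1"      # "linked" or "full"
    template="$2"  # VM ID of the template, e.g. 902
    newid="$3"     # VM ID for the new VM (placeholder values below)
    name="$4"      # name for the new VM (placeholder values below)
    case "$mode" in
        # For templates, qm clone creates a linked clone unless --full is set.
        linked) echo "qm clone $template $newid --name $name" ;;
        # --full 1 requests a complete copy of all disks.
        full)   echo "qm clone $template $newid --name $name --full 1" ;;
        *)      echo "unknown mode: $mode" >&2; return 1 ;;
    esac
}

build_clone_cmd linked 902 201 kali-linked   # prints: qm clone 902 201 --name kali-linked
build_clone_cmd full 902 202 kali-full       # prints: qm clone 902 202 --name kali-full --full 1
```

Only the second form, the full clone, is the case that triggers the problem described here.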

I set up qemu-guest-agent on pfSense, fully updated Proxmox and tried the solution from here, but with no success.

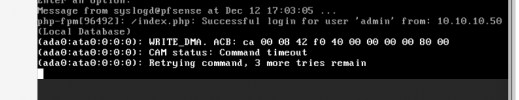

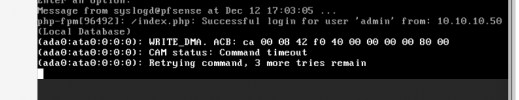

Here is the error from syslog:

Code:

Dec 14 11:37:40 proxmox pvestatd[1837]: VM 100 qmp command failed - VM 100 qmp command 'query-proxmox-support' failed - got timeout

Dec 14 11:37:41 proxmox pvestatd[1837]: status update time (8.312 seconds)

Dec 14 11:37:50 proxmox pvestatd[1837]: VM 100 qmp command failed - VM 100 qmp command 'query-proxmox-support' failed - unable to connect to VM 100 qmp socket - timeout after 51 retries

Dec 14 11:37:50 proxmox pvestatd[1837]: status update time (8.337 seconds)

The console of the pfSense VM shows the following message:

Some time after that, networking stops working and I have to force-stop and restart the VM.
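To get a feel for how often pvestatd loses contact with the VM while a clone is running, the syslog lines above can be counted per VM. A minimal sketch, assuming the log format shown in the excerpt; `count_qmp_failures` is a made-up helper name, and reading the journal with `journalctl` is an assumption about the host setup.

```shell
#!/bin/sh
# Hypothetical helper (not part of Proxmox): count pvestatd QMP failure
# lines for one VM ID in log text read from stdin.
count_qmp_failures() {
    vmid="$1"
    # Matches lines such as:
    #   ... pvestatd[1837]: VM 100 qmp command failed - ...
    # grep -c prints the number of matching lines; "|| true" keeps a
    # count of zero from being reported as an error exit status.
    grep -c "pvestatd\[[0-9]*]: VM ${vmid} qmp command failed" || true
}

# Example call (assumes journalctl is available on the host):
#   journalctl --since "1 hour ago" | count_qmp_failures 100
```

The "status update time" lines are deliberately not counted, since they do not name a VM.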

Here is the config of the pfSense VM:

Code:

agent: 1

boot: order=ide0;ide2

cores: 2

cpu: host

hostpci0: 0000:85:00

hostpci1: 0000:87:00

hostpci2: 0000:82:00

ide0: local-lvm:vm-100-disk-0,discard=on,size=32G

ide2: local:iso/pfSense-CE-2.6.0-RELEASE-amd64.iso,media=cdrom,size=749476K

memory: 4096

meta: creation-qemu=7.0.0,ctime=1669568663

name: pfsense

net0: virtio=BE:11:48:DE:2B:E5,bridge=vmbr1

numa: 0

onboot: 1

ostype: other

scsihw: virtio-scsi-pci

smbios1: uuid=f596268e-f078-46ae-9444-8a68f04b36f0

sockets: 2

startup: order=0

vga: qxl

vmgenid: 8bb8c2c4-a775-411c-9db0-028a06f90f8d

The config of the template I am trying to clone (it's a Kali Linux VM, but the same thing happened with Windows Server):

Code:

root@proxmox:~# qm config 902

agent: 1

boot: c

cores: 2

cpu: kvm64

description: Kali 2022.4

ide0: local-lvm:vm-902-cloudinit,media=cdrom

ide2: none,media=cdrom

kvm: 1

memory: 8192

meta: creation-qemu=7.1.0,ctime=1670852399

name: kali-2022.4

net0: virtio=6E:1D:09:4D:8A:60,bridge=vmbr1,firewall=0,tag=20

numa: 0

onboot: 0

ostype: l26

scsi0: local-lvm:base-902-disk-0,cache=writeback,iothread=0,size=64G

scsihw: virtio-scsi-pci

smbios1: uuid=56d57d62-3def-4875-a9d8-ee6b48aeaa2f

sockets: 2

tablet: 0

template: 1

vga: type=std,memory=256

vmgenid: a6804438-50a5-4e09-8137-d31532d4f1de

Output of pveversion -v:

Code:

root@proxmox:~# pveversion -v

proxmox-ve: 7.3-1 (running kernel: 5.15.74-1-pve)

pve-manager: 7.3-3 (running version: 7.3-3/c3928077)

pve-kernel-5.15: 7.2-14

pve-kernel-helper: 7.2-14

pve-kernel-5.15.74-1-pve: 5.15.74-1

pve-kernel-5.15.64-1-pve: 5.15.64-1

pve-kernel-5.15.30-2-pve: 5.15.30-3

ceph-fuse: 15.2.16-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.2

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.2-5

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.3-1

libpve-guest-common-perl: 4.2-3

libpve-http-server-perl: 4.1-5

libpve-storage-perl: 7.3-1

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.0-3

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

openvswitch-switch: 2.15.0+ds1-2+deb11u1

proxmox-backup-client: 2.3.1-1

proxmox-backup-file-restore: 2.3.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-offline-mirror-helper: 0.5.0-1

proxmox-widget-toolkit: 3.5.3

pve-cluster: 7.3-1

pve-container: 4.4-2

pve-docs: 7.3-1

pve-edk2-firmware: 3.20220526-1

pve-firewall: 4.2-7

pve-firmware: 3.5-6

pve-ha-manager: 3.5.1

pve-i18n: 2.8-1

pve-qemu-kvm: 7.1.0-4

pve-xtermjs: 4.16.0-1

qemu-server: 7.3-1

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+2

vncterm: 1.7-1

zfsutils-linux: 2.1.6-pve1

If you need to look at the Packer templates, you can find them here.

I hope you can help me fix this. I know I could just use linked clones, but that seems like a bad workaround to me, and I don't want to set up my VMs again if I change the template.
