Hello,

I'm a beginner with Proxmox, so maybe I'm doing something wrong or have misunderstood something.

I have a problem with two Fujitsu RX300 S7 nodes, each connected by two Fibre Channel links (Emulex LightPulse HBAs) to an Eternus DX90 S2 storage array.

I want to build an HA cluster. Proxmox is also installed on another machine, a Dell R620, which is meant to act as the master for the two Fujitsu nodes.

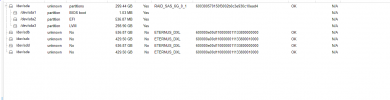

In Proxmox on the two Fujitsu nodes I can see /dev/sdb, /dev/sdc, /dev/sdd and /dev/sde, which correspond to the Fibre Channel connections to the nodes, but I can't add LVM in the Proxmox web interface.

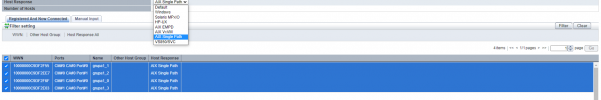
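Since two LUNs reached over two Fibre Channel links would plausibly show up as four block devices, one way to check this is to group the devices by their WWID: devices that share a WWID are paths to the same LUN. The following is only a minimal sketch. It assumes the kernel exposes the identifier at `/sys/block/<dev>/device/wwid`, and the helper names `group_by_wwid` and `read_wwids` are made up for illustration.

```python
import glob
import os
from collections import defaultdict


def group_by_wwid(wwids):
    """Group device names by WWID.

    Devices that share a WWID are different paths to the same LUN.
    """
    groups = defaultdict(list)
    for dev, wwid in sorted(wwids.items()):
        groups[wwid].append(dev)
    return dict(groups)


def read_wwids(sys_block="/sys/block"):
    """Read the WWID of every sd* device from sysfs.

    Assumption: the identifier is available at <sys_block>/<dev>/device/wwid.
    Devices without a readable wwid file are skipped.
    """
    result = {}
    pattern = os.path.join(sys_block, "sd*", "device", "wwid")
    for path in glob.glob(pattern):
        dev = path.split(os.sep)[-3]  # .../sdb/device/wwid -> "sdb"
        try:
            with open(path) as fh:
                result[dev] = fh.read().strip()
        except OSError:
            continue
    return result


if __name__ == "__main__":
    for wwid, devs in group_by_wwid(read_wwids()).items():
        print(wwid, "->", ", ".join(devs))
```

If the four devices collapse into two groups, the usual approach would be to configure multipath (multipath-tools) and create the LVM volume group on the resulting multipath device rather than on a single /dev/sdX path. Whether that applies here depends on the actual output.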

I have created two LUNs on the Eternus DX90 S2, and I don't know which Host Response I need to use (see screenshot).
