Hi,

I tried yet again to reinstall PMX 8.1.4 and Ceph 18.4 and got identical results.

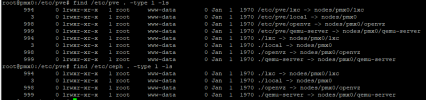

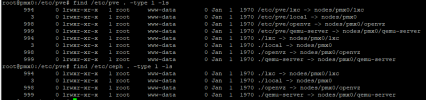

The issues I see are shown in the screenshots below. It also seems that the symlinks are missing and cannot be created.

This seems to be a very buggy release, and I can't find a way forward.

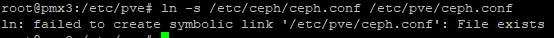

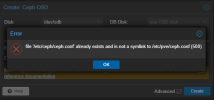

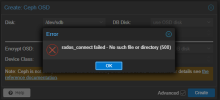

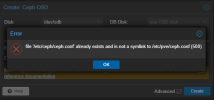

file '/etc/ceph/ceph.conf' already exists and is not a symlink to /etc/pve/ceph.conf (500)

It is not possible to add a new symlink (tested on the 3rd host); the issue is identical across all hosts.
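To see what actually sits at the path named in the error, a small check like the following may help. This is only a minimal sketch in Python: the function name `describe_conf_link` and its return strings are made up for illustration and are not part of Proxmox or Ceph; the two default paths are taken from the error message above.

```python
import os


def describe_conf_link(link_path="/etc/ceph/ceph.conf",
                       target_path="/etc/pve/ceph.conf"):
    """Report what exists at link_path (hypothetical helper, not a PVE tool)."""
    # lexists() is True even for a dangling symlink, unlike exists().
    if not os.path.lexists(link_path):
        return "missing"
    if not os.path.islink(link_path):
        # The case the error message complains about.
        return "not-a-symlink"
    actual = os.readlink(link_path)
    # Resolve a relative link target against the link's own directory.
    resolved = os.path.normpath(
        os.path.join(os.path.dirname(link_path), actual))
    if resolved == os.path.normpath(target_path):
        return "ok"
    return "wrong-target"


if __name__ == "__main__":
    print(describe_conf_link())
```

Running it on each host would show whether `/etc/ceph/ceph.conf` is a plain file, a symlink pointing somewhere else, or absent. It only reads the filesystem and changes nothing.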

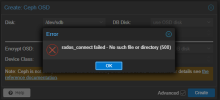

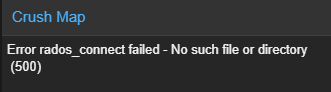

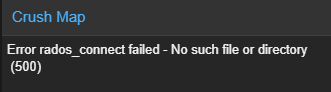

rados_connect failed - No such file or directory (500)

file '/etc/ceph/ceph.conf' already exists and is not a symlink to /etc/pve/ceph.conf (500)

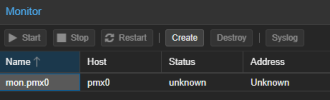

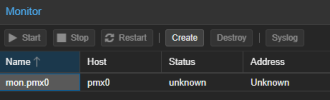

The monitor cannot start.
