as far as PBS is concerned, a datastore is just a directory. I can't tell what state your pool and/or dataset was in without more data (e.g., how did you check the fullness? note that the "free" property of "zpool list" and the available property of "zfs list" are two very different things).

[SOLVED] Force delete of "Pending removals"

- Thread starter lazynooblet


Hi @fabian, thanks for your reply.

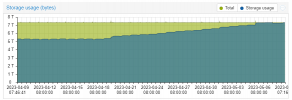

Sadly, the PBS datastore is full again today. After searching for a solution for several days, I was able to get about 50 GB free by deleting some VMs and the benchmark file, and then ran garbage collection.

Two days ago, yesterday and today, GC was supposed to delete around 1 TB.

It never happened, and the unit is full again...

It appears that since April 20 or so, chunks have not been removed at all.

Is there a way to force-remove the pending removals manually?

2023-05-08T03:27:02-04:00: Pending removals: 950.55 GiB (in 281186 chunks)

2023-05-08T03:27:02-04:00: Original data usage: 576.272 TiB

2023-05-08T03:27:02-04:00: On-Disk usage: 5.62 TiB (0.98%)

2023-05-08T03:27:02-04:00: On-Disk chunks: 2774558

2023-05-08T03:27:02-04:00: Deduplication factor: 102.53

2023-05-08T03:27:02-04:00: Average chunk size: 2.124 MiB

2023-05-08T03:27:02-04:00: TASK OK

Attachments

2023-05-08T03:05:00-04:00: starting garbage collection on store BK_DB1

2023-05-08T03:05:00-04:00: task triggered by schedule '03:05'

2023-05-08T03:05:00-04:00: Start GC phase1 (mark used chunks)

2023-05-08T03:05:02-04:00: marked 1% (25 of 2457 index files)

2023-05-08T03:05:22-04:00: marked 2% (50 of 2457 index files)

2023-05-08T03:05:41-04:00: marked 3% (74 of 2457 index files)

2023-05-08T03:06:13-04:00: marked 4% (99 of 2457 index files)

2023-05-08T03:07:09-04:00: marked 5% (123 of 2457 index files)

2023-05-08T03:07:33-04:00: marked 6% (148 of 2457 index files)

2023-05-08T03:07:45-04:00: marked 7% (172 of 2457 index files)

2023-05-08T03:07:50-04:00: marked 8% (197 of 2457 index files)

2023-05-08T03:07:58-04:00: marked 9% (222 of 2457 index files)

2023-05-08T03:08:08-04:00: marked 10% (246 of 2457 index files)

2023-05-08T03:08:12-04:00: marked 11% (271 of 2457 index files)

2023-05-08T03:08:21-04:00: marked 12% (295 of 2457 index files)

2023-05-08T03:08:26-04:00: marked 13% (320 of 2457 index files)

2023-05-08T03:08:31-04:00: marked 14% (344 of 2457 index files)

2023-05-08T03:08:40-04:00: marked 15% (369 of 2457 index files)

2023-05-08T03:08:45-04:00: marked 16% (394 of 2457 index files)

2023-05-08T03:08:48-04:00: marked 17% (418 of 2457 index files)

2023-05-08T03:08:57-04:00: marked 18% (443 of 2457 index files)

2023-05-08T03:09:09-04:00: marked 19% (467 of 2457 index files)

2023-05-08T03:09:14-04:00: marked 20% (492 of 2457 index files)

2023-05-08T03:09:26-04:00: marked 21% (516 of 2457 index files)

2023-05-08T03:09:39-04:00: marked 22% (541 of 2457 index files)

2023-05-08T03:09:45-04:00: marked 23% (566 of 2457 index files)

2023-05-08T03:09:48-04:00: marked 24% (590 of 2457 index files)

2023-05-08T03:09:51-04:00: marked 25% (615 of 2457 index files)

2023-05-08T03:10:02-04:00: marked 26% (639 of 2457 index files)

2023-05-08T03:10:35-04:00: marked 27% (664 of 2457 index files)

2023-05-08T03:10:37-04:00: marked 28% (688 of 2457 index files)

2023-05-08T03:10:38-04:00: marked 29% (713 of 2457 index files)

2023-05-08T03:10:41-04:00: marked 30% (738 of 2457 index files)

2023-05-08T03:10:44-04:00: marked 31% (762 of 2457 index files)

2023-05-08T03:10:47-04:00: marked 32% (787 of 2457 index files)

2023-05-08T03:10:52-04:00: marked 33% (811 of 2457 index files)

2023-05-08T03:10:57-04:00: marked 34% (836 of 2457 index files)

2023-05-08T03:11:00-04:00: marked 35% (860 of 2457 index files)

2023-05-08T03:11:02-04:00: marked 36% (885 of 2457 index files)

2023-05-08T03:11:25-04:00: marked 37% (910 of 2457 index files)

2023-05-08T03:12:03-04:00: marked 38% (934 of 2457 index files)

2023-05-08T03:12:05-04:00: marked 39% (959 of 2457 index files)

2023-05-08T03:12:10-04:00: marked 40% (983 of 2457 index files)

2023-05-08T03:12:13-04:00: marked 41% (1008 of 2457 index files)

2023-05-08T03:12:21-04:00: marked 42% (1032 of 2457 index files)

2023-05-08T03:12:30-04:00: marked 43% (1057 of 2457 index files)

2023-05-08T03:12:35-04:00: marked 44% (1082 of 2457 index files)

2023-05-08T03:12:40-04:00: marked 45% (1106 of 2457 index files)

2023-05-08T03:12:42-04:00: marked 46% (1131 of 2457 index files)

2023-05-08T03:12:44-04:00: marked 47% (1155 of 2457 index files)

2023-05-08T03:12:49-04:00: marked 48% (1180 of 2457 index files)

2023-05-08T03:12:57-04:00: marked 49% (1204 of 2457 index files)

2023-05-08T03:13:06-04:00: marked 50% (1229 of 2457 index files)

2023-05-08T03:13:09-04:00: marked 51% (1254 of 2457 index files)

2023-05-08T03:13:14-04:00: marked 52% (1278 of 2457 index files)

2023-05-08T03:13:18-04:00: marked 53% (1303 of 2457 index files)

2023-05-08T03:13:23-04:00: marked 54% (1327 of 2457 index files)

2023-05-08T03:13:27-04:00: marked 55% (1352 of 2457 index files)

2023-05-08T03:13:30-04:00: marked 56% (1376 of 2457 index files)

2023-05-08T03:13:34-04:00: marked 57% (1401 of 2457 index files)

2023-05-08T03:13:37-04:00: marked 58% (1426 of 2457 index files)

2023-05-08T03:13:42-04:00: marked 59% (1450 of 2457 index files)

2023-05-08T03:14:06-04:00: marked 60% (1475 of 2457 index files)

2023-05-08T03:14:18-04:00: marked 61% (1499 of 2457 index files)

2023-05-08T03:14:26-04:00: marked 62% (1524 of 2457 index files)

2023-05-08T03:14:35-04:00: marked 63% (1548 of 2457 index files)

2023-05-08T03:14:48-04:00: marked 64% (1573 of 2457 index files)

2023-05-08T03:16:29-04:00: marked 65% (1598 of 2457 index files)

2023-05-08T03:18:05-04:00: marked 66% (1622 of 2457 index files)

2023-05-08T03:18:38-04:00: marked 67% (1647 of 2457 index files)

2023-05-08T03:18:40-04:00: marked 68% (1671 of 2457 index files)

2023-05-08T03:18:42-04:00: marked 69% (1696 of 2457 index files)

2023-05-08T03:18:42-04:00: marked 70% (1720 of 2457 index files)

2023-05-08T03:18:42-04:00: marked 71% (1745 of 2457 index files)

2023-05-08T03:18:53-04:00: marked 72% (1770 of 2457 index files)

2023-05-08T03:19:13-04:00: marked 73% (1794 of 2457 index files)

2023-05-08T03:19:56-04:00: marked 74% (1819 of 2457 index files)

2023-05-08T03:19:56-04:00: marked 75% (1843 of 2457 index files)

2023-05-08T03:19:56-04:00: marked 76% (1868 of 2457 index files)

2023-05-08T03:20:07-04:00: marked 77% (1892 of 2457 index files)

2023-05-08T03:20:24-04:00: marked 78% (1917 of 2457 index files)

2023-05-08T03:20:27-04:00: marked 79% (1942 of 2457 index files)

2023-05-08T03:20:30-04:00: marked 80% (1966 of 2457 index files)

2023-05-08T03:20:30-04:00: marked 81% (1991 of 2457 index files)

2023-05-08T03:20:31-04:00: marked 82% (2015 of 2457 index files)

2023-05-08T03:21:18-04:00: marked 83% (2040 of 2457 index files)

2023-05-08T03:21:58-04:00: marked 84% (2064 of 2457 index files)

2023-05-08T03:22:04-04:00: marked 85% (2089 of 2457 index files)

2023-05-08T03:22:06-04:00: marked 86% (2114 of 2457 index files)

2023-05-08T03:22:11-04:00: marked 87% (2138 of 2457 index files)

2023-05-08T03:23:01-04:00: marked 88% (2163 of 2457 index files)

2023-05-08T03:23:14-04:00: marked 89% (2187 of 2457 index files)

2023-05-08T03:23:30-04:00: marked 90% (2212 of 2457 index files)

2023-05-08T03:23:54-04:00: marked 91% (2236 of 2457 index files)

2023-05-08T03:24:20-04:00: marked 92% (2261 of 2457 index files)

2023-05-08T03:24:22-04:00: marked 93% (2286 of 2457 index files)

2023-05-08T03:24:22-04:00: marked 94% (2310 of 2457 index files)

2023-05-08T03:24:26-04:00: marked 95% (2335 of 2457 index files)

2023-05-08T03:24:26-04:00: marked 96% (2359 of 2457 index files)

2023-05-08T03:24:49-04:00: marked 97% (2384 of 2457 index files)

2023-05-08T03:25:30-04:00: marked 98% (2408 of 2457 index files)

2023-05-08T03:25:39-04:00: marked 99% (2433 of 2457 index files)

2023-05-08T03:26:06-04:00: marked 100% (2457 of 2457 index files)

2023-05-08T03:26:06-04:00: found (and marked) 2457 index files outside of expected directory scheme

2023-05-08T03:26:06-04:00: Start GC phase2 (sweep unused chunks)

2023-05-08T03:26:07-04:00: processed 1% (30881 chunks)

2023-05-08T03:26:07-04:00: processed 2% (61154 chunks)

2023-05-08T03:26:08-04:00: processed 3% (91799 chunks)

2023-05-08T03:26:08-04:00: processed 4% (122290 chunks)

2023-05-08T03:26:09-04:00: processed 5% (152896 chunks)

2023-05-08T03:26:09-04:00: processed 6% (183440 chunks)

2023-05-08T03:26:10-04:00: processed 7% (213872 chunks)

2023-05-08T03:26:10-04:00: processed 8% (244458 chunks)

2023-05-08T03:26:11-04:00: processed 9% (275172 chunks)

2023-05-08T03:26:12-04:00: processed 10% (305535 chunks)

2023-05-08T03:26:12-04:00: processed 11% (335817 chunks)

2023-05-08T03:26:13-04:00: processed 12% (366525 chunks)

2023-05-08T03:26:13-04:00: processed 13% (396891 chunks)

2023-05-08T03:26:14-04:00: processed 14% (427330 chunks)

2023-05-08T03:26:14-04:00: processed 15% (457951 chunks)

2023-05-08T03:26:15-04:00: processed 16% (488495 chunks)

2023-05-08T03:26:15-04:00: processed 17% (518842 chunks)

2023-05-08T03:26:16-04:00: processed 18% (549673 chunks)

2023-05-08T03:26:16-04:00: processed 19% (580168 chunks)

2023-05-08T03:26:17-04:00: processed 20% (610628 chunks)

2023-05-08T03:26:18-04:00: processed 21% (640875 chunks)

2023-05-08T03:26:18-04:00: processed 22% (671207 chunks)

2023-05-08T03:26:19-04:00: processed 23% (701823 chunks)

2023-05-08T03:26:19-04:00: processed 24% (732353 chunks)

2023-05-08T03:26:20-04:00: processed 25% (763051 chunks)

2023-05-08T03:26:20-04:00: processed 26% (793630 chunks)

2023-05-08T03:26:21-04:00: processed 27% (824526 chunks)

2023-05-08T03:26:21-04:00: processed 28% (854859 chunks)

2023-05-08T03:26:22-04:00: processed 29% (885393 chunks)

2023-05-08T03:26:22-04:00: processed 30% (916120 chunks)

2023-05-08T03:26:23-04:00: processed 31% (947020 chunks)

2023-05-08T03:26:24-04:00: processed 32% (977516 chunks)

2023-05-08T03:26:24-04:00: processed 33% (1008235 chunks)

2023-05-08T03:26:25-04:00: processed 34% (1038546 chunks)

2023-05-08T03:26:25-04:00: processed 35% (1068816 chunks)

2023-05-08T03:26:26-04:00: processed 36% (1099252 chunks)

2023-05-08T03:26:26-04:00: processed 37% (1129811 chunks)

2023-05-08T03:26:27-04:00: processed 38% (1160406 chunks)

2023-05-08T03:26:27-04:00: processed 39% (1191096 chunks)

2023-05-08T03:26:28-04:00: processed 40% (1221456 chunks)

2023-05-08T03:26:29-04:00: processed 41% (1251719 chunks)

2023-05-08T03:26:29-04:00: processed 42% (1282224 chunks)

2023-05-08T03:26:30-04:00: processed 43% (1312750 chunks)

2023-05-08T03:26:30-04:00: processed 44% (1343156 chunks)

2023-05-08T03:26:31-04:00: processed 45% (1373741 chunks)

2023-05-08T03:26:31-04:00: processed 46% (1404469 chunks)

2023-05-08T03:26:32-04:00: processed 47% (1434802 chunks)

2023-05-08T03:26:33-04:00: processed 48% (1465687 chunks)

2023-05-08T03:26:33-04:00: processed 49% (1496068 chunks)

2023-05-08T03:26:34-04:00: processed 50% (1526787 chunks)

2023-05-08T03:26:34-04:00: processed 51% (1557703 chunks)

2023-05-08T03:26:35-04:00: processed 52% (1588142 chunks)

2023-05-08T03:26:35-04:00: processed 53% (1618792 chunks)

2023-05-08T03:26:36-04:00: processed 54% (1649363 chunks)

2023-05-08T03:26:37-04:00: processed 55% (1680019 chunks)

2023-05-08T03:26:37-04:00: processed 56% (1710612 chunks)

2023-05-08T03:26:38-04:00: processed 57% (1741374 chunks)

2023-05-08T03:26:38-04:00: processed 58% (1772157 chunks)

2023-05-08T03:26:39-04:00: processed 59% (1802839 chunks)

2023-05-08T03:26:39-04:00: processed 60% (1833461 chunks)

2023-05-08T03:26:40-04:00: processed 61% (1864154 chunks)

2023-05-08T03:26:40-04:00: processed 62% (1894469 chunks)

2023-05-08T03:26:41-04:00: processed 63% (1925056 chunks)

2023-05-08T03:26:42-04:00: processed 64% (1955290 chunks)

2023-05-08T03:26:43-04:00: processed 65% (1985727 chunks)

2023-05-08T03:26:43-04:00: processed 66% (2016288 chunks)

2023-05-08T03:26:44-04:00: processed 67% (2046765 chunks)

2023-05-08T03:26:44-04:00: processed 68% (2077496 chunks)

2023-05-08T03:26:45-04:00: processed 69% (2107903 chunks)

2023-05-08T03:26:45-04:00: processed 70% (2138235 chunks)

2023-05-08T03:26:46-04:00: processed 71% (2168898 chunks)

2023-05-08T03:26:46-04:00: processed 72% (2199631 chunks)

2023-05-08T03:26:47-04:00: processed 73% (2230105 chunks)

2023-05-08T03:26:48-04:00: processed 74% (2260642 chunks)

2023-05-08T03:26:48-04:00: processed 75% (2291536 chunks)

2023-05-08T03:26:49-04:00: processed 76% (2322270 chunks)

2023-05-08T03:26:49-04:00: processed 77% (2353050 chunks)

2023-05-08T03:26:50-04:00: processed 78% (2383572 chunks)

2023-05-08T03:26:50-04:00: processed 79% (2414228 chunks)

2023-05-08T03:26:51-04:00: processed 80% (2445009 chunks)

2023-05-08T03:26:52-04:00: processed 81% (2475444 chunks)

2023-05-08T03:26:52-04:00: processed 82% (2505868 chunks)

2023-05-08T03:26:53-04:00: processed 83% (2536521 chunks)

2023-05-08T03:26:53-04:00: processed 84% (2566961 chunks)

2023-05-08T03:26:54-04:00: processed 85% (2597599 chunks)

2023-05-08T03:26:54-04:00: processed 86% (2628213 chunks)

2023-05-08T03:26:55-04:00: processed 87% (2658691 chunks)

2023-05-08T03:26:55-04:00: processed 88% (2688982 chunks)

2023-05-08T03:26:56-04:00: processed 89% (2719655 chunks)

2023-05-08T03:26:57-04:00: processed 90% (2750486 chunks)

2023-05-08T03:26:57-04:00: processed 91% (2781053 chunks)

2023-05-08T03:26:58-04:00: processed 92% (2811553 chunks)

2023-05-08T03:26:58-04:00: processed 93% (2842216 chunks)

2023-05-08T03:26:59-04:00: processed 94% (2872725 chunks)

2023-05-08T03:26:59-04:00: processed 95% (2903158 chunks)

2023-05-08T03:27:00-04:00: processed 96% (2933761 chunks)

2023-05-08T03:27:00-04:00: processed 97% (2964278 chunks)

2023-05-08T03:27:01-04:00: processed 98% (2995058 chunks)

2023-05-08T03:27:01-04:00: processed 99% (3025345 chunks)

2023-05-08T03:27:02-04:00: Removed garbage: 215.885 MiB

2023-05-08T03:27:02-04:00: Removed chunks: 150

2023-05-08T03:27:02-04:00: Pending removals: 950.55 GiB (in 281186 chunks)

2023-05-08T03:27:02-04:00: Original data usage: 576.272 TiB

2023-05-08T03:27:02-04:00: On-Disk usage: 5.62 TiB (0.98%)

2023-05-08T03:27:02-04:00: On-Disk chunks: 2774558

2023-05-08T03:27:02-04:00: Deduplication factor: 102.53

2023-05-08T03:27:02-04:00: Average chunk size: 2.124 MiB

2023-05-08T03:27:02-04:00: TASK OK
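As a rough cross-check of the summary above, the figures can be recomputed from the rounded values in the log. This is only a sketch using awk on numbers copied from the log; the variable names are made up for illustration.

```shell
#!/bin/sh
# Values copied from the GC summary in the log above (already rounded by PBS).
pending_gib=950.55
on_disk_tib=5.62
on_disk_chunks=2774558

# Share of on-disk usage that is still "pending removal" (1 TiB = 1024 GiB).
pending_pct=$(awk -v p="$pending_gib" -v d="$on_disk_tib" \
    'BEGIN { printf "%.1f", 100 * p / (d * 1024) }')

# Average chunk size in MiB (1 TiB = 1024 * 1024 MiB).
avg_chunk_mib=$(awk -v d="$on_disk_tib" -v c="$on_disk_chunks" \
    'BEGIN { printf "%.3f", d * 1024 * 1024 / c }')

echo "pending share of on-disk usage: ${pending_pct}%"
echo "average chunk size: ${avg_chunk_mib} MiB"
```

By this estimate roughly a sixth of what is on disk is waiting for the cutoff to pass, while this run removed only 150 chunks.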

The backups only run for a few minutes,

from 1 to, let's say, 10 minutes.

The last backup was at 1 am and failed. The GC ran at 3 am.

On the previous days the GC ran at 20:00 (I changed it to run after the backups as a test),

but it seems that didn't change anything. Everything has been smooth for months; I don't know why it's doing this now.

So we are stuck again, with nothing we can do to fix the issue except expand the array. I hope you can find a workaround for us until then.

If you have an idea how to get access to the 264G free on the ZFS pool, it might help us to at least read the backups.

But I have not found the right command to expand it from 13.7 to 14T (it's a RAIDZ2).

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

BK_DB1 14.0T 13.7T 264G - - 59% 98% 1.00x ONLINE -

like I said, zpool free is not available space for writing, it's raw disk capacity that is not yet used (there is a certain amount of reserved-for-zfs-itself space on the pool level, there is raidz overhead, etc.pp.). when you want to know "how much more can I write", you need to look at "zfs list -o space" (the available column in particular).
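Since a datastore is just a directory as far as PBS is concerned, one hedged way to see how much can actually be written is to ask the filesystem for the available space at the datastore path. The sketch below uses POSIX `df`; the function name `avail_kib` and the `DATASTORE_PATH` variable are assumptions, not part of PBS or ZFS.

```shell
#!/bin/sh
# Print the KiB still available for writing at a given path.
# This reflects what the filesystem will accept, unlike the FREE column
# of "zpool list", which is raw pool capacity that is not yet allocated.
avail_kib() {
    df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# Hypothetical datastore path; "/" is only a fallback so the sketch runs anywhere.
datastore_path="${DATASTORE_PATH:-/}"
echo "available at ${datastore_path}: $(avail_kib "$datastore_path") KiB"

# On a ZFS-backed datastore, the per-dataset view mentioned above comes from:
#   zfs list -o space BK_DB1
```

If this reports 0 while `zpool list` still shows free space, the remaining raw capacity is not usable for new writes.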

I can imagine two possible causes for "stuck" pending removals:

1. there is some sort of stuck PBS task that makes the GC use a cutoff too far in the past. should be cleared by doing a real restart of the PBS services (not the normal "reload"):

systemctl restart proxmox-backup proxmox-backup-proxy

WARNING: this will kill all currently running tasks!

2. there was some issue in the past (time jumps caused by broken NTP/clock, ...) that caused currently unreferenced chunks to have an atime/mtime in the future… they will always be treated as non-removable (since their timestamp is after the cutoff) and you need to manually reset their timestamps. you can use find with appropriate parameters to both find such chunks and reset their timestamps to "now".

Thanks for the advice.

What can we actually do to recover from this? Our support plan only allows us to chat here with Proxmox support. We don't have the knowledge to fix this situation...

A workaround or the commands to issue would be appreciated.

To be honest, you should prevent this from happening. In VMware / Hyper-V / Nutanix, you don't hit such a wall without a quick way out: deleting 1 or 2 backups fixes a full store right away.

As it stands, there is no warning anywhere that PBS can end up in a dead end...

@fabian quick update: we are still stuck.

Here is the latest update: we went to the DC to add a 3.84 TB drive to the full RAIDZ2.

We googled every possible answer about this issue; nothing allowed us to add the new drive to expand the pool.

# zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

BK_DB1 14.0T 13.7T 264G - - 59% 98% 1.00x ONLINE -

# zpool status

pool: BK_DB1

state: ONLINE

scan: scrub repaired 0B in 02:43:37 with 0 errors on Sun Apr 9 03:07:38 2023

config:

NAME STATE READ WRITE CKSUM

BK_DB1 ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

wwn-0x5002538c405320a7 ONLINE 0 0 0

wwn-0x5002538c4053212e ONLINE 0 0 0

wwn-0x5002538c40532152 ONLINE 0 0 0

wwn-0x5002538c40532123 ONLINE 0 0 0

errors: No known data errors

# zpool attach BK_DB1 raidz2-0 wwn-0x5002538c4051a136

cannot attach wwn-0x5002538c4051a136 to raidz2-0: can only attach to mirrors and top-level disks

ZFS doesn't allow you to expand a raidz1/2/3 vdev (yet). What you would normally do is buy 4 more disks, create another raidz2 with them and stripe it with the existing one. Or move all data to another spare pool, destroy the pool, recreate it with 1 more disk and move the data back.
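The first option (striping a second raidz2 vdev into the pool) would go through `zpool add`. The sketch below only builds and prints the command rather than running it; the four device names are placeholders, not disks from this thread. The `-n` flag asks `zpool add` for a dry run that shows the resulting layout without changing the pool.

```shell
#!/bin/sh
# Build (but do not execute) the command that would add a second raidz2 vdev.
pool="BK_DB1"
# Placeholder device IDs: replace with the real /dev/disk/by-id names.
new_disks="wwn-0xAAAA wwn-0xBBBB wwn-0xCCCC wwn-0xDDDD"

# -n requests a dry run: zpool prints the layout without modifying the pool.
cmd="zpool add -n ${pool} raidz2 ${new_disks}"
echo "would run: ${cmd}"
```

A vdev added to a raidz pool this way should be treated as permanent, so the dry-run output is worth reading carefully before dropping `-n`.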

I already gave you two solutions for two possible causes. since you likely cannot do any backups at the moment anyway, as long as there is no sync pulling from this PBS, restart both services and restart GC. if that still shows pending data, run find (see "man find") to find all chunks with timestamps in the future, and reset their timestamp to now, wait 24h, re-run GC.

It appears that it has been available in ZFS since 2022, and I'm running the appropriate command.

Hi Fabian,

I did restart the services. I also upgraded to 2.4.1 and rebooted.

You cannot run a GC on a full Proxmox datastore. Just give it a try: you can't. That is the entire nightmare we are trying to figure out here.

it's not - see https://github.com/openzfs/zfs/pull/12225

I'll circle back to square one.

if the filesystem behind your datastore is 100% full, you do need to do the following:

- prevent new backups from being made (else it will be full again)

- free up or add additional space

- then run GC

if you just run GC, it will fail, since running GC requires write access to the datastore (for locking, for marking chunks, ..). it doesn't need much space, but enough so that write operations don't fail.

the GC will calculate a cutoff, only chunks older than this cutoff and not referenced by any index are considered for removal. any chunks newer than this cutoff and not referenced are counted as "pending". the cutoff is calculated like this: max(24h+5min, age of oldest running task).
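The cutoff rule above can be written out as a small calculation. This is a sketch of the formula as described in this post, not PBS's actual code; the variable names and the three-day task age are made up for illustration.

```shell
#!/bin/sh
# Sketch of the rule described above:
#   cutoff age = max(24h + 5min, age of oldest running task)
min_age=$((24 * 3600 + 5 * 60))      # 24h + 5min, in seconds

# Hypothetical: the oldest still-running task started 3 days ago.
oldest_task_age=$((3 * 24 * 3600))

if [ "$oldest_task_age" -gt "$min_age" ]; then
    cutoff_age=$oldest_task_age
else
    cutoff_age=$min_age
fi

now=$(date +%s)
cutoff=$((now - cutoff_age))

echo "cutoff age: ${cutoff_age}s"
echo "unreferenced chunks with a timestamp after epoch ${cutoff} count as pending"
```

With a task that has been running for three days, no chunk touched within those three days can be removed, which is how a stuck task would keep the pending figure from shrinking.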

like I said, if you run GC multiple times with long enough gaps in between and your pending doesn't go down, there can be two causes:

- there's a worker running across all the GC runs (if you rebooted the node, that seems unlikely)

- the pending chunks have a wrong timestamp that is in the future

the second one is easy to verify, just run find with the appropriate parameters. for example (on a datastore that has no operations running!):

Code:

touch /tmp/reference

find /path/to/datastore/.chunks -type f \( -newermm /tmp/reference -or -neweraa /tmp/reference \)

if there are any chunks with atime or mtime in the future, their path will be printed.

another option would be:

- something messes with your chunk store (e.g., by updating the timestamps every hour so that chunks never expire even if they are not referenced)

if you have any scripts/cron jobs/.. that might touch the chunk store, disable them!
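Building on the check above, resetting future timestamps could look roughly like this. It is a sketch only: it runs against a throwaway directory created with `mktemp` and relies on GNU `touch -d` and GNU `find`. On a real datastore the directory would be the `.chunks` directory, with no operations running. Using `touch -r` to copy the reference file's timestamps is an assumption of this sketch, chosen so that a second check comes back empty.

```shell
#!/bin/sh
# Demo on a throwaway directory; on a real system this would be
# /path/to/datastore/.chunks (and the datastore must be idle).
chunk_dir=$(mktemp -d)
reference=$(mktemp)      # its atime/mtime stand for "now"

# Simulate one normal chunk and one chunk whose timestamps are in the future.
touch -d "yesterday" "$chunk_dir/normal-chunk"
touch -d "tomorrow" "$chunk_dir/future-chunk"

# List files whose mtime or atime is newer than the reference file.
find_future() {
    find "$1" -type f \( -newermm "$reference" -or -neweraa "$reference" \)
}

before=$(find_future "$chunk_dir")
echo "future-dated before reset: $before"

# Reset atime and mtime of those files to the reference file's timestamps.
find "$chunk_dir" -type f \( -newermm "$reference" -or -neweraa "$reference" \) \
    -exec touch -r "$reference" {} +

after=$(find_future "$chunk_dir")
echo "future-dated after reset: ${after:-none}"
```

After such a reset, the advice given in this thread is to wait 24h and then re-run GC.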

I will try to reset the timestamps if I can free up space.

As this store is dedicated to backups only, there are no idle files such as logs that I can delete.

Question: is there any command you can provide that would, let's say, isolate the chunks created in the last 3-4 days and delete all of them?

Even if that breaks the last 1 or 2 backups, it would at least let us write to the datastore again, then reset the timestamps, run the GC, wait 24 hours, and hopefully free enough space to reactivate the backup jobs.

From there, I suppose a verify job would flag the broken last backup, if any.


if you are willing to remove backups to free up space, removing the snapshot metadata of a few snapshots should free up enough space to allow GC - provided you don't attempt to make new backups in parallel. removing random chunks is much more dangerous, and not something I would advise.


Thanks, fabian, for the advice.

Do you mean these files, for example?

../ns/NS1/vm/300/2023-04-11T04:30:03Z/drive-scsi0.img.fidx

../ns/NS1/vm/300/2023-04-11T04:30:03Z/fw.conf.blob

../ns/NS1/vm/300/2023-04-11T04:30:03Z/client.log.blob

../ns/NS1/vm/300/2023-04-11T04:30:03Z/index.json.blob

../ns/NS1/vm/300/2023-04-11T04:30:03Z/qemu-server.conf.blob

They are around 0.1M each.

yes, each "timestamped" directory is the metadata for one backup snapshot. if you remove that dir, that backup no longer (logically) exists (it is exactly what happens when you prune that backup/hit the trash icon in the GUI) and cannot be restored anymore.
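To see which snapshot directories exist and which are oldest, something along these lines could help. It is a sketch that only lists directories and deletes nothing. It builds a fake datastore layout under `mktemp`, modelled on the paths quoted above, and the function name `list_snapshots_oldest_first` is made up. It assumes GNU `find`, and that each snapshot directory contains an `index.json.blob` and is named with a UTC timestamp, as in the listing above.

```shell
#!/bin/sh
# Build a fake datastore layout that mimics the paths shown above.
datastore=$(mktemp -d)
for snap in 2023-04-11T04:30:03Z 2023-04-10T04:30:02Z; do
    mkdir -p "$datastore/ns/NS1/vm/300/$snap"
    : > "$datastore/ns/NS1/vm/300/$snap/index.json.blob"
done
mkdir -p "$datastore/ns/NS1/vm/301/2023-04-09T04:30:05Z" "$datastore/.chunks"
: > "$datastore/ns/NS1/vm/301/2023-04-09T04:30:05Z/index.json.blob"

# Print every snapshot directory, oldest timestamp first.
# .chunks is pruned so the (potentially huge) chunk store is never walked.
list_snapshots_oldest_first() {
    find "$1" -path "$1/.chunks" -prune -o \
        -type f -name index.json.blob -printf '%h\n' |
        awk -F/ '{ print $NF "\t" $0 }' | sort | cut -f2-
}

list_snapshots_oldest_first "$datastore"
```

Per the reply above, removing one of the listed directories is the same as pruning that backup, so it cannot be restored afterwards; the space held by its chunks is only released by a later GC run.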

it's not - see https://github.com/openzfs/zfs/pull/12225

In multiple places online we now see it listed as available.

https://freebsdfoundation.org/blog/raid-z-expansion-feature-for-zfs/

"The feature was developed by Matthew Ahrens and is now completed but not yet integrated."

taken straight from the linked article..

Does that mean the feature is not part of the OpenZFS version shipped in the current Proxmox releases?

As @Dunuin mentioned, if we cannot attach a new disk, our only workaround would be to add another 4 enterprise SSDs, which we don't have on hand.

like I said, it's not available (yet). OpenZFS is the upstream for all open ZFS implementations - BSD, Solaris, Linux.

Also @fabian, I deleted multiple snapshots, but as they are on the ZFS pool, I don't think they were actually deleted... The usable space remains 0B in Proxmox, so anything I delete seems to stay on the ZFS array, even though it shows 273 GB free, as that space is reserved.

So this is where we are now... We have 3 namespaces, 1 of which can be deleted, but that will probably not free anything, as the chunks are all in the same folder...

I am not sure what you did now - please always provide the exact commands/actions you did and what the result was, else nobody will be able to follow you..

Is it possible to attach a single ZFS disk and bind it to the RAIDZ2 temporarily, and detach that disk later?

no.