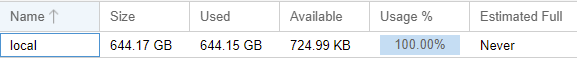

I'm stuck in a situation where backups are no longer functioning because the storage is at 100%.

Pruning malfunctioned, and disk usage began to grow. I was alerted when free space dropped to 10%. (The prune job's "Next Run" was in the past; telling it to "Run now" resolved this, but the damage was done.)

After performing a manual prune and garbage collection, 40% of the existing data was placed in "Pending removals". However, my backups are still failing.

How do I delete this data? It's been over 24 hours since this data was backed up. The data I want to delete is over 6 days' worth of backups that would normally have been pruned.

My backups are failing; if I can't fix this, I'll have to blow the whole thing away and start again.

ha, estimated full "Never"

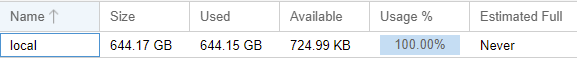

Code:

Pending removals: 262.697 GiB (in 200114 chunks)
