I'm seeing the "Failed to start Import ZFS pool [pool]" error on 3 of our nodes. ZFS works fine, but the error is disconcerting. Here's what I found after some testing on a PVE 7.0-11 node that hadn't had ZFS set up on it before. I haven't checked PVE 7.2, so I'm not sure if this is still relevant.


Analysis

- The failure message Does Not appear after creating a zpool via command line.

- ZFS relies on zfs-import-cache.service and zfs-import-scan.service to import pools at boot.

- The failure message Does appear after creating a zpool via the Proxmox web ui.

- Proxmox seems to be creating a static service entry (zfs-import@[pool].service) to ensure the pool gets loaded, instead of relying on the zfs-import-cache.service or zfs-import-scan.service. This is similar to what OpenZFS recommends in their Debian Bullseye Root on ZFS guide.

- The problem is that the zfs-import-cache.service runs before the zfs-import@[pool].service. Since the pool is already imported by the cache service, zfs-import@[pool].service fails to import it.

May 17 14:06:35 pve10 zpool[1276]: cannot import 'tank02': pool already exists

- The failure message Continues to appear after the zpool is destroyed.

- You can't destroy a zpool from within the Proxmox web ui.

- Destroying the zpool from the command line has no effect on the static service entry created by Proxmox.

Solution

My preferred solution is to remove the service entry created by Proxmox, and let the ZFS import-cache and import-scan services do their thing.
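Removing the symlink by hand should be equivalent to disabling the unit through systemctl, since the unit's [Install] section is WantedBy=zfs-import.target. Here is a minimal sketch that only prints the disable commands so you can review them before running anything. The function name print_disable_cmds is made up for illustration, and it assumes plain pool names like tank02 that need no systemd escaping.

```shell
# Print (do not run) the systemctl command that would disable the
# per-pool import unit for each pool name passed as an argument.
# Assumption: pool names are simple (letters, digits), so no
# systemd-escape step is needed to build the instance name.
print_disable_cmds() {
    for pool in "$@"; do
        printf 'systemctl disable %s\n' "zfs-import@${pool}.service"
    done
}

print_disable_cmds tank02
```

If the printed command looks right, it can be pasted into a root shell. I have only reasoned through this from the unit file, so treat it as untested against a real PVE node.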

Bash:

root@pve10:~# ls /etc/systemd/system/zfs-import.target.wants

zfs-import-cache.service zfs-import-scan.service zfs-import@tank02.service

root@pve10:~# rm /etc/systemd/system/zfs-import.target.wants/zfs-import@tank02.service

root@pve10:~# ls /etc/systemd/system/zfs-import.target.wants

zfs-import-cache.service zfs-import-scan.service

root@pve10:~# reboot

root@pve10:~# systemctl | grep zfs-import

zfs-import-cache.service loaded active exited Import ZFS pools by cache file

zfs-import.target loaded active active ZFS pool import target

Alternatively, you could try to get the static service entry to run before the import-cache service. I tried doing this by adding Before=zfs-import-cache.service to the static service, but it seems like this prevented import-cache from running. There's probably a better way to do this, as I'm no Linux expert.

Bash:

root@pve10:~# nano /etc/systemd/system/zfs-import.target.wants/zfs-import@tank02.service

[Unit]

Description=Import ZFS pool %i

Documentation=man:zpool(8)

DefaultDependencies=no

After=systemd-udev-settle.service

After=cryptsetup.target

After=multipathd.target

Before=zfs-import.target

Before=zfs-import-cache.service

[Service]

Type=oneshot

RemainAfterExit=yes

ExecStart=/sbin/zpool import -N -d /dev/disk/by-id -o cachefile=none %I

[Install]

WantedBy=zfs-import.target

root@pve10:~# reboot

root@pve10:~# systemctl | grep zfs-import

zfs-import@tank02.service loaded active exited Import ZFS pool tank02

zfs-import.target loaded active active ZFS pool import target

Reference Info

Manually created zpool does not cause error

Bash:

root@pve10:~# zpool status

no pools available

root@pve10:~# ls /etc/systemd/system/zfs-import.target.wants

zfs-import-cache.service zfs-import-scan.service

root@pve10:~# zpool create -o ashift=12 tank01 raidz2 sdb sdc sdd sde

root@pve10:~# zpool status

pool: tank01

state: ONLINE

config:

NAME STATE READ WRITE CKSUM

tank01 ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

root@pve10:~# ls /etc/systemd/system/zfs-import.target.wants

zfs-import-cache.service zfs-import-scan.service

root@pve10:~# reboot

root@pve10:~# cat /var/log/syslog | grep 'Failed to start Import ZFS pool'

root@pve10:~# zpool status

pool: tank01

state: ONLINE

config:

NAME STATE READ WRITE CKSUM

tank01 ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

root@pve10:~# ls /etc/systemd/system/zfs-import.target.wants

zfs-import-cache.service zfs-import-scan.service

root@pve10:~# systemctl | grep zfs-import

zfs-import-cache.service loaded active exited Import ZFS pools by cache file

zfs-import.target loaded active active ZFS pool import target

root@pve10:~# zpool destroy tank01

Proxmox created zpool causes error

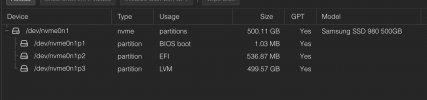

- Log in to Proxmox and select the node

- Expand "Disks" and select "ZFS"

- Click "Create: ZFS"

- Name: tank02

- RAID Level: RAIDZ2

- Compression: on

- ashift: 12

Bash:

root@pve10:~# zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

tank02 14.5T 1.62M 14.5T - - 0% 0% 1.00x ONLINE -

root@pve10:~# ls /etc/systemd/system/zfs-import.target.wants

zfs-import-cache.service zfs-import-scan.service zfs-import@tank02.service

root@pve10:~# systemctl | grep zfs-import

zfs-import-cache.service loaded active exited Import ZFS pools by cache file

zfs-import.target loaded active active ZFS pool import target

root@pve10:~# reboot

root@pve10:~# cat /var/log/syslog | grep 'Failed to start Import ZFS pool'

May 17 14:06:35 pve10 systemd[1]: Failed to start Import ZFS pool tank02.

root@pve10:~# zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

tank02 14.5T 1.62M 14.5T - - 0% 0% 1.00x ONLINE -

root@pve10:~# systemctl | grep zfs-import

zfs-import-cache.service loaded active exited Import ZFS pools by cache file

● zfs-import@tank02.service loaded failed failed Import ZFS pool tank02

zfs-import.target loaded active active ZFS pool import target

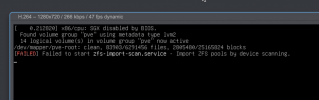

root@pve10:~# systemctl status zfs-import@tank02.service

Warning: The unit file, source configuration file or drop-ins of zfs-import@tank02.service changed on disk. Run 'systemctl daemon-reload' to reload >

● zfs-import@tank02.service - Import ZFS pool tank02

Loaded: loaded (/lib/systemd/system/zfs-import@.service; enabled; vendor preset: enabled)

Active: failed (Result: exit-code) since Wed 2023-05-17 14:06:35 EDT; 8min ago

Docs: man:zpool(8)

Process: 1276 ExecStart=/sbin/zpool import -N -d /dev/disk/by-id -o cachefile=none tank02 (code=exited, status=1/FAILURE)

Main PID: 1276 (code=exited, status=1/FAILURE)

CPU: 57ms

May 17 14:06:34 pve10 systemd[1]: Starting Import ZFS pool tank02...

May 17 14:06:35 pve10 zpool[1276]: cannot import 'tank02': pool already exists

May 17 14:06:35 pve10 systemd[1]: zfs-import@tank02.service: Main process exited, code=exited, status=1/FAILURE

May 17 14:06:35 pve10 systemd[1]: zfs-import@tank02.service: Failed with result 'exit-code'.

May 17 14:06:35 pve10 systemd[1]: Failed to start Import ZFS pool tank02.

Destroying the zpool has no effect on service entry created by Proxmox

Bash:

root@pve10:~# ls /etc/systemd/system/zfs-import.target.wants

zfs-import-cache.service zfs-import-scan.service zfs-import@tank02.service

root@pve10:~# zpool destroy tank02

root@pve10:~# ls /etc/systemd/system/zfs-import.target.wants

zfs-import-cache.service zfs-import-scan.service zfs-import@tank02.service
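Since the entry outlives the pool, it might help to check for leftovers after destroying pools. The following is a rough sketch, not something Proxmox ships: the function name stale_import_units is hypothetical, and it simply compares the zfs-import@*.service names in a directory against a list of pool names you pass in.

```shell
# List per-pool import units in a wants directory whose pool name is
# not among the pool names given as further arguments.
# Usage: stale_import_units WANTS_DIR [POOL...]
stale_import_units() {
    wants_dir=$1
    shift
    for unit in "$wants_dir"/zfs-import@*.service; do
        # Skip the literal pattern when the glob matched nothing;
        # -L keeps dangling symlinks in the listing.
        [ -e "$unit" ] || [ -L "$unit" ] || continue
        name=${unit##*/}            # e.g. zfs-import@tank02.service
        pool=${name#zfs-import@}    # e.g. tank02.service
        pool=${pool%.service}       # e.g. tank02
        found=no
        for known in "$@"; do
            [ "$known" = "$pool" ] && found=yes
        done
        [ "$found" = no ] && echo "$name"
    done
    return 0
}
```

On a node this would be called as stale_import_units /etc/systemd/system/zfs-import.target.wants $(zpool list -H -o name), which feeds in the names of the currently imported pools. It only prints candidates and removes nothing, and it assumes pool names without characters that systemd would escape in the unit name.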