Hi,

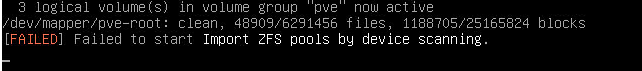

At startup of two freshly installed Proxmox nodes (v7.2.1), I get an error saying that a ZFS pool could not be imported.

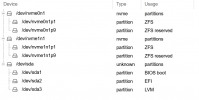

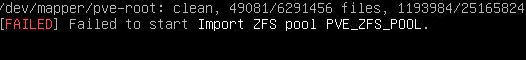

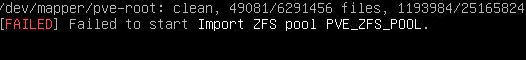

PVE_ZFS_POOL is the name of the pool. Proxmox itself is installed on a hardware RAID, not on ZFS; PVE_ZFS_POOL is a separate ZFS storage pool.

Proxmox starts up after that message and seems to work normally. Is this error something I need to worry about? What causes it?

Thank you so much for your help!