Hi,

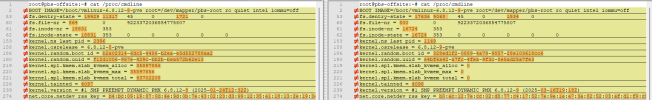

I'm having a strange problem with my bare-metal offsite Proxmox Backup Server, apparently since the last update to the newest kernel, 6.8.12-9-pve. After boot, the system cannot import the ZFS pools because the zfs kernel module fails to load, which also causes systemd-modules-load.service to fail during the boot process.

The system is an HP MicroServer Gen8 (Intel Core i5-3470T, 8 GB RAM) booting in legacy mode. Articles about "key rejected" failures mostly point to module-signing problems in connection with UEFI boot, which doesn't fit my situation.

For now I have pinned the kernel to 6.8.12-8-pve, which works without any ZFS problems so far.

Errors in the log file with kernel 6.8.12-9-pve:

...

Apr 05 08:35:14 pbs-offsite systemd-journald[311]: Journal started

Apr 05 08:35:14 pbs-offsite systemd-journald[311]: Runtime Journal (/run/log/journal/98b229f1f0e1463fb3a1a93a9a86966c) is 8.0M, max 78.8M, 70.8M free.

Apr 05 08:35:12 pbs-offsite systemd-modules-load[312]: Failed to insert module 'zfs': Key was rejected by service

Apr 05 08:35:14 pbs-offsite systemd[1]: Started systemd-journald.service - Journal Service.

Apr 05 08:35:12 pbs-offsite systemd-udevd[331]: Using default interface naming scheme 'v252'.

Apr 05 08:35:13 pbs-offsite lvm[379]: PV /dev/sda3 online, VG pbs is complete.

Apr 05 08:35:13 pbs-offsite lvm[379]: VG pbs finished

Apr 05 08:35:14 pbs-offsite kernel: usbcore: registered new interface driver usbmouse

Apr 05 08:35:14 pbs-offsite kernel: usbcore: registered new interface driver usbkbd

Apr 05 08:35:13 pbs-offsite systemd-modules-load[382]: Failed to insert module 'zfs': Key was rejected by service

Apr 05 08:35:13 pbs-offsite (udev-worker)[335]: sdc1: Process '/sbin/modprobe zfs' failed with exit code 1.

Apr 05 08:35:13 pbs-offsite (udev-worker)[345]: sdb1: Process '/sbin/modprobe zfs' failed with exit code 1.

Apr 05 08:35:13 pbs-offsite (udev-worker)[338]: sdd1: Process '/sbin/modprobe zfs' failed with exit code 1.

Apr 05 08:35:14 pbs-offsite lvm[303]: 2 logical volume(s) in volume group "pbs" monitored

Apr 05 08:35:13 pbs-offsite systemd-modules-load[412]: Failed to insert module 'zfs': Key was rejected by service

Apr 05 08:35:13 pbs-offsite systemd-modules-load[444]: Failed to insert module 'zfs': Key was rejected by service

Apr 05 08:35:14 pbs-offsite udevadm[334]: systemd-udev-settle.service is deprecated. Please fix zfs-import-cache.service, zfs-import-scan.service not to pull it in.

Apr 05 08:35:14 pbs-offsite kernel: input: BMC Virtual Keyboard as /devices/pci0000:00/0000:00:1c.7/0000:01:00.4/usb1/1-1/1-1:1.0/0003:03F0:7029.0001/input/input5

Apr 05 08:35:14 pbs-offsite systemd[1]: Starting systemd-journal-flush.service - Flush Journal to Persistent Storage...

Apr 05 08:35:14 pbs-offsite systemd-journald[311]: Time spent on flushing to /var/log/journal/98b229f1f0e1463fb3a1a93a9a86966c is 148.751ms for 1054 entries.

Apr 05 08:35:14 pbs-offsite systemd-journald[311]: System Journal (/var/log/journal/98b229f1f0e1463fb3a1a93a9a86966c) is 74.5M, max 4.0G, 3.9G free.

Apr 05 08:35:14 pbs-offsite systemd-journald[311]: Received client request to flush runtime journal.

Apr 05 08:35:14 pbs-offsite kernel: hid-generic 0003:03F0:7029.0001: input,hidraw0: USB HID v1.01 Keyboard [BMC Virtual Keyboard ] on usb-0000:01:00.4-1/input0

Apr 05 08:35:14 pbs-offsite kernel: input: BMC Virtual Keyboard as /devices/pci0000:00/0000:00:1c.7/0000:01:00.4/usb1/1-1/1-1:1.1/0003:03F0:7029.0002/input/input6

Apr 05 08:35:14 pbs-offsite kernel: hid-generic 0003:03F0:7029.0002: input,hidraw1: USB HID v1.01 Mouse [BMC Virtual Keyboard ] on usb-0000:01:00.4-1/input1

Apr 05 08:35:14 pbs-offsite systemd[1]: Finished systemd-journal-flush.service - Flush Journal to Persistent Storage.

Apr 05 08:35:14 pbs-offsite systemd[1]: Finished ifupdown2-pre.service - Helper to synchronize boot up for ifupdown.

Apr 05 08:35:14 pbs-offsite systemd[1]: Finished systemd-udev-settle.service - Wait for udev To Complete Device Initialization.

Apr 05 08:35:14 pbs-offsite systemd[1]: Starting zfs-import@offsite\x2dzfs.service - Import ZFS pool offsite\x2dzfs...

Apr 05 08:35:14 pbs-offsite zpool[467]: Failed to initialize the libzfs library.

Apr 05 08:35:14 pbs-offsite systemd[1]: zfs-import@offsite\x2dzfs.service: Main process exited, code=exited, status=1/FAILURE

Apr 05 08:35:14 pbs-offsite systemd[1]: zfs-import@offsite\x2dzfs.service: Failed with result 'exit-code'.

Apr 05 08:35:14 pbs-offsite systemd[1]: Failed to start zfs-import@offsite\x2dzfs.service - Import ZFS pool offsite\x2dzfs.

Apr 05 08:35:14 pbs-offsite systemd[1]: zfs-import-cache.service - Import ZFS pools by cache file was skipped because of an unmet condition check (ConditionPathIsDirectory=/sys/module/zfs).

Apr 05 08:35:14 pbs-offsite systemd[1]: zfs-import-scan.service - Import ZFS pools by device scanning was skipped because of an unmet condition check (ConditionPathIsDirectory=/sys/module/zfs).

Apr 05 08:35:14 pbs-offsite systemd[1]: Reached target zfs-import.target - ZFS pool import target.

Apr 05 08:35:14 pbs-offsite systemd[1]: zfs-mount.service - Mount ZFS filesystems was skipped because of an unmet condition check (ConditionPathIsDirectory=/sys/module/zfs).

Apr 05 08:35:14 pbs-offsite systemd[1]: Reached target local-fs.target - Local File Systems.

...

Loading the module manually reproduces the error:

root@pbs-offsite:~# modprobe zfs

modprobe: ERROR: could not insert 'zfs': Key was rejected by service
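For context: "Key was rejected by service" is the message text for the `EKEYREJECTED` error, which as far as I understand comes from the kernel's own module signature check and can occur independently of UEFI Secure Boot. Below is a minimal sketch for checking whether the module carries signature metadata at all. `has_signer` is a hypothetical helper name, not an existing tool, and it only inspects `modinfo` output passed on stdin. It does not verify the signature itself.

```shell
# Hypothetical helper: reads `modinfo` output on stdin and succeeds
# if it contains a non-empty "signer:" field.
has_signer() {
    grep -E -q '^signer:[[:space:]]+[^[:space:]]'
}

# Assumed usage on the affected host (compare both installed kernels):
#   modinfo -k 6.8.12-9-pve zfs | has_signer && echo "signer present"
#   modinfo -k 6.8.12-8-pve zfs | has_signer && echo "signer present"
```

If both kernels report a signer, the difference would have to lie elsewhere, for example in the module file on disk or in the keys the kernel trusts. That is an assumption to verify, not a diagnosis.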

systemd-modules-load.service is also in a failed state after boot:

root@pbs-offsite:~# systemctl status systemd-modules-load.service

× systemd-modules-load.service - Load Kernel Modules

Loaded: loaded (/lib/systemd/system/systemd-modules-load.service; static)

Active: failed (Result: exit-code) since Sat 2025-04-05 08:52:03 CEST; 4min 58s ago

Docs: man:systemd-modules-load.service(8)

man:modules-load.d(5)

Process: 431 ExecStart=/lib/systemd/systemd-modules-load (code=exited, status=1/FAILURE)

Main PID: 431 (code=exited, status=1/FAILURE)

CPU: 53ms
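The status output does not say which module failed or why, so a small filter over the journal can tally the failures. This is a minimal sketch: `count_module_failures` is a hypothetical helper name, and it assumes the message format `Failed to insert module '<name>': <reason>` seen in the log excerpt.

```shell
# Hypothetical helper: reads journal text on stdin and prints one line per
# distinct "module: reason" pair, prefixed with how often it occurred.
count_module_failures() {
    awk -F"Failed to insert module " '
        NF > 1 { seen[$2]++ }
        END    { for (m in seen) printf "%d %s\n", seen[m], m }
    '
}

# Assumed usage on the affected host:
#   journalctl -b | count_module_failures
```

If only `zfs` shows up, the failure of systemd-modules-load.service is a consequence of the zfs problem and not a separate issue.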

Comparison of kernel errors, 6.8.12-8-pve (previous boot, -b -1) vs. 6.8.12-9-pve (current boot, -b):

root@pbs-offsite:~# journalctl -b -1 -k -p err

Apr 05 08:45:56 pbs-offsite kernel: ACPI: SPCR: [Firmware Bug]: Unexpected SPCR Access Width. Defaulting to byte size

Apr 05 08:45:56 pbs-offsite kernel: DMAR: [Firmware Bug]: No firmware reserved region can cover this RMRR [0x00000000000e8000-0x00000000000e8fff], contact BIOS vendor for fixes

Apr 05 08:45:56 pbs-offsite kernel: [Firmware Bug]: the BIOS has corrupted hw-PMU resources (MSR 38d is 330)

Apr 05 08:45:56 pbs-offsite kernel: DMAR: DRHD: handling fault status reg 2

Apr 05 08:45:56 pbs-offsite kernel: DMAR: [INTR-REMAP] Request device [01:00.0] fault index 0x17 [fault reason 0x26] Blocked an interrupt request due to source-id verification failure

root@pbs-offsite:~# journalctl -b -k -p err

Apr 05 08:52:02 pbs-offsite kernel: ACPI: SPCR: [Firmware Bug]: Unexpected SPCR Access Width. Defaulting to byte size

Apr 05 08:52:02 pbs-offsite kernel: DMAR: [Firmware Bug]: No firmware reserved region can cover this RMRR [0x00000000000e8000-0x00000000000e8fff], contact BIOS vendor for fixes

Apr 05 08:52:02 pbs-offsite kernel: [Firmware Bug]: the BIOS has corrupted hw-PMU resources (MSR 38d is 330)

Apr 05 08:52:03 pbs-offsite kernel: DMAR: DRHD: handling fault status reg 2

Apr 05 08:52:03 pbs-offsite kernel: DMAR: [INTR-REMAP] Request device [01:00.0] fault index 0x14 [fault reason 0x26] Blocked an interrupt request due to source-id verification failure

Apr 05 08:52:04 pbs-offsite systemd[1]: Failed to start systemd-modules-load.service - Load Kernel Modules.

Apr 05 08:52:04 pbs-offsite systemd[1]: Failed to start systemd-modules-load.service - Load Kernel Modules.

Apr 05 08:52:04 pbs-offsite systemd[1]: Failed to start systemd-modules-load.service - Load Kernel Modules.

So far I have tried the following:

- disabling VT-d and VT-x in the BIOS options

- disabling the IOMMU in the default GRUB options (Apr 05 22:27:02 pbs-offsite kernel: Command line: BOOT_IMAGE=/boot/vmlinuz-6.8.12-9-pve root=/dev/mapper/pbs-root ro quiet intel_iommu=off)
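To confirm that a boot option such as `intel_iommu=off` really reached the kernel, and to check whether signature enforcement was requested via the `module.sig_enforce` parameter, the command line can be tested token by token. This is a minimal sketch, and `cmdline_has_param` is a hypothetical helper name.

```shell
# Hypothetical helper: reads a kernel command line on stdin and succeeds
# if the parameter named in $1 is present, with or without a value.
cmdline_has_param() {
    tr ' ' '\n' | awk -F= -v p="$1" '$1 == p { found = 1 } END { exit !found }'
}

# Assumed usage on the running system:
#   cmdline_has_param intel_iommu < /proc/cmdline && echo "intel_iommu is set"
#   cmdline_has_param module.sig_enforce < /proc/cmdline && echo "enforcement requested"
```

The match is on the exact parameter name, so `iommu` does not match `intel_iommu=off`.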

The problem persists. Any suggestions on this behavior?