Hi,

This morning none of my VMs were able to find their HDD to boot from.

Starting a VM on Proxmox fails with the following error:

TASK ERROR: can't activate LV '/dev/pve/vm-100-disk-0': Failed to find logical volume "pve/vm-100-disk-0"
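One way to double-check what this error says is to ask LVM directly whether the volume exists. This is only a minimal sketch, assuming it is run as root on the Proxmox node; the helper name `lv_exists` is made up for illustration.

```shell
# Hypothetical helper: succeeds only if LVM can find the given VG/LV.
# Assumption: run as root on the node, with the LVM tools installed.
lv_exists() {
    # lvs returns a non-zero exit status when the volume cannot be found
    lvs --noheadings -o lv_name "$1" >/dev/null 2>&1
}

if lv_exists pve/vm-100-disk-0; then
    echo "pve/vm-100-disk-0 is present"
else
    echo "pve/vm-100-disk-0 is missing"
fi
```

Given the lvscan output further down, which lists only swap, root and data, I would expect this to report the volume as missing.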

This morning the storage holding the VM disks showed "Status: unknown", which I resolved by re-adding it to the node. I went through a couple of threads to understand the issue, but without success.

Even restoring a backup does not work and fails with the following error:

TASK ERROR: command 'set -o pipefail && zstd -q -d -c /mnt/pve/backup/dump/vzdump-qemu-100-2023_08_05-00_00_02.vma.zst | vma extract -v -r /var/tmp/vzdumptmp27655.fifo - /var/tmp/vzdumptmp27655' failed: lvcreate 'pve/vm-100-disk-0' error: Volume group "pve" has insufficient free space (4094 extents): 17920 required.
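To put the extent counts from this error into perspective, here is a small sketch that converts them to GiB. It assumes the LVM default physical extent size of 4 MiB, which is not confirmed by any output in this post (`vgdisplay pve` would show the actual "PE Size"). The function name `extents_to_gib` is made up for illustration.

```shell
# Assumption: physical extent size is 4 MiB (the LVM default).
pe_size_mib=4

# Convert a number of extents to GiB, truncated to two decimals.
extents_to_gib() {
    mib=$(( $1 * pe_size_mib ))
    printf '%d.%02d' $(( mib / 1024 )) $(( (mib % 1024) * 100 / 1024 ))
}

echo "free:     $(extents_to_gib 4094) GiB"
echo "required: $(extents_to_gib 17920) GiB"
```

Under that assumption the restore needs about 70 GiB, while only about 15.99 GiB are unallocated in the volume group, which would match the VFree value reported by vgs below.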

So I think the disks are located on the storage, but they won't appear in the VM Disks list.

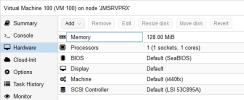

Here is some information about the system:
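For reference, the outputs that follow can be gathered in one go with something like the sketch below. It assumes a root shell on the Proxmox node; the function name `collect_info` is made up for illustration, and a command that is missing or fails is reported instead of aborting the run.

```shell
# Hypothetical helper that runs the commands behind the outputs in this post.
# Assumption: run as root on the node.
collect_info() {
    for cmd in "lvs" "vgs" "lsblk" "cat /etc/pve/storage.conf" "lvscan"; do
        echo "== $cmd =="
        # Word splitting of $cmd is intended; failures are reported, not fatal.
        $cmd 2>&1 || echo "(command failed or not available: $cmd)"
    done
}

collect_info
```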

lvs:

Code:

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

data pve twi-a-tz-- <181.18g 0.00 0.89

root pve -wi-ao---- 69.50g

swap pve -wi-ao---- 8.00g

vgs:

Code:

VG #PV #LV #SN Attr VSize VFree

pve 1 3 0 wz--n- 278.37g 15.99g

lsblk:

Code:

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 278.9G 0 disk

├─sda1 8:1 0 1007K 0 part

├─sda2 8:2 0 512M 0 part

└─sda3 8:3 0 278.4G 0 part

  ├─pve-swap 253:0 0 8G 0 lvm [SWAP]

  ├─pve-root 253:1 0 69.5G 0 lvm /

  ├─pve-data_tmeta 253:2 0 1.9G 0 lvm

  │ └─pve-data 253:4 0 181.2G 0 lvm

  └─pve-data_tdata 253:3 0 181.2G 0 lvm

    └─pve-data 253:4 0 181.2G 0 lvm

sdb 8:16 0 817.3G 0 disk

sr0 11:0 1 1024M 0 rom

The content of /etc/pve/storage.conf:

Code:

dir: local

path /var/lib/vz

content vztmpl,backup,iso

lvmthin: local-lvm

thinpool data

vgname pve

content images,rootdir

nfs: backup

export /volume1/Sicherung

path /mnt/pve/backup

server 10.59.0.15

content backup

prune-backups keep-daily=7,keep-last=3,keep-weekly=4

lvm: guests

vgname pve

content rootdir,images

nodes JMSRVPRX

shared 0

lvscan:

Code:

ACTIVE '/dev/pve/swap' [8.00 GiB] inherit

ACTIVE '/dev/pve/root' [69.50 GiB] inherit

ACTIVE '/dev/pve/data' [<181.18 GiB] inherit

Does anyone have an idea how I can bring my VMs up again?

Thank you very much.

Best regards.
