Extremely Slow PBS Speeds

- Thread starter endlessnesting

- Tags

- pbs backup smb truenas

Keep in mind that PBS really needs low latencies. Veeam will use big incremental image files. PBS chops everything into small chunks (from some KB up to a maximum of 4 MiB). So if you back up a 4 TB disk, it will need to hash and write roughly 1 million small files at the very least. NFS/SMB isn't great at handling millions of small files.
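The "1 million files" figure can be checked with a quick calculation. This is only a sketch: it assumes every chunk has the maximum size of 4 MiB and ignores deduplication and empty regions, so real chunk counts will differ. The function name is made up for illustration.

```python
CHUNK_SIZE_MAX = 4 * 1024**2  # 4 MiB, the maximum chunk size mentioned above


def min_chunk_count(disk_bytes: int, chunk_bytes: int = CHUNK_SIZE_MAX) -> int:
    """Lower bound on the chunk count if every chunk had the maximum size."""
    # Integer ceiling division avoids floating-point rounding on large sizes.
    return (disk_bytes + chunk_bytes - 1) // chunk_bytes


if __name__ == "__main__":
    print(min_chunk_count(4 * 1000**4))  # 4 TB (decimal)  -> 953675
    print(min_chunk_count(4 * 1024**4))  # 4 TiB (binary)  -> 1048576
```

Since many chunks are smaller than 4 MiB, the real number of files can only be higher than this bound for the same amount of unique data.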

I'm just now thinking about this, but my PBS VM sits on shared NVMe VM storage. Couldn't I write the backups locally to disk, then use cron to move the resulting local backup files to an SMB share?

I didn't actually look at the file system to see whether PBS stores the VM backups as millions of tiny individual files, or whether they are only tiny individual files while being transferred from a Proxmox host to PBS.

It's stored as millions of small files, and the atime is important for pruning/GC: garbage collection deletes every chunk whose atime was not refreshed to mark it as still in use. So I guess it's problematic to copy the files over.
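To illustrate why the atime matters, here is a toy model of an atime-based mark-and-sweep. It is not PBS's actual code: the function names, the in-memory dictionary standing in for the chunk store, and the 24-hour grace window are all assumptions made for the sketch.

```python
from typing import Dict, Iterable, List

GRACE_SECONDS = 24 * 3600  # assumed safety window, illustrative value only


def mark(atimes: Dict[str, float], referenced: Iterable[str], now: float) -> None:
    """Mark phase: refresh the atime of every chunk a backup index still references."""
    for digest in referenced:
        if digest in atimes:
            atimes[digest] = now


def sweep(atimes: Dict[str, float], now: float, grace: float = GRACE_SECONDS) -> List[str]:
    """Sweep phase: remove every chunk whose atime is older than the cutoff."""
    cutoff = now - grace
    removed = sorted(digest for digest, atime in atimes.items() if atime < cutoff)
    for digest in removed:
        del atimes[digest]
    return removed


if __name__ == "__main__":
    now = 3 * 24 * 3600.0
    chunks = {"aa": 0.0, "bb": 0.0, "cc": 0.0}  # digest -> last access time
    mark(chunks, ["aa", "bb"], now)  # only "aa" and "bb" are still referenced
    print(sweep(chunks, now))        # only the unreferenced chunk is removed
```

In this model, a storage layer that drops or ignores atime updates would make the mark phase ineffective, and the sweep would then remove chunks that are still in use. That is the risk with moving chunk files around behind PBS's back.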

After some modification, this is my benchmark (PBS still in a VM):

I'm about to run some backups to see what the overall speed is, but this is looking much better. Pretty sure it was the cpu switch from kvm to host.

Uploaded 365 chunks in 5 seconds.

Time per request: 13784 microseconds.

TLS speed: 304.27 MB/s

SHA256 speed: 218.28 MB/s

Compression speed: 476.22 MB/s

Decompress speed: 1203.41 MB/s

AES256/GCM speed: 2464.00 MB/s

Verify speed: 339.96 MB/s

┌───────────────────────────────────┬─────────────────────┐
│ Name                              │ Value               │
╞═══════════════════════════════════╪═════════════════════╡
│ TLS (maximal backup upload speed) │ 304.27 MB/s (25%)   │
├───────────────────────────────────┼─────────────────────┤
│ SHA256 checksum computation speed │ 218.28 MB/s (11%)   │
├───────────────────────────────────┼─────────────────────┤
│ ZStd level 1 compression speed    │ 476.22 MB/s (63%)   │
├───────────────────────────────────┼─────────────────────┤
│ ZStd level 1 decompression speed  │ 1203.41 MB/s (100%) │
├───────────────────────────────────┼─────────────────────┤
│ Chunk verification speed          │ 339.96 MB/s (45%)   │
├───────────────────────────────────┼─────────────────────┤
│ AES256 GCM encryption speed       │ 2464.00 MB/s (68%)  │
└───────────────────────────────────┴─────────────────────┘
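The TLS figure appears to follow directly from the "Time per request" line. The sketch below assumes that each benchmark request uploads one 4 MiB chunk; that assumption is not stated in the output, but it reproduces the reported value closely.

```python
CHUNK_BYTES = 4 * 1024**2  # assumption: one 4 MiB chunk per benchmark request


def upload_speed_mb_s(time_per_request_us: float, chunk_bytes: int = CHUNK_BYTES) -> float:
    """Upload throughput in MB/s (10^6 bytes/s) for a given per-request latency."""
    seconds = time_per_request_us / 1_000_000
    return chunk_bytes / seconds / 1_000_000


if __name__ == "__main__":
    # 13784 microseconds per request, as reported in the benchmark above
    print(round(upload_speed_mb_s(13784), 1))  # 304.3, close to the reported 304.27 MB/s
```

Under this assumption the TLS test is latency-bound: halving the time per request doubles the reported upload speed.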

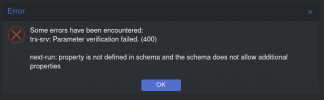

Did you upgrade your PVE from 7.0 to 7.1 recently? I got that error after the upgrade because I didn't refresh the browser cache (CTRL+F5) afterwards. Because of that, I was using the cached PVE 7.0 web UI with the PVE 7.1 backend. This resulted in wrong backup configs, because PVE switched the backup scheduler with PVE 7.1. So it's best to do a CTRL+F5 and then create that backup task again.

I did an upgrade, but I was already on 7.1.

I have tried clearing my browser cache, restarting my computer, and recreating the backup tasks. Same error.

Please send your:

> pveversion -v

The backup job still ran at its normal time; I just can't run it manually.

# pveversion -v

proxmox-ve: 7.1-1 (running kernel: 5.13.19-2-pve)

pve-manager: 7.1-9 (running version: 7.1-9/0740a2bc)

pve-kernel-helper: 7.1-8

pve-kernel-5.13: 7.1-6

pve-kernel-5.13.19-3-pve: 5.13.19-6

pve-kernel-5.13.19-2-pve: 5.13.19-4

ceph-fuse: 15.2.15-pve1

corosync: 3.1.5-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.22-pve2

libproxmox-acme-perl: 1.4.1

libproxmox-backup-qemu0: 1.2.0-1

libpve-access-control: 7.1-5

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.1-2

libpve-guest-common-perl: 4.0-3

libpve-http-server-perl: 4.1-1

libpve-storage-perl: 7.0-15

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 4.0.11-1

lxcfs: 4.0.11-pve1

novnc-pve: 1.3.0-1

proxmox-backup-client: 2.1.3-1

proxmox-backup-file-restore: 2.1.3-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.4-5

pve-cluster: 7.1-3

pve-container: 4.1-3

pve-docs: 7.1-2

pve-edk2-firmware: 3.20210831-2

pve-firewall: 4.2-5

pve-firmware: 3.3-4

pve-ha-manager: 3.3-1

pve-i18n: 2.6-2

pve-qemu-kvm: 6.1.0-3

pve-xtermjs: 4.12.0-1

qemu-server: 7.1-4

smartmontools: 7.2-1

spiceterm: 3.2-2

swtpm: 0.7.0~rc1+2

vncterm: 1.7-1

zfsutils-linux: 2.1.2-pve1

> pve-manager: 7.1-9 (running version: 7.1-9/0740a2bc)

The bug is fixed in 7.1-10.

=> see changelog on http://download.proxmox.com/debian/...ion/binary-amd64/pve-manager_7.1-10.changelog
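Whether a node already has the fixed package can be checked from the `pveversion -v` output. The helper below is a hypothetical sketch, not part of any Proxmox tooling, and it uses a simplified numeric comparison rather than full Debian version ordering.

```python
import re
from typing import Tuple


def parse_pve_manager_version(pveversion_output: str) -> Tuple[int, ...]:
    """Extract the pve-manager version, e.g. '7.1-9' -> (7, 1, 9)."""
    match = re.search(r"^pve-manager:\s*(\d+(?:[.-]\d+)*)", pveversion_output, re.MULTILINE)
    if match is None:
        raise ValueError("no pve-manager line found")
    return tuple(int(part) for part in re.split(r"[.-]", match.group(1)))


def has_fix(pveversion_output: str, fixed_in: Tuple[int, ...] = (7, 1, 10)) -> bool:
    """True if the installed pve-manager is at least the version carrying the fix."""
    return parse_pve_manager_version(pveversion_output) >= fixed_in


if __name__ == "__main__":
    sample = "proxmox-ve: 7.1-1 (running kernel: 5.13.19-2-pve)\npve-manager: 7.1-9 (running version: 7.1-9/0740a2bc)\n"
    print(has_fix(sample))  # False: 7.1-9 is older than 7.1-10
```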

> It seems the CPU change to host has made the biggest improvement. Backup jobs now run at 500-800 Mbit/s.

Thanks for your work in this thread and for all the support everyone here contributed; this was incredibly interesting and informative to read, you guys! Has this been documented in the wiki by chance? It might be helpful as a troubleshooting section.
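For reference, the CPU type of a VM can also be changed from the PVE shell with `qm set`. The snippet below only builds and prints the command; the VM ID 100 is a placeholder, and the helper name is made up for this sketch.

```python
import shlex
from typing import List


def cpu_host_command(vmid: int) -> List[str]:
    """Build the `qm set` call that switches a VM's CPU type to "host"."""
    if vmid <= 0:
        raise ValueError("vmid must be a positive integer")
    return ["qm", "set", str(vmid), "--cpu", "host"]


if __name__ == "__main__":
    # 100 is a placeholder VM ID; substitute the ID of the PBS VM.
    print(shlex.join(cpu_host_command(100)))  # qm set 100 --cpu host
```

The new CPU type typically only takes effect after the VM has been fully stopped and started again, not after a reboot from inside the guest.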

I can confirm this: by changing the CPU setting of the PBS VM to "host", I could raise the TLS value from 43 MB/s to 275 MB/s:

Before:

Uploaded 52 chunks in 5 seconds.

Time per request: 98437 microseconds.

TLS speed: 42.61 MB/s

SHA256 speed: 231.30 MB/s

Compression speed: 373.42 MB/s

Decompress speed: 621.15 MB/s

AES256/GCM speed: 1409.60 MB/s

Verify speed: 263.64 MB/s

After:

Uploaded 330 chunks in 5 seconds.

Time per request: 15269 microseconds.

TLS speed: 274.69 MB/s

SHA256 speed: 264.81 MB/s

Compression speed: 384.39 MB/s

Decompress speed: 639.13 MB/s

AES256/GCM speed: 1399.95 MB/s

Verify speed: 266.21 MB/s

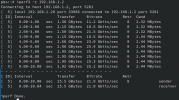

This results in a backup speed of about 180 MByte/s through 2x 10 GBit bonded.

A restore to the same local-zfs (NVMe, where the PBS VM also lives) results in a restore speed of approx. 60 MByte/s.

A restore through 2x 10 GBit bonded to the local-zfs of the second server (also NVMe) results in a restore speed of approx. 72 MByte/s.
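A quick way to see which benchmark values actually changed is to compute the ratio per line. This sketch parses the before/after output quoted above; the parsing helpers are written for illustration and are not part of the PBS tooling.

```python
import re
from typing import Dict

BEFORE = """\
TLS speed: 42.61 MB/s
SHA256 speed: 231.30 MB/s
Compression speed: 373.42 MB/s
Decompress speed: 621.15 MB/s
AES256/GCM speed: 1409.60 MB/s
Verify speed: 263.64 MB/s
"""

AFTER = """\
TLS speed: 274.69 MB/s
SHA256 speed: 264.81 MB/s
Compression speed: 384.39 MB/s
Decompress speed: 639.13 MB/s
AES256/GCM speed: 1399.95 MB/s
Verify speed: 266.21 MB/s
"""


def parse_speeds(text: str) -> Dict[str, float]:
    """Map each benchmark name (e.g. 'TLS') to its speed in MB/s."""
    pattern = re.compile(r"^(.+?) speed:\s*([\d.]+) MB/s$", re.MULTILINE)
    return {name: float(value) for name, value in pattern.findall(text)}


def speedups(before: Dict[str, float], after: Dict[str, float]) -> Dict[str, float]:
    """Ratio after/before for every benchmark present in both runs."""
    return {name: after[name] / before[name] for name in before if name in after}


if __name__ == "__main__":
    for name, ratio in speedups(parse_speeds(BEFORE), parse_speeds(AFTER)).items():
        print(f"{name:12s} x{ratio:.2f}")
```

With these numbers only the TLS line changes substantially (roughly 6.4x); the other values stay within about 15% of their previous results.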

Hmm, something seems off with this.I can confirm that changing the CPU setting of the PBS VM to "host", I could rise the TLS value from 43 MB/s to 275 MB/s:

Before:

Uploaded 52 chunks in 5 seconds.

Time per request: 98437 microseconds.

TLS speed: 42.61 MB/s

SHA256 speed: 231.30 MB/s

Compression speed: 373.42 MB/s

Decompress speed: 621.15 MB/s

AES256/GCM speed: 1409.60 MB/s

Verify speed: 263.64 MB/s

After:

Uploaded 330 chunks in 5 seconds.

Time per request: 15269 microseconds.

TLS speed: 274.69 MB/s

SHA256 speed: 264.81 MB/s

Compression speed: 384.39 MB/s

Decompress speed: 639.13 MB/s

AES256/GCM speed: 1399.95 MB/s

Verify speed: 266.21 MB/s

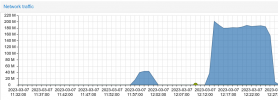

This results in a backup speed of about 180 MByte/s through 2x 10 GBit bonded.

View attachment 47659

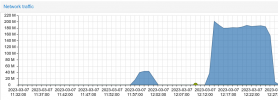

A restore to the same local-zfs (NVMe, where also the PBS VM lives) results into approx 60 MByte/s restore speed.

View attachment 47657

A restore through 2x 10 GBit bonded to local-zfs of second server (also NVMe) results into approx 72 MByte/s restore speed.

- What model of CPU?

- How much memory do PBS and PVE have? (How much is used on both)

- How is the NIC bonded? LACP? Round Robin? etc..

- If you run iperf3 from your PBS host to your PVE hosts, what is the average throughput? (>~18000Mbps is acceptable given a 20Gbps link in LACP)

- When you say NVMe, do you mean consumer QLC, consumer MLC like a Samsung 980 Pro, or enterprise like a Kioxia U2 drive?

- What is the workload on those NVMes? Does the Summary page on your nodes show any io_wait?

- What is the storage configuration? (Mirror with 2, 3, 4, or 6 disks; RAIDZ1/2/3 with how many disks?)

Ideally, you want minimum mirror+spare for low i/o requirements, multiple mirror groups + spares in a pool for high i/o requirements.

Cheers,

Tmanok

Dear Tmanok!

each 2x Xeon(R) CPU E5-2673 v4 @ 2.30GHz each 20x cores

8x cores for PBS virtual machine on node #2 although 4x cores would be enough.

PBS: 8 GB although 4 GB would be enough

RAM used is 50% on each, although not productive and only ZFS cache probably

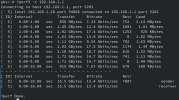

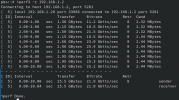

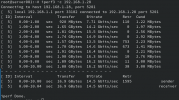

node#1 as iperf3 server / pbs-vm as client on node #2 transfered through bonded 2x 10GBit:

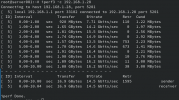

node#2 as iperf3 server / pbs-vm as client on node #2 transfer locally:

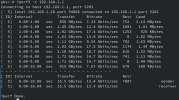

pbs-vm on node #2 as iperf3 server / node#1 as iperf3 client, transfered through bonded 2x 10GBit:

PBS VM with 2 harddrives on zfs-local of node#2, second hdd as zfs-datastore1 (zfs on zfs ... bad idea?)

Thank you!

Falco

I have 2 nodes in cluster with same specs:Hmm, something seems off with this.

- What model of CPU?

each 2x Xeon(R) CPU E5-2673 v4 @ 2.30GHz each 20x cores

8x cores for PBS virtual machine on node #2 although 4x cores would be enough.

PVE: 320 GB each node

- How much memory does PBS and PVE have? (How much is used on both)

PBS: 8 GB although 4 GB would be enough

RAM used is 50% on each, although not productive and only ZFS cache probably

Linux Bond with Round-Robin, works with over 900 MByte/s while migratining a container through "insecure" migration connection.

- How is the NIC bonded? LACP? Round Robin? etc..

- If you run iperf3 from your PBS host to your PVE hosts, what is the average throughput? (>~18000Mbps is acceptable given a 20Gbps link in LACP)

node#1 as iperf3 server / pbs-vm as client on node #2 transfered through bonded 2x 10GBit:

node#2 as iperf3 server / pbs-vm as client on node #2 transfer locally:

pbs-vm on node #2 as iperf3 server / node#1 as iperf3 client, transfered through bonded 2x 10GBit:

One 7,68TB 2,5" Micron 7450 Pro Datacenter Enterprise 24/7 U.3 NVMe 1Mio IOPS 14.000TBW PCIe 4.0 per Proxmox node.

- When you say NVMe, do you mean consumer QLC, consumer MLC like a Samsung 980 Pro, or enterprise like a Kioxia U2 drive?

PBS VM with 2 harddrives on zfs-local of node#2, second hdd as zfs-datastore1 (zfs on zfs ... bad idea?)

Nothing else, only testing, not productive.

- What is the workload on those NVMes? Does the Summary page on your nodes show any io_wait?

Only the single NVMe per node each with Proxmox installed on ZFS-RAID0 through Proxmox ISO installer.

- What is the storage configuration? (RAIDzMirror w/ 2 disks or 3 or 4 or 6, RAIDz1-2-3 with how many disks).

I would be happy to get a restore with about 1 GByte/s as in migration mode.Difficult to tell why you are only getting 180MBps backup and 72MBps restore on a 2500MBps link (20Gbps / 8 = 2.5GBps). Note: You will never reach 2500MBps, more likely, 2250MBps or even 2000MBps given networking, application, and encryption overhead. That's also assuming the link isn't shared and being used by other traffic.

Thank you!

Falco

Hi Falco!

Pardon my delay. Ok there are a few things that are big warning flags to me, but I agree with a few of your thoughts as well. In order, my responses are:

- Thank you for the CPU model. That is a good CPU; despite the slower clock speed, it is fairly ideal for virtualization.
  - 2x E5-2673 v4 with 20 cores each is great for this purpose.
  - 8x vCPUs for your PBS VM should not cause any bottleneck unless you see CPU usage running high during tasks.

- 160GB of RAM is allocated to ARC caching of 2x HDDs?? That's nuts. Below is the general guidance and then my suggestion:
  - ZFS on PVE requires 8GB of RAM for the host, a minimum of 8GB of RAM for caching, plus 1GB per 1TB of raw storage.
  - Your setup is 2x HDD (unknown size), but I assure you it is not 160TB. However, if the 160GB are being used to boost performance instead of being wasted, I wouldn't make any changes until:
    - Host and guest memory usage exceeds 160GB.
    - You notice ZFS ARC memory accidentally pushing things into swap instead of being reclaimed. This happens from time to time although it shouldn't, and it generally means your storage is slow (imo).

- You are using ZFS on the host and then again inside the PBS VM? This is pointless; definitely remove your datastore and rebuild it with XFS or EXT4 if it's a virtual disk. If your VM's root OS disk is ZFS too, then consider reinstalling fresh, or copying /etc/pbs and restoring it to the new VM.

- Your datastore or your guests live on these HDDs? HDDs are unlikely to perform faster than 150MB/s-250MB/s unless you have a large iSCSI disk shelf or a NAS with a fast RAID array. This is your lowest-level limiting factor, if I understand correctly.

- Woah, your networking is very unstable. The minimum is 7.13Gbps (891MBps) and your fastest is 25Gbps (3.125GBps) when PBS communicates with its host hypervisor.
  - Please consider LACP on your switch, or perhaps a direct connection with LACP configured on both bonds. You will see throughput stabilize immediately.

- Those NVMe drives should be quite good. Get back to me about your HDD configuration and that double-ZFS setup, and if the performance is not increasing (even without network changes), I will read the datasheet of that NVMe model more closely.
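The bandwidth conversions used in this discussion (Gbit/s to MByte/s, and how much of the bonded link the backups actually use) can be reproduced with a few lines. This is a plain arithmetic sketch using decimal units; the function names are made up for illustration.

```python
def gbit_to_mbyte_per_s(gbit_per_s: float) -> float:
    """Convert a line rate in Gbit/s to MByte/s (decimal units, 8 bits per byte)."""
    return gbit_per_s * 1000 / 8


def link_utilisation(observed_mbyte_s: float, link_gbit_s: float) -> float:
    """Fraction of the theoretical link rate that the observed throughput uses."""
    return observed_mbyte_s / gbit_to_mbyte_per_s(link_gbit_s)


if __name__ == "__main__":
    print(gbit_to_mbyte_per_s(20))                  # 2500.0 MByte/s for 2x 10 GBit
    print(f"{link_utilisation(180, 20):.1%}")       # backup at 180 MByte/s
    print(f"{link_utilisation(72, 20):.1%}")        # restore at 72 MByte/s
```

The reported backup and restore speeds use only a few percent of the theoretical 20 Gbit/s, which suggests the network link itself is not the limiting factor here.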

Tmanok

Hi Tmanok,

thank you for your reply!

I do not have any HDDs, only one NVMe per node holding everything.

I will change the PBS datastore from a ZFS disk to an EXT4 disk. However, I do not understand why writing to PBS is about 3 times faster than reading from it while restoring, even if I restore to the same NVMe the backup is coming from.

I do not know why RAM shows 50% used in PVE, perhaps because I deactivated swap. The usage slowly rises from boot until it reaches 50% of RAM; I think that is normal for ZFS on Debian. I should change the ARC limit to an absolute maximum of about 10 GB.
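The ARC limit is set in bytes through the `zfs_arc_max` module parameter, usually in `/etc/modprobe.d/zfs.conf`. The sketch below only computes the value and prints the option line; it assumes "10 GB" is meant as 10 GiB, and the helper name is made up for illustration.

```python
def zfs_arc_max_option(gib: int) -> str:
    """Return the modprobe option line limiting the ZFS ARC to `gib` GiB."""
    if gib <= 0:
        raise ValueError("ARC limit must be positive")
    return f"options zfs zfs_arc_max={gib * 1024**3}"


if __name__ == "__main__":
    # Line to place in /etc/modprobe.d/zfs.conf for a 10 GiB ARC limit
    print(zfs_arc_max_option(10))  # options zfs zfs_arc_max=10737418240
```

When the root file system is on ZFS, the initramfs usually has to be regenerated (`update-initramfs -u`) and the node rebooted before the new limit applies.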

Or should I not use ZFS at all and use LVM-Thin instead?

Best regards!

Falco
