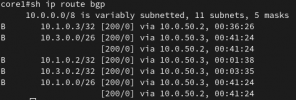

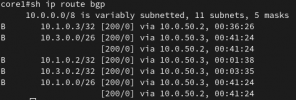

I'm trying to set up an EVPN-based SDN across two Proxmox nodes. Both nodes have EVPN configured, and they have peered and are exchanging prefixes. They also peer over standard BGP with my switch using the BGP controller, and the switch is receiving the subnets. The switch is configured for ECMP, and I can see multiple paths to each route:

- What is weird is that for the /32 routes, the next hop is the PVE host where the VM is *not* hosted. For example, 10.1.0.3/32 should point to 10.0.50.3.
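One way to pin down the next-hop oddity is to compare the switch's routes against where each VM actually runs. Below is a minimal Python sketch. It assumes the route table has been copied out of the switch as (prefix, next hop) pairs. Only 10.1.0.3 and 10.0.50.3 come from this post; 10.0.50.2, 10.1.0.4 and the /24 are hypothetical placeholders. The sketch groups next hops per prefix (more than one means the switch has ECMP paths) and flags any /32 whose next hops do not include the node hosting that VM.

```python
from collections import defaultdict
from ipaddress import ip_address, ip_network

# Hypothetical snapshot of the switch's BGP table as (prefix, next hop) pairs.
# 10.0.50.3 is from the post; 10.0.50.2 stands in for the other PVE node (assumption).
ADVERTISED = [
    ("10.1.0.0/24", "10.0.50.2"),
    ("10.1.0.0/24", "10.0.50.3"),
    ("10.1.0.3/32", "10.0.50.2"),
    ("10.1.0.4/32", "10.0.50.3"),
]

# Hypothetical VM placement: VM address -> underlay IP of the node that hosts it.
VM_HOST = {
    "10.1.0.3": "10.0.50.3",
    "10.1.0.4": "10.0.50.2",
}


def group_next_hops(routes):
    """Collect every next hop per prefix; more than one hop means ECMP."""
    table = defaultdict(set)
    for prefix, next_hop in routes:
        table[ip_network(prefix)].add(ip_address(next_hop))
    return dict(table)


def mismatched_host_routes(table, vm_host):
    """Return (prefix, next hops, expected node) for /32s that miss the hosting node."""
    problems = []
    for prefix, hops in sorted(table.items()):
        if prefix.prefixlen != 32:
            continue  # only host routes map to a single VM
        expected = vm_host.get(str(prefix.network_address))
        if expected is not None and ip_address(expected) not in hops:
            problems.append((str(prefix), sorted(str(h) for h in hops), expected))
    return problems


if __name__ == "__main__":
    table = group_next_hops(ADVERTISED)
    for prefix, hops in sorted(table.items()):
        tag = " (ECMP)" if len(hops) > 1 else ""
        print(f"{prefix} via {sorted(str(h) for h in hops)}{tag}")
    for prefix, hops, expected in mismatched_host_routes(table, VM_HOST):
        print(f"{prefix}: next hop {hops}, but the VM runs on {expected}")
```

With the placeholder data both /32s are flagged, which matches the pattern described above: each host route points only at the node that does not host the VM.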

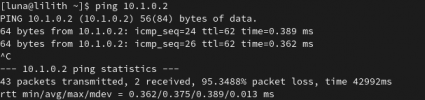

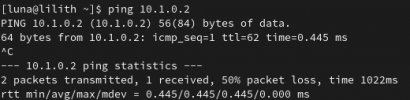

My VMs can ping out to the internet and reach anything on my network, but when they are on two different nodes they can't ping each other. I also can't ping the VMs from my external network. I have disabled the firewall, so that isn't the issue. Here is the zone config:

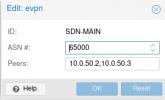

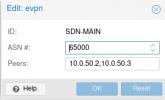

...and here is the EVPN controller config:

...and here is the BGP controller config:

The VNETs are nothing special; source NAT is disabled. I'm not sure where to go from here.
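Since the zone and controller settings are only shown as screenshots, sharing them as text can make them easier to compare across nodes. Below is a minimal Python sketch. It assumes the SDN settings are stored in Proxmox section-config files such as `/etc/pve/sdn/controllers.cfg`, using a `type: id` header followed by indented `key value` lines. The sample contents, controller names and addresses are hypothetical placeholders, not the actual config from this post.

```python
import re
import sys

# Hypothetical controllers.cfg contents (placeholder names and values).
SAMPLE_CONTROLLERS_CFG = """\
evpn: evpnctl
\tasn 65000
\tpeers 10.0.50.2,10.0.50.3

bgp: bgppve1
\tasn 65000
\tnode pve1
\tpeers 10.0.50.1
"""

# A section header is an unindented "type: id" line.
HEADER = re.compile(r"^(\S+):\s*(\S+)\s*$")


def parse_section_config(text):
    """Parse section-config text into {(type, id): {key: value}}."""
    sections = {}
    current = None
    for raw in text.splitlines():
        if not raw.strip() or raw.lstrip().startswith("#"):
            continue  # skip blank lines and comments
        header = HEADER.match(raw)
        if header:
            current = sections.setdefault((header.group(1), header.group(2)), {})
        elif current is not None and raw[0].isspace():
            # Indented option line: first word is the key, the rest is the value.
            key, _, value = raw.strip().partition(" ")
            current[key] = value.strip()
    return sections


if __name__ == "__main__":
    # Pass a path (e.g. the assumed /etc/pve/sdn/controllers.cfg) or fall back to the sample.
    if len(sys.argv) > 1:
        with open(sys.argv[1]) as fh:
            text = fh.read()
    else:
        text = SAMPLE_CONTROLLERS_CFG
    for (kind, name), opts in parse_section_config(text).items():
        print(f"{kind}: {name}")
        for key, value in opts.items():
            print(f"    {key} = {value}")
```

Running it once per node and diffing the output would show whether both nodes see the same ASN and peer lists.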