I've been having this issue for a couple of weeks.

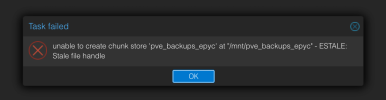

Backups fail with this error.

Rebooting the PBS server usually fixes the issue, but it's very annoying.

Is there something I could do to solve this?

I'm backing up to a local PBS server running in a VM, which is then synced remotely to another PBS instance running in AWS.

R,

xk3tchuPx
