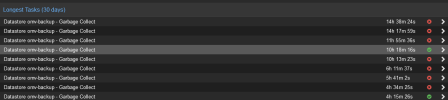

Something's definitely wrong here... I created a new datastore, and just creating it took 42 minutes. Then I started a garbage collection job on the newly created, empty datastore, and it has been running for an hour already.
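As far as I know, creating a PBS datastore mostly consists of pre-creating 65536 chunk subdirectories (`0000` to `ffff`) under `.chunks`, and garbage collection walks that same tree. Both are therefore dominated by metadata round trips over NFS. 42 minutes for 65536 directories is roughly 2520 s / 65536 ≈ 38 ms per `mkdir`. Below is a rough sketch for measuring that latency directly on the share. The mount point passed on the command line and the 1000-directory sample size are assumptions, and `time_mkdirs`/`estimate_total` are hypothetical helpers:

```python
import os
import sys
import tempfile
import time


def time_mkdirs(base: str, count: int) -> float:
    """Create `count` hex-named subdirectories in a fresh temp dir under `base`
    and return the average number of seconds per mkdir."""
    # Work inside a throwaway directory so nothing existing is touched.
    root = tempfile.mkdtemp(prefix="mkdir-bench-", dir=base)
    start = time.perf_counter()
    for i in range(count):
        # 4-hex-digit names, like the (assumed) PBS chunk prefixes 0000..ffff.
        os.mkdir(os.path.join(root, f"{i:04x}"))
    elapsed = time.perf_counter() - start
    # Clean up everything this function created.
    for i in range(count):
        os.rmdir(os.path.join(root, f"{i:04x}"))
    os.rmdir(root)
    return elapsed / count


def estimate_total(per_op: float, total_dirs: int = 65536) -> float:
    """Extrapolate the seconds needed to create `total_dirs` directories."""
    return per_op * total_dirs


if __name__ == "__main__":
    # Hypothetical NFS mount point as first argument; defaults to the local temp dir.
    base = sys.argv[1] if len(sys.argv) > 1 else tempfile.gettempdir()
    per_op = time_mkdirs(base, 1000)
    print(f"{per_op * 1000:.2f} ms per mkdir, "
          f"~{estimate_total(per_op) / 60:.1f} min for 65536 dirs")
```

Running it once against the NFS mount on the PBS host and once against a local disk should show whether per-operation latency, rather than raw bandwidth, is the bottleneck.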

The IO wait on the NFS server has been constantly high for months, but I don't really know what is causing it. When I look at iotop, there is 3 MB/s of read and write traffic, mostly caused by the Storj storage node I am hosting. It is definitely not caused by ZFS either, since I only migrated to ZFS in August; before that it was a mergerFS setup.
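iotop shows per-process throughput, while the IO wait figure is the share of CPU time spent idle with IO outstanding. The kernel exposes it as the `iowait` column of the aggregate `cpu` line in `/proc/stat`. Below is a minimal Linux-only sketch for sampling it on the NFS server. The helper names are made up, and the 1-second interval is arbitrary. Logging it alongside iotop would show whether the high IO wait lines up with the Storj traffic:

```python
import os
import time


def read_cpu_line(path: str = "/proc/stat") -> str:
    """Return the aggregate 'cpu' line from /proc/stat (Linux only)."""
    with open(path) as f:
        for line in f:
            if line.startswith("cpu "):
                return line
    raise RuntimeError("no aggregate 'cpu' line found")


def parse_cpu(line: str) -> tuple[int, int]:
    """Return (iowait, total) jiffies from a /proc/stat 'cpu' line."""
    fields = [int(x) for x in line.split()[1:]]
    # Field order: user nice system idle iowait irq softirq steal [guest guest_nice].
    # guest and guest_nice are already counted in user and nice, so skip them.
    total = sum(fields[:8])
    return fields[4], total


def iowait_percent(before: str, after: str) -> float:
    """Percentage of CPU time spent in iowait between two samples."""
    io0, t0 = parse_cpu(before)
    io1, t1 = parse_cpu(after)
    elapsed = t1 - t0
    return 100.0 * (io1 - io0) / elapsed if elapsed else 0.0


if __name__ == "__main__" and os.path.exists("/proc/stat"):
    first = read_cpu_line()
    time.sleep(1)
    second = read_cpu_line()
    print(f"iowait over 1 s: {iowait_percent(first, second):.1f}%")
```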

When I run some benchmarks on the NFS share from PBS, the performance seems OK:

RANDOM WRITES: WRITE: bw=40.8MiB/s (42.8MB/s), 40.8MiB/s-40.8MiB/s (42.8MB/s-42.8MB/s), io=8192MiB (8590MB), run=200807-200807msec

RANDOM READS: READ: bw=132MiB/s (138MB/s), 132MiB/s-132MiB/s (138MB/s-138MB/s), io=8192MiB (8590MB), run=62053-62053msec

SEQ WRITES: WRITE: bw=214MiB/s (224MB/s), 214MiB/s-214MiB/s (224MB/s-224MB/s), io=30.0GiB (32.2GB), run=143832-143832msec

SEQ READ: READ: bw=283MiB/s (297MB/s), 283MiB/s-283MiB/s (297MB/s-297MB/s), io=30.0GiB (32.2GB), run=108527-108527msec
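fio's `bw` is the total `io` divided by the runtime, so the numbers above can be sanity-checked by hand. For example, 8192 MiB in 200.8 s is about 40.8 MiB/s. Below is a small sketch that re-derives MiB/s from the `io=` and `run=` fields. It assumes the summary-line layout shown above, and `bandwidth_mib_s` is a hypothetical helper, not part of fio:

```python
import re

# Matches the "io=<size><unit> ... run=<min>-<max>msec" part of a fio summary line.
SUMMARY = re.compile(r"io=([\d.]+)(MiB|GiB)\b.*?run=(\d+)-\d+msec")


def bandwidth_mib_s(line: str) -> float:
    """Recompute bandwidth in MiB/s as total io divided by runtime."""
    match = SUMMARY.search(line)
    if match is None:
        raise ValueError(f"not a fio summary line: {line!r}")
    size, unit, run_ms = match.groups()
    mib = float(size) * (1024 if unit == "GiB" else 1)
    return mib / (int(run_ms) / 1000)


if __name__ == "__main__":
    results = {
        "RANDOM WRITES": "io=8192MiB (8590MB), run=200807-200807msec",
        "RANDOM READS": "io=8192MiB (8590MB), run=62053-62053msec",
        "SEQ WRITES": "io=30.0GiB (32.2GB), run=143832-143832msec",
        "SEQ READ": "io=30.0GiB (32.2GB), run=108527-108527msec",
    }
    for name, line in results.items():
        print(f"{name}: {bandwidth_mib_s(line):.1f} MiB/s")
```

For the four runs above, this gives roughly 40.8, 132.0, 213.6 and 283.1 MiB/s, which matches fio's own figures once rounded.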

The sequential speeds do feel a tad slow, but the garbage collection job was running on the array at the same time.