error with cfs lock '****': unable to create image: got lock timeout - aborting command

- Thread starter Kenneth Sean Wirebaugh



no, because this is a cluster-wide lock: the other nodes, and other components besides the Perl code, assume this 60s timeout is in place. If you change it only in the Perl code, multiple tasks can enter the locked section, each thinking it has exclusive access to the shared resource.

Yeah, but in my case I changed this timeout on every cluster node to match. But I see your point. Still, I don't see any other options for me. I have a 3-node system and Ceph doesn't seem recommended for that use case. My ZFS on spinning disks takes a long time to respond.


ProxMox users, developers, et alia:

I am seeing this issue when I try to create a 500GB HD via NFS. Is there a way to see the actual qemu-img command that ProxMox is trying to issue to build the qcow2 file?

Presuming I had the precise command being run and then wanted to run it, how would I then scan and add that device to the appropriate virtual machine?

Stuart

Hi,

if you run into timeouts when allocating an image on a network storage, it's recommended to use pvesm set <storage ID> --preallocation off. This disables the default preallocation of metadata, thus speeding up the image allocation a lot. For such images, QEMU will then allocate the metadata as needed.

Fiona,

Thank you for the response. I will give that a try.

My end state solution was simply to run:

sudo qemu-img create -f qcow2 -o cluster_size=65536 -o extended_l2=off -o preallocation=metadata -o compression_type=zlib -o size=500G -o lazy_refcounts=off -o refcount_bits=16 /mnt/pve/nfs_sata_pool/images/121/vm-121-disk-0.qcow2

Thereafter I just edited the 121.conf file to ensure that the new qcow2 file was set up as an available disk therein.

The VM seems to be running fine now and using the new space allocation absent any appreciable anomalies.
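For the earlier question about scanning and adding the device: a rough sketch of doing the attach step with qm instead of editing 121.conf by hand. The VMID and storage ID are taken from the path above; the scsi1 slot is an assumption, so use whichever slot is free on the VM. The commands are only printed unless RUN is set to an empty string.

```shell
# Assumptions: VMID 121 and storage ID nfs_sata_pool (from the image path above),
# and scsi1 as a free slot on the VM (hypothetical, adjust as needed).
VMID=121
STORAGE=nfs_sata_pool
VOLID="${STORAGE}:${VMID}/vm-${VMID}-disk-0.qcow2"

# Dry run by default: print the commands instead of executing them.
# Run with RUN="" to execute them for real on a Proxmox VE node.
RUN=${RUN-echo}

# Let Proxmox VE scan the storages and register the image as an unused disk.
$RUN qm rescan --vmid "$VMID"

# Attach the image to the VM on the chosen slot.
$RUN qm set "$VMID" --scsi1 "$VOLID"
```

The volume ID format storage:vmid/filename is what a directory-type storage (dir, nfs, cifs) uses for images under images/<vmid>/.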

Thanks in advance and stay safe and healthy.

Stuart


I am having the same issue as OP. When I try "pvesm set <storage ID> --preallocation off", I get: failed: unexpected property preallocation. Any suggestions?

Attachments

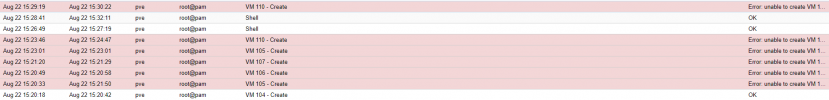

My case was testing a Proxmox 8.0.3 server, creating VMs on a shared CIFS storage, when the following error occurred:

TASK ERROR: unable to create VM 110 - unable to create image: 'storage-Data'-locked command timed out - aborting

I found no evidence of what could be happening.

After the error while creating the VM, no other VM created on the same storage worked.

I went to look at the physical host and saw the error shown in the attachment.

After a few hours of conducting tests trying to identify the error and how I could fix it, I managed to do so today.

The procedure carried out involved selecting the storage that was experiencing a failure and editing it, enabling the advanced option, changing the preallocation mode to off, and saving it. Then, I edited it again and left it on Metadata.

I noticed that many people referred to the "Preallocation" parameter but didn't specify where it should be edited: directly on the storage. The option isn't available for modification when creating a virtual machine through the interface.
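A rough CLI equivalent of the toggle described above, using the pvesm set command quoted earlier in the thread. The storage ID Data is only inferred from the 'storage-Data' lock name in the error message, so treat it as an assumption. As another poster found, the preallocation property may be rejected on some installations. Commands are printed only, unless RUN is set to an empty string.

```shell
# Assumption: the storage ID is "Data", inferred from the 'storage-Data' lock name.
STORAGE=Data

# Dry run by default: print the commands instead of executing them.
# Run with RUN="" to execute them for real on a Proxmox VE node.
RUN=${RUN-echo}

# Switch preallocation off for the storage ...
$RUN pvesm set "$STORAGE" --preallocation off

# ... and, as in the GUI procedure, set it back to metadata afterwards.
$RUN pvesm set "$STORAGE" --preallocation metadata
```

If the goal is just to avoid the timeout on slow network storage, the earlier advice in the thread is to leave the value at off rather than switching back.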

I got this error as well:

trying to acquire cfs lock 'storage-ceph-pool'

while destroying the VM.

Is the solution the same, using the option --preallocation off?

preallocation only has an effect for directory based storages (dir, nfs, cifs, ..)

Any solution for Ceph storage? Have you ever faced a similar issue with Ceph, where the disk gets locked like this?

no, it sounds like there is some issue with the performance of your ceph storage..

Have you checked this similar issue: https://bugzilla.proxmox.com/show_bug.cgi?id=1962

I'm aware of that one, but I don't think anybody started working on implementing that enhancement yet.

Do you think this is an issue with Ceph itself? Maybe using the latest Ceph version would improve things so it no longer gets locked like that? Or maybe there is another thing that I also don't know about.

no, the locking is on our side, and it is currently a global lock per shared storage for certain tasks - the linked issue is about improving that by reducing the scope of the lock.

there are basically two (related) causes/issues:

- lock contention/failure to acquire the lock at all (too many tasks trying to lock the same storage -> solved by reducing the scope of the lock)

- lock timeout/failure to release the lock in time (task in locked context takes too long -> this can only be solved by making the storage faster or doing less things in locked context)
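To make the two cases above a bit more concrete, here is a small local analogy. It is not how the cluster lock is implemented: flock(1) on a temp file merely stands in for the per-storage lock, and the 3 s hold and 1 s wait are made-up numbers in place of the real 60s timeout. Task A is the slow task in locked context, task B is the one that fails to acquire the lock in time.

```shell
# Analogy only: a local flock(1) lock stands in for the cluster-wide storage lock.
LOCK=$(mktemp)

# Task A takes the lock and holds it for 3 s (a slow task in locked context).
flock "$LOCK" sleep 3 &
sleep 0.5   # give task A time to acquire the lock first

# Task B waits at most 1 s for the same lock, then gives up.
if flock -w 1 "$LOCK" true; then
    RESULT="acquired"
else
    RESULT="timeout"
fi
echo "task B: $RESULT"

# Clean up once task A has finished.
wait
rm -f "$LOCK"
```

Making task A faster (faster storage, or less work under the lock) or giving A and B separate locks (a narrower lock scope) would both let task B succeed, which mirrors the two fixes named in the list.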
