Hi!

I'm just learning, so I created a test server.

I couldn't find any information about this error when cloning and moving, so I created this post.

The server is an HP ML150 G6 with 2x E5520 CPUs and 12x2GB RAM.

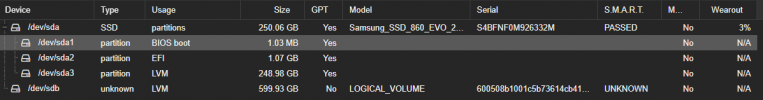

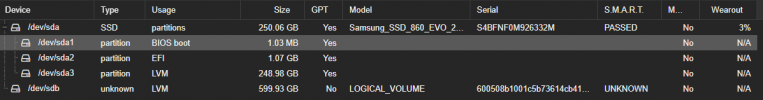

Storage is a hardware RAID with 4x300GB disks, plus 1x 250GB SSD.

Proxmox was installed on the SSD; the default file system was selected during installation.

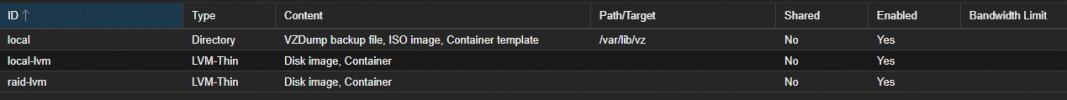

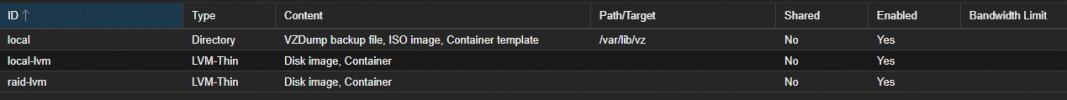

local and local-lvm were created automatically

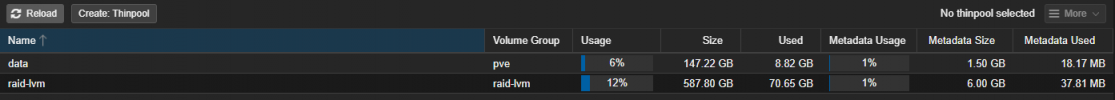

I created raid-lvm myself.

Problem

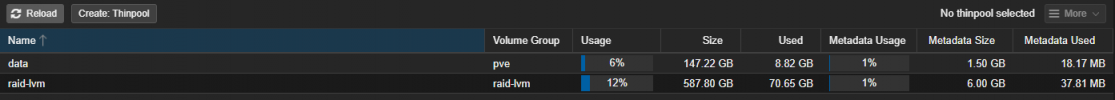

I can create a new VM on local-lvm.

I can clone a VM from local-lvm to raid-lvm, and I can also move a VM from local-lvm to raid-lvm. But I cannot clone a VM from raid-lvm to local-lvm, and I cannot move a VM from raid-lvm to local-lvm either. I freed up local-lvm completely, but cloning or moving still doesn't work.

Also, the first time I tried to clone the VM, local-lvm was almost completely full, and I got an error during cloning saying that the total size of the VM disks was greater than the total size of the local-lvm partition.

After that I completely cleared local-lvm.

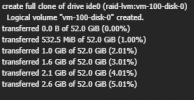

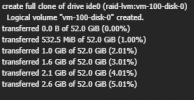

Now, when cloning or moving a VM to local-lvm, the process hangs at about 2.5-3GB transferred.

The process stays in this state until I cancel it.

Syslog:

The errors are the same when cloning and when moving to local-lvm.

But I can still create a VM on local-lvm without problems.

I reinstalled Proxmox and still have the problem; now cloning freezes at 6GB transferred.

I also noticed an IO delay of 60%.

There is not a single VM running on the server.
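For reference, the IO delay figure can be cross-checked from the shell. This is a minimal sketch, assuming the IO delay shown in the Proxmox GUI corresponds to the iowait share of CPU time in `/proc/stat`. The helper `iowait_percent` is hypothetical, and the two sample lines are synthetic values chosen for illustration, not readings from this server.

```python
def iowait_percent(before: str, after: str) -> float:
    """Return the iowait share (in percent) between two 'cpu' lines of /proc/stat."""
    def fields(line: str) -> list[int]:
        name, *values = line.split()
        if name != "cpu":
            raise ValueError(f"expected aggregate 'cpu' line, got {name!r}")
        # user, nice, system, idle, iowait, irq, softirq, steal
        return [int(v) for v in values[:8]]

    delta = [b - a for a, b in zip(fields(before), fields(after))]
    total = sum(delta)
    # iowait is the fifth counter (index 4)
    return 100.0 * delta[4] / total if total else 0.0


# Synthetic samples (assumption, not measured on vmserv1)
sample_1 = "cpu 100 0 50 800 50 0 0 0"
sample_2 = "cpu 120 0 60 970 350 0 0 0"
print(f"iowait: {iowait_percent(sample_1, sample_2):.1f}%")
```

On a live host the two lines would come from reading the first line of `/proc/stat` twice, a few seconds apart, while the clone is hanging.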

Edit 15.09.2023 15:58***********

I just tried to clone a running VM.

It worked!

But the question remains: why doesn't cloning work for a stopped VM or from a template?


Code:

```
dir: local
        path /var/lib/vz
        content iso,vztmpl,backup

lvmthin: local-lvm
        thinpool data
        vgname pve
        content rootdir,images

lvmthin: raid-lvm
        thinpool raid-lvm
        vgname raid-lvm
        content rootdir,images
```
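To compare the two thin pools side by side, the config above can be parsed into a dictionary. This is only a sketch: `parse_storage_cfg` is a hypothetical helper, not a Proxmox tool, and it only handles the simple "type: id" plus "key value" layout seen in this post.

```python
STORAGE_CFG = """\
dir: local
        path /var/lib/vz
        content iso,vztmpl,backup

lvmthin: local-lvm
        thinpool data
        vgname pve
        content rootdir,images

lvmthin: raid-lvm
        thinpool raid-lvm
        vgname raid-lvm
        content rootdir,images
"""


def parse_storage_cfg(text: str) -> dict:
    """Parse 'type: id' sections followed by 'key value' lines."""
    storages = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        head, _, rest = line.partition(" ")
        if head.endswith(":"):
            # Section header, e.g. "lvmthin: local-lvm"
            current = {"type": head[:-1]}
            storages[rest.strip()] = current
        elif current is not None:
            # Property line, e.g. "vgname pve"
            current[head] = rest.strip()
    return storages


cfg = parse_storage_cfg(STORAGE_CFG)
thin = {name: s for name, s in cfg.items() if s["type"] == "lvmthin"}
for name, s in thin.items():
    print(name, s["vgname"], s["thinpool"], s["content"])
```

Both storages come out as `lvmthin` with the same content types, so the storage definitions themselves look symmetric; the difference between the two directions has to be somewhere else.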


Syslog:

Code:

```
Sep 14 13:00:49 vmserv1 pvedaemon[280373]: <root@pam> end task UPID:vmserv1:0005191E:006B7EED:6502D9D1:qmconfig:100:root@pam: OK
Sep 14 13:01:19 vmserv1 pvedaemon[281194]: <root@pam> starting task UPID:vmserv1:000519BD:006B8AE6:6502D9EF:qmmove:100:root@pam:
Sep 14 13:01:19 vmserv1 pvedaemon[334269]: <root@pam> move disk VM 100: move --disk ide0 --storage local-lvm
Sep 14 13:01:20 vmserv1 pvedaemon[1198]: worker 277623 finished
Sep 14 13:01:20 vmserv1 pvedaemon[1198]: starting 1 worker(s)
Sep 14 13:01:20 vmserv1 pvedaemon[1198]: worker 334280 started
Sep 14 13:01:21 vmserv1 pvedaemon[334278]: got inotify poll request in wrong process - disabling inotify
Sep 14 13:01:22 vmserv1 pvedaemon[334278]: worker exit
Sep 14 13:01:46 vmserv1 pve-firewall[1144]: firewall update time (6.841 seconds)
Sep 14 13:01:56 vmserv1 pve-firewall[1144]: firewall update time (7.143 seconds)
Sep 14 13:02:20 vmserv1 pvedaemon[334521]: starting termproxy UPID:vmserv1:00051AB9:006BA2C2:6502DA2C:vncshell::root@pam:
Sep 14 13:02:20 vmserv1 pvedaemon[334280]: <root@pam> starting task UPID:vmserv1:00051AB9:006BA2C2:6502DA2C:vncshell::root@pam:
Sep 14 13:02:21 vmserv1 pvedaemon[281194]: <root@pam> successful auth for user 'root@pam'
Sep 14 13:02:21 vmserv1 login[334524]: pam_unix(login:session): session opened for user root(uid=0) by root(uid=0)
Sep 14 13:02:21 vmserv1 systemd-logind[824]: New session 54 of user root.
Sep 14 13:02:21 vmserv1 systemd[1]: Started session-54.scope - Session 54 of User root.
Sep 14 13:02:21 vmserv1 login[334529]: ROOT LOGIN on '/dev/pts/0'
Sep 14 13:02:28 vmserv1 systemd[1]: session-54.scope: Deactivated successfully.
Sep 14 13:02:28 vmserv1 systemd-logind[824]: Session 54 logged out. Waiting for processes to exit.
Sep 14 13:02:28 vmserv1 systemd-logind[824]: Removed session 54.
Sep 14 13:02:28 vmserv1 pvedaemon[334280]: <root@pam> end task UPID:vmserv1:00051AB9:006BA2C2:6502DA2C:vncshell::root@pam: OK
Sep 14 13:06:47 vmserv1 pveproxy[329152]: worker exit
Sep 14 13:06:47 vmserv1 pveproxy[1211]: worker 329152 finished
Sep 14 13:06:47 vmserv1 pveproxy[1211]: starting 1 worker(s)
Sep 14 13:06:47 vmserv1 pveproxy[1211]: worker 336094 started
Sep 14 13:07:52 vmserv1 pveproxy[329111]: worker exit
Sep 14 13:07:52 vmserv1 pveproxy[1211]: worker 329111 finished
Sep 14 13:07:52 vmserv1 pveproxy[1211]: starting 1 worker(s)
Sep 14 13:07:52 vmserv1 pveproxy[1211]: worker 336444 started
```
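To tie the log lines to the move task, the task ID (UPID) can be decoded. This is a minimal sketch, assuming the layout `UPID:node:pid:pstart:starttime:type:id:user:` with the pid and start time encoded as hex; `decode_upid` is a hypothetical helper, not part of any Proxmox API.

```python
from datetime import datetime, timezone


def decode_upid(upid: str) -> dict:
    """Split a task UPID into its fields (field layout is an assumption)."""
    parts = upid.split(":")
    if len(parts) < 8 or parts[0] != "UPID":
        raise ValueError(f"not a UPID: {upid!r}")
    _, node, pid_hex, pstart_hex, start_hex, task_type, task_id, user = parts[:8]
    return {
        "node": node,
        "pid": int(pid_hex, 16),        # worker process ID
        "pstart": int(pstart_hex, 16),  # process start counter, left undecoded
        "starttime": datetime.fromtimestamp(int(start_hex, 16), tz=timezone.utc),
        "type": task_type,
        "id": task_id,
        "user": user,
    }


# UPID of the move task, copied from the syslog excerpt
task = decode_upid("UPID:vmserv1:000519BD:006B8AE6:6502D9EF:qmmove:100:root@pam:")
print(task["type"], task["id"], task["pid"], task["starttime"].isoformat())
```

The decoded pid is 334269, which matches the `pvedaemon[334269]` "move disk" line, and the start time of 10:01:19 UTC corresponds to 13:01:19 MSK in the log. The excerpt contains no matching "end task" line for this `qmmove` UPID, which fits the observation that the move never finishes on its own.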


Code:

```
root@vmserv1:~# systemctl status pveproxy
● pveproxy.service - PVE API Proxy Server
     Loaded: loaded (/lib/systemd/system/pveproxy.service; enabled; preset: enabled)
     Active: active (running) since Wed 2023-09-13 17:26:56 MSK; 20h ago
    Process: 1206 ExecStartPre=/usr/bin/pvecm updatecerts --silent (code=exited, status=0/SUCCESS)
    Process: 1209 ExecStart=/usr/bin/pveproxy start (code=exited, status=0/SUCCESS)
    Process: 111931 ExecReload=/usr/bin/pveproxy restart (code=exited, status=0/SUCCESS)
   Main PID: 1211 (pveproxy)
      Tasks: 4 (limit: 23953)
     Memory: 187.4M
        CPU: 8min 34.435s
     CGroup: /system.slice/pveproxy.service
             ├─ 1211 pveproxy
             ├─345065 "pveproxy worker"
             ├─345514 "pveproxy worker"
             └─348296 "pveproxy worker"

Sep 14 13:48:12 vmserv1 pveproxy[1211]: starting 1 worker(s)
Sep 14 13:48:12 vmserv1 pveproxy[1211]: worker 345065 started
Sep 14 13:50:24 vmserv1 pveproxy[340900]: worker exit
Sep 14 13:50:24 vmserv1 pveproxy[1211]: worker 340900 finished
Sep 14 13:50:24 vmserv1 pveproxy[1211]: starting 1 worker(s)
Sep 14 13:50:24 vmserv1 pveproxy[1211]: worker 345514 started
Sep 14 14:03:44 vmserv1 pveproxy[341425]: worker exit
Sep 14 14:03:44 vmserv1 pveproxy[1211]: worker 341425 finished
Sep 14 14:03:44 vmserv1 pveproxy[1211]: starting 1 worker(s)
Sep 14 14:03:44 vmserv1 pveproxy[1211]: worker 348296 started
```
