
I created a ZFS RAIDZ storage. What must I do to make this storage support qcow2?

First, why do you want this? Having CoW on top of CoW is bad practice.

If you still want it, I would create a new dataset for your files and configure it as a directory storage in PVE. Did you create the zpool by hand, or was it the installer?

If it was the installer, just create a dataset with zfs create rpool/vms and add it to your PVE storage at Datacenter -> Storage -> Add -> Directory.
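For reference, a directory storage added through that dialog ends up as a section in /etc/pve/storage.cfg, in the same format as the storage.cfg output posted later in this thread. A minimal sketch, assuming the dataset rpool/vms is mounted at /rpool/vms (the default when rpool itself is mounted at /rpool, as on a standard installer setup); the storage ID vms and the content list are hypothetical choices:

```
# Hypothetical storage ID "vms"; assumes rpool/vms is mounted at /rpool/vms
dir: vms
	path /rpool/vms
	content images,iso
	is_mountpoint yes
	shared 0
```

The GUI dialog does not set is_mountpoint; it is an optional extra (for example via pvesm set vms --is_mountpoint yes) that makes PVE treat the storage as offline when nothing is mounted at that path, instead of writing images into an empty directory on the root filesystem.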

My reason for CoW is to be able to take snapshots. This is a lab environment and I will be testing things out. If there is a more efficient way, I would consider it.



Also, why do you say CoW on CoW? I just initialized this ZFS pool. There is nothing on it, and I can start afresh if my approach is not the right one.


ZFS already supports snapshots, but only linear ones, not tree-like ones. You can only roll back to a grandparent snapshot if you remove the parent. With qcow2, you can switch between any snapshots without destroying anything.

On "why do you say CoW on CoW?": ZFS is CoW, and qcow2 is also CoW.

On starting afresh: I cannot tell whether your approach is the wrong one, because I don't know what you want to do.

Thanks @LnxBil for your assistance. Greatly appreciated.

I am very new to Proxmox, and I still struggle to figure out whether an option is under Datacenter or under the PVE node itself. I come from VMware Professional, and I am used to snapshots: I want to try something out, and if I screw up, I can revert.

My use case is a couple of servers (PMS on Ubuntu) and a generic server running things like Syncthing constantly, plus maybe two more for actual testing and experimenting. I don't have any critical apps or needs, and I will set up a backup strategy once I sort out this stage (the Synology is for that purpose).

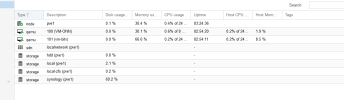

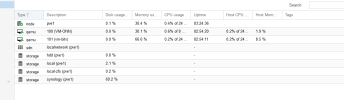

The screenshots show things as they stand now. I installed Proxmox on a mirrored ZFS pool. This is reflected in local and local-zfs (both were created by Proxmox).

Yesterday I discovered the snapshot limitation, but after researching the topic I figured out that I could move the machine's disk and convert it to qcow2 to gain snapshots.

Today I added a RAIDZ pool (shown as hdd). My idea is to keep the less-used VMs there. Since I am comfortable with snapshots, I gravitate to this functionality.

I will try out the instructions you sent. When I read that qcow2 is so strongly supported in Proxmox, I imagined this functionality would be enabled out of the box.


qcow2 is the native format of the hypervisor PVE uses, KVM/QEMU (qcow2 = QEMU Copy-On-Write), yet it is not always the best choice; as with everything, it depends.

ZFS is the only software RAID that PVE supports (with paid support, that is). Therefore, it is the way to install PVE on most platforms that do not come with a hardware RAID controller, or where a hardware RAID controller is not wanted. An overview of the available and supported storage types is here.

As long as you don't need a tree-like snapshot setup and only want to use linear snapshots, ZFS will work just fine.

What actual problem did you have snapshotting your VM on one of the ZFS pools?

I was finding it difficult to explain my situation well, so I decided to make a video: Proxmox Video.

Essentially, I need hdd to support a directory storage so that it can hold qcow2 images (as is the case with rpool). How do I do that?

------

I normally take snapshots when I am doing heavy tinkering with the registry. It is quick and dirty.

For example, I have a solution running on Windows Server 2016 that I want to update to Windows Server 2022 someday. It consists of multiple components working together. As I make progress, I take more snapshots, and if something goes wrong, I can revert and branch out from the point where I think everything was OK. Performance is not my goal.


Incidentally, I found this video, which answered my second question: https://youtu.be/m0dY4OJ9FWk?si=ge4aRoy6pzgzqiq1

Hope it helps others.

In a few days I will start looking at the backup process. With VMware, it was as simple as copying the machine's directory while it was powered off.

Thanks @LnxBil for your time. Your knowledge and depth are above my level sometimes, but your dedication is second to none.


Hi, you still have not answered my question about the problems you have with ZFS.

Here is a ZFS-based machine I have, and it supports snapshots.

The snapshot option on RAW disks was greyed out. This means that I could not create snapshots.

When I researched the topic, I concluded that snapshots on raw disks are not possible and that I had to go to qcow2 (there could have been other formats). On the storage created automatically during the Proxmox install, when I moved the disk to a directory storage, the qcow2 conversion became available, and once the move was completed, I could take a snapshot.

My question today was how to enable directory functionality on a new ZFS pool I had just created. The created cluster did not have directory functionality at inception.

The YouTube video I shared in my post before this one demonstrated how to do it.

Have a look at the video I took and shared in the post. I annotated it.

Good thing ZFS is not raw; it's ZFS, so snapshots are allowed. They always have been.
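The underlying point is that snapshot support depends on the storage type as well as the disk format: on a ZFS pool storage, a VM disk is a raw zvol and ZFS itself takes the snapshot, whereas on a directory storage a raw file cannot be snapshotted and qcow2 is needed. A minimal POSIX shell sketch of that rule, assuming a simplified version of the capability table in the PVE storage documentation (details can change between releases); the function name snapshot_capable is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: succeeds if a VM disk on storage type $1 with disk
# format $2 can be snapshotted. The mapping is a simplified assumption based
# on the PVE storage documentation, not an exhaustive list.
snapshot_capable() {
    storage_type=$1
    disk_format=$2
    case "$storage_type" in
        # Storages that snapshot the (raw) volume themselves
        zfspool|lvmthin|rbd)
            return 0 ;;
        # File-level storages: only a qcow2 image carries its own snapshots
        dir|nfs|cifs|glusterfs)
            [ "$disk_format" = "qcow2" ]
            return ;;
        # Anything else is treated as "no snapshots" in this sketch
        *)
            return 1 ;;
    esac
}

# The three situations discussed in this thread
for combo in "zfspool raw" "dir raw" "dir qcow2"; do
    # $combo is deliberately unquoted so it splits into two arguments
    if snapshot_capable $combo; then
        echo "$combo: snapshots available"
    else
        echo "$combo: no snapshots"
    fi
done
```

Run as is, it prints "zfspool raw: snapshots available", "dir raw: no snapshots" and "dir qcow2: snapshots available", which matches the greyed-out snapshot button on raw disks in a directory storage and the working snapshots on ZFS.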

I have no idea what you did and didn't do, so please share the output of the commands lsblk and cat /etc/pve/storage.cfg in code tags.

Cluster?

I did have a look, and I still have questions; it does not show the relevant parts.

I will try to make a commented video tomorrow. I might not get the terminology right, and I am more than happy to share output.

I'm very new to Proxmox, and if I can learn from it, I'm game.

I have a list of questions on my Proxmox to-do list.


On "ZFS is not raw, it's ZFS, so snapshots are allowed": I am just discovering this information.

Here is the output of lsblk and cat /etc/pve/storage.cfg:

```
root@pve1:~# lsblk
NAME        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINTS
sda           8:0    0  3.6T  0 disk
├─sda1        8:1    0  3.6T  0 part
└─sda9        8:9    0    8M  0 part
sdb           8:16   0  3.6T  0 disk
├─sdb1        8:17   0  3.6T  0 part
└─sdb9        8:25   0    8M  0 part
sdc           8:32   0  3.6T  0 disk
├─sdc1        8:33   0  3.6T  0 part
└─sdc9        8:41   0    8M  0 part
zd0         230:0    0    4M  0 disk
nvme1n1     259:0    0  1.8T  0 disk
├─nvme1n1p1 259:1    0 1007K  0 part
├─nvme1n1p2 259:2    0    1G  0 part
└─nvme1n1p3 259:3    0  1.8T  0 part
nvme0n1     259:4    0  1.8T  0 disk
├─nvme0n1p1 259:5    0 1007K  0 part
├─nvme0n1p2 259:6    0    1G  0 part
└─nvme0n1p3 259:7    0  1.8T  0 part
root@pve1:~# cat /etc/pve/storage.cfg
dir: local
	path /var/lib/vz
	content backup,iso,vztmpl,images
	shared 0

zfspool: local-zfs
	pool rpool/data
	content images,rootdir
	sparse 1

cifs: synology
	path /mnt/pve/synology
	server 192.168.16.253
	share proxmox
	content rootdir,snippets,iso,backup,vztmpl,images
	prune-backups keep-all=1
	username hmeservice

zfspool: hdd
	pool hdd
	content rootdir,images
	mountpoint /hdd
	nodes pve1

dir: hdd-dir
	path /hdd/data
	content iso,rootdir,snippets,images,backup,vztmpl
	prune-backups keep-all=1
	shared 0
```
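In this storage.cfg, the type in each section header decides whether qcow2 is offered: local, synology and hdd-dir are file-level storages (dir, cifs), while local-zfs and hdd are ZFS pool storages that hold raw zvols. A small shell sketch that prints this per storage, assuming the simplified rule that dir, nfs, cifs and glusterfs accept qcow2 and every other type is raw-only; report_formats is a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: reads storage.cfg-style text on stdin and prints
# "<storage-id> <type> <qcow2-capable|raw-only>" for each storage section.
# Assumption (simplified): dir, nfs, cifs and glusterfs are file-level and
# accept qcow2; every other type is treated as raw-only.
report_formats() {
    # Section headers look like "<type>: <id>" at the start of a line;
    # indented property lines do not match the pattern and are skipped.
    awk -F': *' '
        /^[a-z]+: / {
            fmt = ($1 ~ /^(dir|nfs|cifs|glusterfs)$/) ? "qcow2-capable" : "raw-only"
            print $2, $1, fmt
        }
    '
}

# Only read the real file when it exists, i.e. when run on a PVE node
if [ -r /etc/pve/storage.cfg ]; then
    report_formats < /etc/pve/storage.cfg
fi
```

On the node above, this would list local, synology and hdd-dir as qcow2-capable and local-zfs and hdd as raw-only; the raw-only ZFS storages still support snapshots through ZFS itself.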

Today both ZFS pools (the mirror and the RAIDZ) support snapshots.