I can confirm that, in my case, removing iothreads from the KVM config does not prevent the VM freeze.

What's more, backing up my NAS VM to PBS worked for two days with no issues, then suddenly stopped working. So the problem is sporadic.

What's even more interesting is that backing up this NAS VM directly to a NAS share, without PBS, used to have the same problem (freeze), but it works now.

So it's a total crapshoot for me: sometimes backup without PBS works, sometimes backup with PBS works.

Sorry, I should clarify: my case may be different from others'.

I am backing up a NAS VM to either:

1. a network share from the same NAS VM; or

2. a PBS instance in an LXC that uses a network share from the same NAS VM.

I know, I know, this is not best practice. But my home lab is not an enterprise setup, and I can live with a little downtime.

(I prefer the lower power consumption of a single PVE host, as long as I have one good backup, since the NAS VM rarely changes.)

What I found is that there is a race condition. I'd expect the backup to create a VM snapshot, unfreeze the VM right away, and then begin backing up the snapshot. By then the VM has been thawed, so the backup should proceed without problems.

Instead, I believe the backup freezes the VM and contacts the PBS or NAS at the same time. If the NAS VM is frozen and the PBS or NAS share is unreachable as a result, the whole backup fails.

However, it doesn't fail every time. In fact, backing up the NAS VM directly to the NAS used to fail every time, but now it doesn't, whereas backing up the NAS VM to PBS (with the NAS as its backend) now fails every time after working fine for two days.

I believe the backup process could be improved to eliminate the race condition. The VM should be frozen and thawed first, resulting in a snapshot ready to be backed up. Only then should the backup process contact the NAS, directly or via PBS. I think this would be a better design.
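To make the two orderings concrete, here is a toy model in Python. It is only a sketch of my theory, not Proxmox's actual code: the class and function names (`NasVm`, `connect_to_target`, `suspected_order`, `proposed_order`) are hypothetical, and it assumes the backup target (PBS datastore or NAS share) cannot answer while the NAS VM is frozen. The model is deterministic, so it always fails in the suspected order, whereas what I actually see is sporadic.

```python
"""Toy model (NOT Proxmox code) of the two backup orderings discussed above.

Assumption: the backup target (PBS datastore or NAS share) lives on the same
NAS VM, so it cannot respond while that VM's filesystems are frozen.
All names here are hypothetical.
"""


class NasVm:
    """Hypothetical stand-in for the NAS VM that also hosts the backup target."""

    def __init__(self) -> None:
        self.frozen = False

    def fs_freeze(self) -> None:
        self.frozen = True

    def fs_thaw(self) -> None:
        self.frozen = False

    def target_reachable(self) -> bool:
        # Assumption: a frozen NAS VM cannot serve its share / PBS backend.
        return not self.frozen


def connect_to_target(vm: NasVm) -> None:
    """Hypothetical connect step; fails if the target VM is frozen."""
    if not vm.target_reachable():
        raise TimeoutError("backup connect failed: target not reachable")


def suspected_order(vm: NasVm) -> str:
    """What I believe happens: connect while the guest is still frozen."""
    vm.fs_freeze()
    try:
        connect_to_target(vm)  # the target lives on the frozen VM
        return "ok"
    except TimeoutError as err:
        return f"failed: {err}"
    finally:
        vm.fs_thaw()  # thaw only after the connect attempt returns


def proposed_order(vm: NasVm) -> str:
    """Proposed: freeze, snapshot, thaw, and only then contact the target."""
    vm.fs_freeze()
    # (the point-in-time snapshot would be taken here, while frozen)
    vm.fs_thaw()
    try:
        connect_to_target(vm)  # the NAS VM is running normally again
        return "ok"
    except TimeoutError as err:
        return f"failed: {err}"


if __name__ == "__main__":
    print("suspected:", suspected_order(NasVm()))
    print("proposed: ", proposed_order(NasVm()))
```

In this model the only difference between the two functions is whether the thaw happens before or after the connect; that is the whole point of the design change I'm suggesting.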

BTW, backing up the PBS LXC to that same PBS never fails. In this case, I believe no freeze/thaw command is issued; a snapshot must be made that doesn't race with connecting to the PBS LXC.

=================================================

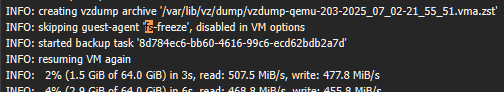

This is what the failed backup job looks like:

INFO: starting new backup job: vzdump 104 --mode snapshot --node pve3 --notes-template '{{guestname}} test' --notification-mode auto --storage pbsRepo --remove 0

INFO: Starting Backup of VM 104 (qemu)

INFO: Backup started at 2024-02-12 09:49:45

INFO: status = running

INFO: VM Name: fs

INFO: include disk 'scsi0' 'local-lvm:vm-104-disk-0' 32G

INFO: backup mode: snapshot

INFO: ionice priority: 7

INFO: creating Proxmox Backup Server archive 'vm/104/2024-02-12T14:49:45Z'

INFO: issuing guest-agent 'fs-freeze' command

INFO: issuing guest-agent 'fs-thaw' command

ERROR: VM 104 qmp command 'backup' failed - backup connect failed: command error: http upgrade request timed out

INFO: aborting backup job

INFO: resuming VM again

ERROR: Backup of VM 104 failed - VM 104 qmp command 'backup' failed - backup connect failed: command error: http upgrade request timed out

INFO: Failed at 2024-02-12 09:51:45

INFO: Backup job finished with errors

INFO: notified via target `mail-to-root`

TASK ERROR: job errors

This is what the failed job looks like on PBS:

2024-02-12T14:51:45+00:00: starting new backup on datastore 'pbsRepo' from ::ffff:192.168.1.99: "ns/pve3/vm/104/2024-02-12T14:49:45Z"

2024-02-12T14:51:45+00:00: backup failed: connection error: Broken pipe (os error 32)

2024-02-12T14:51:45+00:00: removing failed backup

2024-02-12T14:51:45+00:00: TASK ERROR: connection error: Broken pipe (os error 32)
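Lining the two logs up: the job started at 09:49:45 local time and failed at 09:51:45, and PBS logged "starting new backup" at 14:51:45 UTC. Assuming the PVE host is at UTC-5 (which the archive name `vm/104/2024-02-12T14:49:45Z` for a 09:49:45 start suggests), PBS only saw the backup request at the exact second PVE gave up, about two minutes after the start. That would fit the idea that the connection was stuck the whole time. A small sketch of the arithmetic; the UTC-5 offset is my assumption:

```python
from datetime import datetime, timedelta, timezone

# Timestamps copied from the PVE and PBS logs above.
# Assumption: the PVE host's local time is UTC-5, inferred from the archive
# name 'vm/104/2024-02-12T14:49:45Z' for a job that started at 09:49:45 local.
LOCAL_TZ = timezone(timedelta(hours=-5))

pve_start = datetime(2024, 2, 12, 9, 49, 45, tzinfo=LOCAL_TZ)    # "Backup started at"
pve_failed = datetime(2024, 2, 12, 9, 51, 45, tzinfo=LOCAL_TZ)   # "Failed at"
pbs_start = datetime.fromisoformat("2024-02-12T14:51:45+00:00")  # PBS "starting new backup"

# How long PVE waited before reporting "http upgrade request timed out".
waited = (pve_failed - pve_start).total_seconds()

print("PVE waited:", waited, "s")
print("PBS saw the request at PVE local time:", pbs_start.astimezone(LOCAL_TZ).time())
# Aware datetimes compare by absolute instant, regardless of time zone.
print("PBS start == PVE failure:", pbs_start == pve_failed)
```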