Hello,

I have created a VM template using Packer. The template works properly: if I use the PVE GUI, I can apply the prepared cloud-init config and deploy it without problems. However, when I use Terraform with the Telmate/proxmox provider, version 3.0.1-rc1, the full clone is created without issue, but it always ends up with an unused disk, the original disk, attached to the clone. I have fiddled with it for some time but cannot get it to work properly. If I manually attach that cloned disk, everything works, but then that is not automation. Any help?

Here is the config:

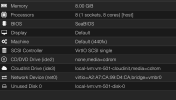

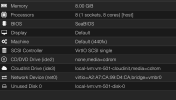


Bash:

# Create the wef VM
resource "proxmox_vm_qemu" "wef" {
  name        = "wef"
  target_node = "pxh1"
  tags        = "test"
  vmid        = "501"

  # Clone from windows 2k19-cloudinit template
  clone   = "ubuntu-server-focal-template"
  os_type = "cloud-init"

  # Cloud init options
  cloudinit_cdrom_storage = "local-lvm"
  ipconfig0               = "ip=dhcp"

  memory  = 8192
  agent   = 1
  sockets = 1
  cores   = 8

  # Set the boot disk parameters
  bootdisk = "virtio"
  scsihw   = "virtio-scsi-single"

  disk {
    slot    = 0
    size    = "40G"
    type    = "virtio"
    storage = "local-lvm"
    ssd     = 1
    discard = "on"
  } # end disk

  # Set the network
  network {
    model  = "virtio"
    bridge = "vmbr0"
  } # end first network block

  # Ignore changes to the network
  ## MAC address is generated on every apply, causing
  ## TF to think this needs to be rebuilt on every apply
  lifecycle {
    ignore_changes = [
      network
    ]
  } # end lifecycle

  # Cloud-init params
  #ipconfig0 = "ip=192.168.2.100/24,gw=192.168.2.1"
  #ssh_user = "ubuntu"
  #sshkeys = var.ssh_keys
} # end proxmox_vm_qemu wef resource declaration

And the result: