Hi everyone,

This is just in case someone runs into the same problem while troubleshooting.

I am not asking for help, though I’m open to explanations if anyone has one.

Roughly two years ago, I created a new Proxmox host with VMs.

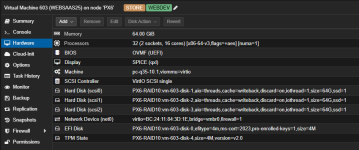

Two weeks ago, I read about CPU types—something I had never looked into before—and realized I had been using the default x86-64-v2.

Since I don’t run a cluster, I thought it was time to switch to the host CPU type to take advantage of that sweet ZFS speed.

Unfortunately, setting the CPU type to host made some Windows 11 VMs extremely slow: clicks in Explorer and browsing network drives became sluggish.

Strangely, a newer Windows 11 VM (created a few months ago) was unaffected.

I didn’t notice any difference in performance for the Linux VMs.

Switching back to x86-64-v2 made everything speedy again.

Setting it to x86-64-v4 didn’t work for my Intel Xeon E-2236 (which seems odd), but x86-64-v3 worked perfectly fine.
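A likely explanation for the x86-64-v4 failure: that level requires AVX-512, and as far as I know the Coffee Lake-based Xeon E-2236 supports AVX2 but not AVX-512, so v3 would be the highest level it can offer. Below is a minimal Python sketch for checking this on the host. The flag lists are my own mapping of the x86-64 microarchitecture levels onto the names Linux uses in /proc/cpuinfo (for example, LZCNT shows up as `abm` and SSE3 as `pni`), and the function names are made up for illustration, so treat it as a rough check rather than what QEMU actually does.

```python
from pathlib import Path

# Flags per x86-64 level, using /proc/cpuinfo names (assumed mapping).
LEVEL_FLAGS = {
    1: {"lm", "cmov", "cx8", "fpu", "fxsr", "mmx", "syscall", "sse", "sse2"},
    2: {"cx16", "lahf_lm", "popcnt", "pni", "sse4_1", "sse4_2", "ssse3"},
    3: {"avx", "avx2", "bmi1", "bmi2", "f16c", "fma", "abm", "movbe", "xsave"},
    4: {"avx512f", "avx512bw", "avx512cd", "avx512dq", "avx512vl"},
}


def highest_level(flags):
    """Return the highest x86-64 level whose flags (and all lower levels') are present."""
    level = 0
    for candidate in sorted(LEVEL_FLAGS):
        # Levels are cumulative: stop at the first level with a missing flag.
        if not LEVEL_FLAGS[candidate] <= flags:
            break
        level = candidate
    return level


def read_host_flags(path="/proc/cpuinfo"):
    """Read the flag set of the first CPU listed; empty set if unavailable."""
    try:
        text = Path(path).read_text()
    except OSError:
        return set()
    for line in text.splitlines():
        if line.startswith("flags"):
            return set(line.split(":", 1)[1].split())
    return set()


if __name__ == "__main__":
    # Prints e.g. "x86-64-v3"; "x86-64-v0" means the flags could not be read.
    print(f"x86-64-v{highest_level(read_host_flags())}")
```

Run it on the Proxmox host itself, not inside a VM, since a guest only sees the flags its configured CPU type exposes.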

So, if your Windows VMs are acting up, try experimenting with different CPU types.
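If you prefer the shell over the web UI for this kind of experimenting, the CPU type can also be set with `qm set <vmid> --cpu <type>`. Here is a small Python sketch that only prints the commands for a few candidate types so you can review them before running anything. The VM ID 100 and the helper name are placeholders I picked for illustration.

```python
import shlex


def qm_set_cpu_command(vmid, cpu_type):
    """Build (but do not run) the qm command that sets a VM's CPU type."""
    return shlex.join(["qm", "set", str(vmid), "--cpu", cpu_type])


if __name__ == "__main__":
    # Hypothetical VM ID; replace it with the ID of the VM you want to test.
    for cpu_type in ("x86-64-v2", "x86-64-v3", "host"):
        print(qm_set_cpu_command(100, cpu_type))
```

As far as I understand, the new CPU type only takes effect after the VM has been fully shut down and started again; a reboot from inside the guest is not enough.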

BTW: I also just ran some PVE updates that did not ask for a reboot but affected QEMU. No idea if that is somehow related.
