Quote: Same problem here, all my containers are silently taking more memory in cache until oom-kill kicks in. This happens in all my containers but in pve6. In my case, restarting systemd-journald did not help, however reducing the journal's log size increased the available memory by the same size I reduced the log. (But the memory keeps decreasing, so it is just a matter of time, I just gained time)

I've seen other posts around the internet about people hunting for the culprit and finding memory leaks in various apps. If it's not your tmpfs (and there are other tmpfs users other than systemd-journald, note), then it's something else. Keep looking.

Continuously increasing memory usage until oom-killer kill processes

- Thread starter sal


Quote: This misleading issue is reporting it as buffers/cache instead of as "used". If you google it, there are dozens of posts by others misled by this and trying to flush their caches, which will not help at all. "Used" would be a fairer category to put it in, but I'm guessing that because of the way the kernel structures such things, that may be harder to code. Nonetheless, it's misleading.

lxcfs doesn't do anything here - this is literally how the kernel reports that kind of memory usage (as shared memory!):

https://www.kernel.org/doc/html/latest/filesystems/tmpfs.html

basically the only thing that lxcfs does "special" is to handle total memory and swap according to the version of cgroups employed, the rest comes directly from how the kernel reports memory usage.

Quote: Is there a way to restrict tmpfs size in containers?

see above - you have to set this up inside the container (where the mounting actually happens)
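To see which tmpfs mounts exist inside a container and whether they carry an explicit size limit, something like the following could be used. This is only a sketch: the function name `list_tmpfs` is made up for illustration, and it assumes the usual /proc/mounts layout (device, mount point, type, options). A mount reported as "default" has no size= option, in which case the kernel default from the tmpfs documentation linked above applies.

```shell
#!/bin/sh
# list_tmpfs [MOUNTS_FILE]
# Print each tmpfs mount point together with its size= option.
# Reads /proc/mounts by default; run it inside the container.
list_tmpfs() {
    awk '$3 == "tmpfs" {
        size = "default"            # no explicit size= option on this mount
        n = split($4, opts, ",")
        for (i = 1; i <= n; i++)
            if (opts[i] ~ /^size=/) size = substr(opts[i], 6)
        print $2, size
    }' "${1:-/proc/mounts}"
}

# Hypothetical follow-up (needs root inside the container, path and
# size are examples only):
#   mount -o remount,size=256M /dev/shm
```

Calling `list_tmpfs` without arguments prints one line per tmpfs mount. The remount in the trailing comment only lasts until the next container restart.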


I don't understand? How does this fix the memory issues, like this:

https://forum.proxmox.com/threads/lxc-and-tvheadend-continuity-counter-error.97726/#post-449299

The problem exists since Proxmox 7.0, Version 6.4.13 was fine.

Quote: I wrote above what you need to do - restrict the tmpfs sizes where they get mounted (i.e., inside the container). tmpfs ARE memory, so they are accounted as such.

The buffer/cache grows and grows while I'm recording to the hard disk. When free = 0 the recording stops. The tmpfs doesn't increase its size, so I think it doesn't help to restrict it?

free -m

Code:

root@ct-tvh-02:~# free -m

total used free shared buff/cache available

Mem: 16384 559 0 0 15824 15824

Swap: 512 0 512df -h


I seem to recall that tmpfs is counted as buffers/cache (but I can't find the original reference now). Please try writing a large file to a tmpfs and see if it increases. Apologies for not having the time to try it myself right now.

well, there are other things accounted as buffered or cached (like, the literal page cache) which might be the culprit in your case. it might help if you give more details about your setup, such as:

free -m

Code:root@ct-tvh-02:~# free -m total used free shared buff/cache available Mem: 16384 559 0 0 15824 15824 Swap: 512 0 512

df -h

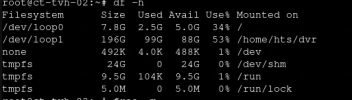

View attachment 39328

- pveversion -v

- storage.cfg

- container config

- what happens in the container

- observed result

- expected result

Hi, sorry for the late response, I was very busy the last month.


Here's the output. Like I've mentioned before the problem exists since I've upgraded to version 7.

1.

Code:

pveversion -v

proxmox-ve: 7.2-1 (running kernel: 5.15.39-1-pve)

pve-manager: 7.2-7 (running version: 7.2-7/d0dd0e85)

pve-kernel-helper: 7.2-10

pve-kernel-5.15: 7.2-6

pve-kernel-5.4: 6.4-12

pve-kernel-5.15.39-1-pve: 5.15.39-1

pve-kernel-5.4.162-1-pve: 5.4.162-2

pve-kernel-5.4.106-1-pve: 5.4.106-1

ceph-fuse: 14.2.21-1

corosync: 3.1.5-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown: residual config

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve1

libproxmox-acme-perl: 1.4.2

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.2-4

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.2-2

libpve-guest-common-perl: 4.1-2

libpve-http-server-perl: 4.1-3

libpve-storage-perl: 7.2-8

libqb0: 1.0.5-1

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.0-3

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

proxmox-backup-client: 2.2.5-1

proxmox-backup-file-restore: 2.2.5-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.5.1

pve-cluster: 7.2-2

pve-container: 4.2-2

pve-docs: 7.2-2

pve-edk2-firmware: 3.20220526-1

pve-firewall: 4.2-6

pve-firmware: 3.5-1

pve-ha-manager: 3.4.0

pve-i18n: 2.7-2

pve-qemu-kvm: 7.0.0-3

pve-xtermjs: 4.16.0-1

qemu-server: 7.2-4

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.7.1~bpo11+1

vncterm: 1.7-1

zfsutils-linux: 2.1.5-pve1

2.

Code:

cat /etc/pve/storage.cfg

dir: local

path /var/lib/vz

content iso,vztmpl,backup

#lvmthin: local-lvm

# thinpool data

# vgname pve

# content rootdir,images

dir: datastore1

path /mnt/pve/datastore1

content rootdir,images,iso,vztmpl,backup

is_mountpoint 1

nodes vs-aa1

3.

Code:

cat /etc/pve/lxc/102.conf

arch: amd64

cores: 8

cpuunits: 4096

hostname: ct-tvh-02

memory: 4096

mp0: datastore1:102/vm-102-disk-2.raw,mp=/home/hts/dvr/,acl=0,mountoptions=noatime;nodev;noexec;nosuid,size=200G

net0: name=eth0,bridge=vmbr0,gw=192.168.0.250,hwaddr=C9:21:9A:B4:84:0E,ip=192.168.0.100/24,type=veth

onboot: 1

ostype: ubuntu

rootfs: datastore1:102/vm-102-disk-0.raw,acl=0,mountoptions=noatime;nosuid,size=8G

searchdomain: aa.local

swap: 512

unprivileged: 1

- what happens in the container

I'm using the container as Tvheadend server.

- observed result

- expected result

The container has 4GB Ram allocated.

When I start a recording (or several in parallel) to /home/ the column "buff/cache" increases until "free" is 0 and thus the 4GB are reached. Then I get only write errors, because probably the cache is no longer cleared. With Proxmox 6.4 there were no problems here, because the cache was always released again.

Thanks for your efforts!

Best regards

could you get the following information:

- /proc/meminfo inside the container when you run into the issue

- tvheadend GUI at Configuration -> Debugging -> Memory Information Entries (found via some random googling)

it seems there is also the option to adjust how much data tvheadend caches, maybe playing around with those settings might help as well.


Ok, to reproduce the issue a bit faster, I've changed the memory of the container to 512 MB.

I'll take a look at some cache options in Tvh, but as I mentioned above, the problem occurred for the first time when I updated to version 7. In version 6 everything was fine.

edit: Ok, I've tried all cache options in the recorder profiles but every profile resulted in running out of memory...

Code:

root@ct-tvh-02:~# cat /proc/meminfo

MemTotal: 524288 kB

MemFree: 208 kB

MemAvailable: 406652 kB

Buffers: 0 kB

Cached: 406444 kB

SwapCached: 0 kB

Active: 53148 kB

Inactive: 438500 kB

Active(anon): 136 kB

Inactive(anon): 85340 kB

Active(file): 53012 kB

Inactive(file): 353160 kB

Unevictable: 0 kB

Mlocked: 11948 kB

SwapTotal: 524288 kB

SwapFree: 524284 kB

Dirty: 0 kB

Writeback: 0 kB

AnonPages: 85360 kB

Mapped: 0 kB

Shmem: 116 kB

KReclaimable: 325996 kB

Slab: 0 kB

SReclaimable: 0 kB

SUnreclaim: 0 kB

KernelStack: 9984 kB

PageTables: 77740 kB

NFS_Unstable: 0 kB

Bounce: 0 kB

WritebackTmp: 0 kB

CommitLimit: 28733908 kB

Committed_AS: 53362068 kB

VmallocTotal: 34359738367 kB

VmallocUsed: 114684 kB

VmallocChunk: 0 kB

Percpu: 16832 kB

HardwareCorrupted: 0 kB

AnonHugePages: 0 kB

ShmemHugePages: 0 kB

ShmemPmdMapped: 0 kB

FileHugePages: 0 kB

FilePmdMapped: 0 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

Hugetlb: 0 kB

DirectMap4k: 597864 kB

DirectMap2M: 38199296 kB

DirectMap1G: 13631488 kB
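To tell tmpfs usage apart from ordinary page cache in output like the above, one rough approach is to subtract Shmem from Cached. This is a sketch, not something posted in the thread: the function name `cache_breakdown` is made up, and it assumes that Cached in /proc/meminfo includes shared memory/tmpfs pages, as it does on a plain Linux kernel.

```shell
#!/bin/sh
# cache_breakdown [MEMINFO_FILE]
# Split the "Cached" figure into tmpfs/shared memory (Shmem) and the
# remaining file-backed page cache. Values are in kB, as in /proc/meminfo.
cache_breakdown() {
    awk '
        $1 == "Cached:" { cached = $2 }
        $1 == "Shmem:"  { shmem = $2 }
        END {
            printf "shmem_kB=%d pagecache_kB=%d\n", shmem, cached - shmem
        }' "${1:-/proc/meminfo}"
}
```

With the figures above (Cached 406444 kB, Shmem 116 kB) nearly all of the cache is file-backed page cache rather than tmpfs, which fits the observation that restricting tmpfs sizes would not help in this case.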


okay - one more thing that might help shed light on the issue: could you dump /sys/fs/cgroup/lxc/CTID/memory.stat (replace CTID accordingly) on the host when the problem has been triggered?

is anything else outside of the container accessing the recordings/files created by tvheadend?


Okay..

Output:

Code:

cat /sys/fs/cgroup/lxc/102/memory.stat

anon 81432576

file 424726528

kernel_stack 1769472

pagetables 2281472

percpu 1521472

sock 20480

shmem 122880

file_mapped 62537728

file_dirty 7905280

file_writeback 0

swapcached 0

anon_thp 0

file_thp 0

shmem_thp 0

inactive_anon 81444864

active_anon 110592

inactive_file 370253824

active_file 54329344

unevictable 0

slab_reclaimable 20042808

slab_unreclaimable 3527480

slab 23570288

workingset_refault_anon 0

workingset_refault_file 19189

workingset_activate_anon 0

workingset_activate_file 3357

workingset_restore_anon 0

workingset_restore_file 2505

workingset_nodereclaim 0

pgfault 214732

pgmajfault 1020

pgrefill 144

pgscan 28573

pgsteal 25146

pgactivate 16936

pgdeactivate 200

pglazyfree 0

pglazyfreed 0

thp_fault_alloc 0

thp_collapse_alloc 0

No, no service outside is accessing the files.

Thanks for helping!

thanks!

could you try the following (please don't change any of the memory related settings otherwise while doing the test!)

replace CTID with your container ID again for all commands

Code:

cat /sys/fs/cgroup/lxc/CTID/memory.stat

this will return the current hard limit for memory usage. now calculate 90% of that, and replace VALUE with the result in the following commands:

Code:

echo VALUE > /sys/fs/cgroup/lxc/CTID/memory.high

echo VALUE > /sys/fs/cgroup/lxc/CTID/ns/memory.high

this should cause the kernel to start reclaiming memory sooner (once the 90% watermark is reached). I am not sure what tvheadend does differently than other applications/work loads, but I hope it helps. please report back with results in either case!

Now which of all the output values is the current hard limit for memory usage?

edit: I guess you meant

cat /sys/fs/cgroup/lxc/CTID/memory.high ?

I used this and calculated 90 percent of the memory, then wrote it to /sys/fs/cgroup/lxc/CTID/memory.high and /sys/fs/cgroup/lxc/CTID/ns/memory.high - it seems to work now!

Can you tell me how to make this change persistent for this container?

Thanks!


Hello,

I'm seeing the same issue so keen to help with diagnosis.

I've run:

Code:

cat /sys/fs/cgroup/lxc/CTID/memory.stat

where you said:

Quote: this will return the current hard limit for memory usage.

Apologies if I've not understood, but which value from this command should I take 90% of when setting memory.high etc.?

With tvheadend or with another application?

Quote: sorry, that was a typo (or rather, copy-paste error). the hard limit is in memory.max, not memory.stat

Thanks, can you tell me where I can make this change persistent?

Quote: not possible at the moment, that would need to be integrated in pve-container. does it improve the situation?

Yeah, this fixes the memory issue - thank you for this. I made a script as a workaround for now.
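The workaround script itself was not posted. A minimal sketch of what such a script might look like, following the steps described in the thread (read memory.max, take 90% of it, write the result to both memory.high files), is shown below. The function names and the optional second argument are made up for illustration, and the cgroup paths are the ones quoted above.

```shell
#!/bin/sh
# ninety_percent BYTES
# Integer 90% of a byte count (rounded down).
ninety_percent() {
    echo $(( $1 * 90 / 100 ))
}

# set_memory_high CTID [CGROUP_ROOT]
# Run on the host as root. CGROUP_ROOT defaults to the path used in
# this thread; the override exists only to make the sketch testable.
set_memory_high() {
    ctid=$1
    root=${2:-/sys/fs/cgroup/lxc}
    max=$(cat "$root/$ctid/memory.max") || return 1
    # memory.max contains the literal string "max" when no limit is set
    if [ "$max" = "max" ]; then
        echo "no hard memory limit set for container $ctid" >&2
        return 1
    fi
    value=$(ninety_percent "$max")
    echo "$value" > "$root/$ctid/memory.high"
    echo "$value" > "$root/$ctid/ns/memory.high"
}
```

For the 512 MB test container, `set_memory_high 102` would write 483183820 to both files. Since the setting cannot be made persistent at the moment, it would have to be applied again after every container start.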