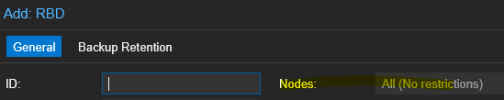

Is there some way to restrict which nodes within a cluster can host containers or VMs? I know that I can simply not place containers or VMs on various nodes, but I would prefer some way to restrict which nodes are shown in dialogs for hosting containers or VMs, such as migration targets.

Classify Cluster Node Roles?

- Thread starter jamin.collins



> You could use HA groups to do this.

Can you elaborate on this? I believe I've used them to some degree in the past, but I don't believe their use removed other non-HA member hosts from the migrate target dialog.
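For reference, the HA group suggestion might look roughly like the sketch below. It assumes a Proxmox VE release that still provides HA groups through `ha-manager groupadd`; the group name `virt-only` and the node names `virt1` and `virt2` are hypothetical. The helper only prints the command (a dry run), so nothing changes until the output is reviewed and run on a cluster node.

```shell
#!/bin/sh
# Dry run: print the command instead of executing it.
# Arguments: $1 = HA group name (hypothetical), $2 = comma-separated node names.
gen_group_cmd() {
    group="$1"
    nodes="$2"
    # --restricted 1 asks HA to run the group's resources only on the listed nodes.
    echo "ha-manager groupadd ${group} --nodes ${nodes} --restricted 1"
}

# Example with placeholder names; paste the printed line into a shell on a node.
gen_group_cmd virt-only virt1,virt2
```

As noted later in the thread, this affects where HA will run a VM, not which nodes the migration dialog lists.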

Setting HA on each VM seems quite tedious. Is there a scriptable or bulk method of assignment?

NVM: found `ha-manager`
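A minimal sketch of scripting the assignment with `ha-manager`, assuming the HA group already exists and a release where `ha-manager add` accepts `--group`. The group name `virt-only` and the VM IDs are hypothetical placeholders. The function prints one command per VM rather than executing anything.

```shell
#!/bin/sh
# Dry run: emit one `ha-manager add` command per VM ID.
# Arguments: $1 = HA group name (hypothetical), remaining args = VM IDs.
gen_ha_cmds() {
    group="$1"
    shift
    for vmid in "$@"; do
        echo "ha-manager add vm:${vmid} --group ${group}"
    done
}

# Example with placeholder IDs; review the output, then pipe it to `sh` on a node.
gen_ha_cmds virt-only 100 101 102
```

Printing first keeps the bulk change reviewable; piping the output to `sh` applies it.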


So, HA seems to move the VMs to the desired hosts, but does not appear to alter (or restrict) the migration target drop down in the UI.

> So, HA seems to move the VMs to the desired hosts, but does not appear to alter (or restrict) the migration target drop down in the UI.

That's right, but the HA mechanism would immediately re-migrate the VM to a member of the desired HA group if it were manually migrated to the "wrong" node. It would not even move it back to the "first" host if, for example, you had enabled maintenance mode on that host (as the reason for the manual migration).

You could assign the storage for the VMs only to the nodes where they should run. No storage, no images, no VMs.
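The storage restriction can be sketched with `pvesm set` and its `--nodes` option. The storage ID `vm-rbd` and the node names are hypothetical, and the helper only prints the command so it can be checked before being run.

```shell
#!/bin/sh
# Dry run: print the command that limits a storage definition to certain nodes.
# Arguments: $1 = storage ID (hypothetical), $2 = comma-separated node names.
gen_storage_cmd() {
    storage="$1"
    nodes="$2"
    echo "pvesm set ${storage} --nodes ${nodes}"
}

# Example with placeholder names.
gen_storage_cmd vm-rbd virt1,virt2
```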

> You could assign the storage for the VMs only to the nodes where they should run. No storage, no images, no VMs.

My use case is (currently) to have two different classifications of Proxmox hosts:

- CEPH OSD nodes

- Virt nodes

So, storage for the VMs is by definition the CEPH OSD nodes. However, I don't want to have any VMs running on the CEPH OSD nodes.

Interesting, that brings things one step closer. The UI now gives an error when I try to migrate a VM to one of the CEPH nodes.

Would still be better to not even list the invalid or undesired targets.


The challenge is, I think, that the group does not mean "VM can be here"; it means "VM can run here." If you shut down a VM, you can move it outside the HA group. If you then start it, it will migrate off to a node that is in the group. So, if the VM is off, all the nodes are valid choices.