Hello everyone,

I recently installed Ceph on my three-node cluster, and at first it worked great.

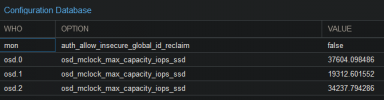

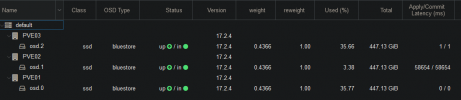

But after a while I noticed that the Ceph pool would sometimes hang and stutter. That's when I looked into the configuration and saw this:

I use three identical SSDs and checked that every node is running them at SATA 6 Gb/s and so on. Everything should be working fine, but Ceph seems to think OSD 1 is on SATA 3 Gb/s or something.

There is probably a way to adjust this manually, or to make Ceph recalculate the values, but I haven't found it anywhere I've looked.

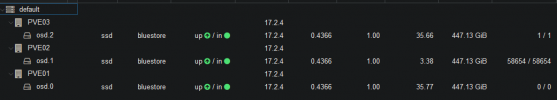

This also happens sometimes. Just now I tried re-adding the OSD to see if that would fix it, but now I think I've nuked my Ceph pool:

Could this SSD be dead, even though its SMART values look OK?

If anyone could help me here, I would be very grateful!

Thanks!