Hello everyone!

I have a question regarding Ceph on Proxmox. I have a Ceph cluster in production and would like to rebalance my OSDs, since some of them are reaching 90% usage.

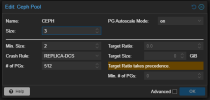

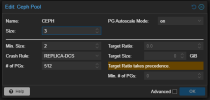
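To list the near-full OSDs as text instead of screenshots, here is a minimal Python sketch that filters the output of `ceph osd df -f json`. It assumes the JSON layout of recent Ceph releases: a top-level `nodes` list whose entries have `name` and `utilization` (a percentage). Check that against your own output. The helper names and the 85% cut-off are illustrative choices, not anything Ceph or Proxmox defines.

```python
import json
import subprocess


def osds_above(report: dict, limit_pct: float = 85.0) -> list[tuple[str, float]]:
    """Return (name, utilization %) for OSDs above limit_pct, fullest first.

    Assumes the `ceph osd df -f json` layout: a "nodes" list whose entries
    carry "name" and "utilization" (percent). Verify on your Ceph version.
    """
    hot = [
        (node["name"], float(node["utilization"]))
        for node in report.get("nodes", [])
        if float(node["utilization"]) > limit_pct
    ]
    # Sort so the fullest OSDs come first.
    return sorted(hot, key=lambda item: item[1], reverse=True)


def load_osd_df() -> dict:
    """Run `ceph osd df -f json` on a cluster node and parse the result."""
    out = subprocess.run(
        ["ceph", "osd", "df", "-f", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)
```

On a node with the Ceph CLI and an admin keyring, `osds_above(load_osd_df(), 85.0)` should return the fullest OSDs first.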

My pool was manually set to 512 PGs with the PG Autoscale option OFF, and now I've changed it to PG Autoscale ON.
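A possible reason nothing changed: as I understand the Ceph documentation, the PG autoscaler only changes `pg_num` when its target differs from the current value by more than a factor of three (a threshold of 3.0 by default in recent releases). The sketch below applies that rule to the numbers in this post. The function name is hypothetical, and the rule is paraphrased from the documentation, not copied from the autoscaler's source.

```python
def autoscaler_would_adjust(current_pg_num: int, target_pg_num: int,
                            threshold: float = 3.0) -> bool:
    """Hypothetical sketch of the autoscaler's change rule (assumed).

    pg_num is only changed when the target is more than `threshold`
    times larger or smaller than the current value.
    """
    if current_pg_num <= 0 or target_pg_num <= 0:
        raise ValueError("PG counts must be positive")
    return (target_pg_num > current_pg_num * threshold
            or target_pg_num < current_pg_num / threshold)
```

With this post's numbers, 512 to 256 is only a factor of 2, so `autoscaler_would_adjust(512, 256)` returns `False`. Under this rule, the autoscaler would leave the pool alone even with autoscaling ON.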

I used:

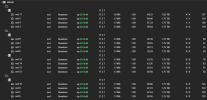

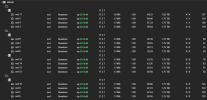

And here are my OSDs:

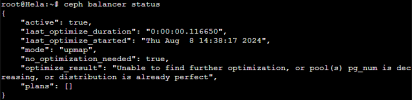

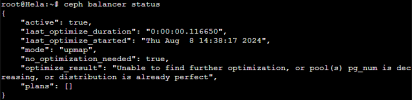

I get this return from:

However, even after making this change, the autoscaling didn't start automatically. PROXMOX suggests that the optimized number of PGs is 256. If I manually change the quantity, would that force the process to start?

I would like to know if there's anything else I need to do to initiate the process?

I have a question regarding CEPH on PROXMOX. I have a CEPH cluster in production and would like to rebalance my OSDs since some of them are reaching 90% usage.

My pool was manually set to 512 PGs with the PG Autoscale option OFF, and now I've changed it to PG Autoscale ON.

I used:

ceph config set global osd_mclock_profile high_client_ops to ensure the action occurs in production without impacting the VMs I have running.And here are my OSDs:

I get this return from:

ceph balancer status

However, even after making this change, the autoscaling didn't start automatically. PROXMOX suggests that the optimized number of PGs is 256. If I manually change the quantity, would that force the process to start?

I would like to know if there's anything else I need to do to initiate the process?
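In Ceph, the `balancer` mgr module, not the PG autoscaler, is the component that moves PGs between OSDs to even out usage. Here is a minimal Python sketch that reads `ceph balancer status -f json` and reports whether the balancer is active and in which mode. The `active` and `mode` field names are assumed from recent releases, and the helper names are hypothetical.

```python
import json
import subprocess


def summarize_balancer(status: dict) -> str:
    """Summarize `ceph balancer status` JSON.

    Assumes the "active" (bool) and "mode" (str) keys; missing keys are
    reported as inactive / unknown.
    """
    active = bool(status.get("active", False))
    mode = status.get("mode", "unknown")
    state = "active" if active else "inactive"
    return f"balancer {state}, mode={mode}"


def load_balancer_status() -> dict:
    """Run `ceph balancer status -f json` on a cluster node and parse it."""
    out = subprocess.run(
        ["ceph", "balancer", "status", "-f", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)
```

If the result is `inactive`, the balancer can be enabled with `ceph balancer mode upmap` followed by `ceph balancer on`. Upmap mode requires all clients to be Luminous or newer.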