Deleted member 33567

Guest

Hi,

After recent updates, Ceph has started to act very weird.

We keep losing one OSD; the relevant syslog excerpt is included below.

There seems to be an issue with communication between the Ceph OSDs.

Whenever I start osd.6 it comes up once; when it fails again, starting it gives me:

command '/bin/systemctl start ceph-osd@6' failed: exit code 1

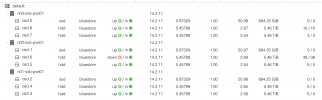
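Since `exit code 1` on its own says little, here is a minimal sketch of how the unit status and the last journal entries for the OSD could be gathered in one go. The helper names `diagnostic_commands` and `collect` are made up for illustration, and the choice of 50 journal lines is arbitrary; the sketch only assumes the stock `systemctl` and `journalctl` tools on the node.

```python
import subprocess


def diagnostic_commands(osd_id):
    """Build the (hypothetical) set of commands to inspect one OSD unit."""
    unit = f"ceph-osd@{osd_id}"
    return [
        # Current unit state, including the last exit status systemd recorded.
        ["systemctl", "status", unit, "--no-pager"],
        # Last 50 journal lines for the unit (the count is an arbitrary choice).
        ["journalctl", "-u", unit, "-n", "50", "--no-pager"],
    ]


def collect(osd_id):
    """Run each command and print its output; failures are shown, not raised."""
    for argv in diagnostic_commands(osd_id):
        result = subprocess.run(argv, capture_output=True, text=True, check=False)
        print("$ " + " ".join(argv))
        print(result.stdout or result.stderr)


if __name__ == "__main__":
    collect(6)  # osd.6 is the OSD from this post
```

Running it on the node that hosts osd.6 should show whatever the daemon logged just before it exited, which is usually more telling than the exit code.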

ceph -s

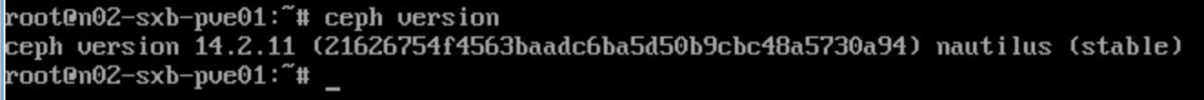

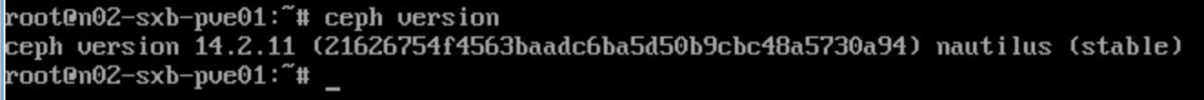

ceph version:

Syslog excerpt:

2020-10-17 04:28:21.922478 mon.n02-sxb-pve01 (mon.0) 912 : cluster [INF] osd.6 [v2:172.17.1.2:6814/308596,v1:172.17.1.2:6817/308596] boot

2020-10-17 04:28:23.919914 mon.n02-sxb-pve01 (mon.0) 918 : cluster [WRN] Health check update: Degraded data redundancy: 15240/781113 objects degraded (1.951%), 61 pgs degraded (PG_DEGRADED)

2020-10-17 04:28:22.935866 osd.8 (osd.8) 2005 : cluster [INF] 3.18 continuing backfill to osd.6 from (1412'1563816,2102'1566816] MIN to 2102'1566816

2020-10-17 04:28:28.917032 mon.n02-sxb-pve01 (mon.0) 920 : cluster [INF] osd.6 failed (root=default,host=n02-sxb-pve01) (connection refused reported by osd.0)

2020-10-17 04:28:28.934114 mon.n02-sxb-pve01 (mon.0) 928 : cluster [WRN] Health check failed: 1 osds down (OSD_DOWN)

2020-10-17 04:28:29.937247 mon.n02-sxb-pve01 (mon.0) 930 : cluster [WRN] Health check update: Degraded data redundancy: 15233/781113 objects degraded (1.950%), 55 pgs degraded (PG_DEGRADED)

2020-10-17 04:28:34.726454 mon.n02-sxb-pve01 (mon.0) 934 : cluster [INF] osd.8 failed (root=default,host=n03-sxb-pve01) (connection refused reported by osd.3)

2020-10-17 04:28:34.942949 mon.n02-sxb-pve01 (mon.0) 954 : cluster [WRN] Health check update: 2 osds down (OSD_DOWN)

2020-10-17 04:28:37.293519 mon.n02-sxb-pve01 (mon.0) 958 : cluster [WRN] Health check update: Degraded data redundancy: 41466/781113 objects degraded (5.309%), 142 pgs degraded (PG_DEGRADED)

2020-10-17 04:28:39.953418 mon.n02-sxb-pve01 (mon.0) 959 : cluster [WRN] Health check failed: Reduced data availability: 6 pgs inactive (PG_AVAILABILITY)

2020-10-17 04:28:42.007881 mon.n02-sxb-pve01 (mon.0) 962 : cluster [WRN] Health check update: 1 osds down (OSD_DOWN)

2020-10-17 04:28:42.015487 mon.n02-sxb-pve01 (mon.0) 963 : cluster [INF] osd.6 [v2:172.17.1.2:6814/308850,v1:172.17.1.2:6817/308850] boot

2020-10-17 04:28:42.293935 mon.n02-sxb-pve01 (mon.0) 966 : cluster [WRN] Health check update: Degraded data redundancy: 82616/781113 objects degraded (10.577%), 214 pgs degraded (PG_DEGRADED)

2020-10-17 04:28:47.302802 mon.n02-sxb-pve01 (mon.0) 971 : cluster [WRN] Health check update: Degraded data redundancy: 60544/781113 objects degraded (7.751%), 177 pgs degraded (PG_DEGRADED)

2020-10-17 04:28:47.925637 mon.n02-sxb-pve01 (mon.0) 974 : cluster [INF] Health check cleared: PG_AVAILABILITY (was: Reduced data availability: 6 pgs inactive)

2020-10-17 04:28:48.929895 mon.n02-sxb-pve01 (mon.0) 975 : cluster [INF] Health check cleared: OSD_DOWN (was: 1 osds down)

2020-10-17 04:28:48.931768 mon.n02-sxb-pve01 (mon.0) 976 : cluster [INF] osd.8 [v2:172.17.1.3:6818/1402458,v1:172.17.1.3:6819/1402458] boot

2020-10-17 04:28:51.334835 mon.n02-sxb-pve01 (mon.0) 981 : cluster [INF] osd.6 failed (root=default,host=n02-sxb-pve01) (connection refused reported by osd.7)

2020-10-17 04:28:51.934397 mon.n02-sxb-pve01 (mon.0) 1009 : cluster [WRN] Health check failed: 1 osds down (OSD_DOWN)
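To make the flapping in the excerpt above easier to see, here is a rough sketch that counts `boot` and `failed` events per OSD. The regular expressions are my own guess at the message layout based only on the lines shown here, and `tally_flaps` and `SAMPLE` are names invented for this example, so treat it as a starting point rather than a parser for every Ceph log format.

```python
import re
from collections import Counter

# Patterns inferred from the monitor messages in this post (an assumption,
# not an official log grammar).
BOOT_RE = re.compile(r"cluster \[INF\] (osd\.\d+) \[.*\] boot$")
FAIL_RE = re.compile(r"cluster \[INF\] (osd\.\d+) failed .*reported by (osd\.\d+)\)$")

# A few lines copied verbatim from the excerpt above.
SAMPLE = [
    "2020-10-17 04:28:21.922478 mon.n02-sxb-pve01 (mon.0) 912 : cluster [INF] osd.6 [v2:172.17.1.2:6814/308596,v1:172.17.1.2:6817/308596] boot",
    "2020-10-17 04:28:28.917032 mon.n02-sxb-pve01 (mon.0) 920 : cluster [INF] osd.6 failed (root=default,host=n02-sxb-pve01) (connection refused reported by osd.0)",
    "2020-10-17 04:28:34.726454 mon.n02-sxb-pve01 (mon.0) 934 : cluster [INF] osd.8 failed (root=default,host=n03-sxb-pve01) (connection refused reported by osd.3)",
    "2020-10-17 04:28:42.015487 mon.n02-sxb-pve01 (mon.0) 963 : cluster [INF] osd.6 [v2:172.17.1.2:6814/308850,v1:172.17.1.2:6817/308850] boot",
    "2020-10-17 04:28:48.931768 mon.n02-sxb-pve01 (mon.0) 976 : cluster [INF] osd.8 [v2:172.17.1.3:6818/1402458,v1:172.17.1.3:6819/1402458] boot",
    "2020-10-17 04:28:51.334835 mon.n02-sxb-pve01 (mon.0) 981 : cluster [INF] osd.6 failed (root=default,host=n02-sxb-pve01) (connection refused reported by osd.7)",
]


def tally_flaps(lines):
    """Return (boots, failures): Counters keyed by OSD name."""
    boots, failures = Counter(), Counter()
    for line in lines:
        line = line.strip()
        boot = BOOT_RE.search(line)
        if boot:
            boots[boot.group(1)] += 1
            continue
        fail = FAIL_RE.search(line)
        if fail:
            failures[fail.group(1)] += 1
    return boots, failures


if __name__ == "__main__":
    boots, failures = tally_flaps(SAMPLE)
    for osd in sorted(set(boots) | set(failures)):
        print(f"{osd}: booted {boots[osd]}x, reported failed {failures[osd]}x")
```

On the sample lines this reports osd.6 booting twice and being reported failed twice within about 30 seconds, and osd.8 going down and coming back once in the same window.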
