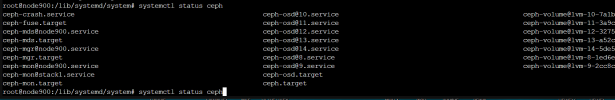

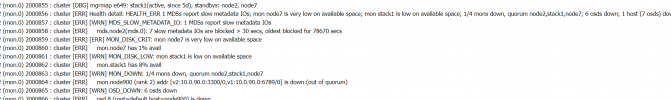

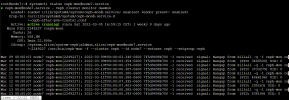

The monitor services seem to be up, but they're not talking.

I mean, maybe they are talking, but there's no MDS or FS there anymore. The monitors seem to see the same number of tasks and show as active... I don't know. I've been deep diving into the Ceph boards trying to learn more. It seems this has happened to others a few times, but with no resolution, and they end up just scrapping everything and reinstalling. I would like to save my VMs on the CPool1 pool if at all possible.
