LACP requires that each connection go over a single link. This guarantees packet ordering and mimics the behavior of a normal Ethernet link.

In LACP, the "sender" decides which link to send each packet on using a hash function that takes fields from L2, L3, L4, or a combination of them (e.g. MAC addresses, IP addresses, source and destination ports). The hash is then squashed onto the available links with a simple modulus (i.e. Sending link = HASH % NumberOfActiveLinks).
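A minimal sketch of the "HASH % NumberOfActiveLinks" idea. CRC32 is an arbitrary stand-in chosen for illustration; the Linux bonding driver and switch vendors use their own (usually simpler, XOR-based) functions, and `select_link` is a hypothetical name. The point is only that the same header fields always produce the same link.

```python
import zlib
from typing import Sequence


def select_link(header_fields: Sequence[object], active_links: int) -> int:
    """Sending link = HASH % NumberOfActiveLinks (CRC32 used as a stand-in hash)."""
    if active_links <= 0:
        raise ValueError("need at least one active link")
    # Serialize the selected L2/L3/L4 fields and hash them.
    key = "|".join(str(f) for f in header_fields).encode()
    return zlib.crc32(key) % active_links


# One TCP connection: its 4-tuple never changes, so neither does its link.
conn = ("192.0.2.10", "192.0.2.20", 40000, 80)
print(select_link(conn, 3))
```

Because the result depends only on the header fields, every packet of a connection takes the same link, which is exactly what preserves ordering.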

If you only use L2 (the MAC address), all connections toward the same MAC address (physical machine) will use the same interface. If you add L3 and L4, chances are that different connections running on the same client will be served through different links. This holds when the number of connections is large; with only a few connections, several of them can easily hash onto the same link.
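To illustrate the difference between hashing on L2 only and on L3+L4, here is a small simulation, assuming one client opening many connections to one server, each from a different ephemeral source port. The MAC/IP addresses, port numbers and the CRC32 hash are made up for the example and do not reproduce any specific driver's policy.

```python
import zlib
from collections import Counter

CLIENT_MAC, SERVER_MAC = "aa:bb:cc:00:00:01", "aa:bb:cc:00:00:02"
CLIENT_IP, SERVER_IP = "192.0.2.10", "192.0.2.20"


def select_link(fields: tuple, active_links: int) -> int:
    # Stand-in hash (CRC32) followed by the modulus over the active links.
    return zlib.crc32(repr(fields).encode()) % active_links


def link_usage(num_connections: int, active_links: int, policy: str) -> Counter:
    """Count how many client->server connections land on each link."""
    usage = Counter()
    for i in range(num_connections):
        src_port = 40000 + i  # each connection uses its own ephemeral port
        if policy == "layer2":
            fields = (CLIENT_MAC, SERVER_MAC)  # identical for every connection
        elif policy == "layer3+4":
            fields = (CLIENT_IP, SERVER_IP, src_port, 80)
        else:
            raise ValueError(f"unknown policy: {policy}")
        usage[select_link(fields, active_links)] += 1
    return usage


print(link_usage(1000, 3, "layer2"))    # every connection on a single link
print(link_usage(1000, 3, "layer3+4"))  # spread over all three links
print(link_usage(2, 3, "layer3+4"))     # two connections may or may not share a link
```

With many connections the L3+L4 hash evens out, but with only two or three connections nothing stops them from colliding on the same link.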

With balance-rr, every packet leaving the bond's outgoing queue uses "the next" Ethernet link, regardless of the connection it belongs to. Since packets are sent "almost in parallel" and travel over different routes and different switches, you can break the single-link limit. The downside is that the TCP stack's reordering logic has to work harder, because packets can be received out of order.
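A toy model of why balance-rr causes reordering, assuming each link has a fixed but slightly different latency (the latency values below are invented for the example). Packet `i` goes out on link `i % n`, and the receiver simply sees packets in arrival-time order.

```python
from dataclasses import dataclass


@dataclass
class Arrival:
    seq: int
    link: int
    time_us: float


def round_robin_send(num_packets, link_latency_us, send_interval_us=1.0):
    """balance-rr: packet i leaves on link i % n, regardless of its connection."""
    n = len(link_latency_us)
    arrivals = []
    for seq in range(num_packets):
        link = seq % n
        sent_at = seq * send_interval_us
        arrivals.append(Arrival(seq, link, sent_at + link_latency_us[link]))
    # The receiver sees packets in the order they arrive, not the order sent.
    arrivals.sort(key=lambda a: (a.time_us, a.seq))
    return [a.seq for a in arrivals]


def count_out_of_order(received):
    """Count packets that arrive after a higher sequence number was already seen."""
    highest, late = -1, 0
    for seq in received:
        if seq < highest:
            late += 1
        else:
            highest = seq
    return late


received = round_robin_send(6, [10.0, 12.5, 10.0])
print(received)                      # [0, 2, 3, 1, 5, 4]
print(count_out_of_order(received))  # 2
```

Each link carries a third of the packets (hence more than one link's worth of throughput for a single connection), but a small latency difference on one link is enough for TCP to see segments out of order.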

In this setup, it is better to keep the switches separate from each other. This prevents possible loops (I know about STP, but it is a source of other issues and is buggy on most budget switches). In your case you need three switches, one for each link of the bond.

Edit: I would like to add that if you use a single switch to connect a bond, the packets lose their parallelism once they enter the switch. That is, the switch decides how to send them on to the destination server. If the destination happens to be another bond, chances are the switch will use some type of XOR/hash policy that limits the transmission to a single link. This is another good reason to use multiple switches with a single Ethernet link per switch: that way the switch has no decision to make and can only forward the packet via the one channel it controls.
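A sketch of the two topologies described above, assuming the sender uses balance-rr for a single TCP flow and, in the single-switch case, the switch forwards to the destination bond with a per-flow hash (CRC32 here, as a hypothetical stand-in for whatever the switch actually uses). The flow tuple is made up.

```python
import zlib
from collections import Counter

FLOW = ("192.0.2.10", "192.0.2.20", 40000, 5201)  # one TCP connection


def lag_member(flow: tuple, lag_ports: int) -> int:
    """Hypothetical switch hash: every frame of a flow maps to one LAG member."""
    return zlib.crc32(repr(flow).encode()) % lag_ports


def sender_balance_rr(num_packets: int, num_links: int):
    """The sending bond spreads one flow round-robin: (flow, ingress link) pairs."""
    return [(FLOW, i % num_links) for i in range(num_packets)]


def via_single_switch(packets, lag_ports: int) -> Counter:
    # The switch re-hashes on the way out, so the ingress link no longer matters.
    return Counter(lag_member(flow, lag_ports) for flow, _ in packets)


def via_one_switch_per_link(packets) -> Counter:
    # Each switch has exactly one port toward the server: egress link == ingress link.
    return Counter(ingress for _, ingress in packets)


packets = sender_balance_rr(9, 3)
print(via_single_switch(packets, 3))     # all 9 frames leave on one port
print(via_one_switch_per_link(packets))  # Counter({0: 3, 1: 3, 2: 3})
```

In the single-switch case the round-robin spreading done by the sender is undone at the switch; with one switch per link there is nothing to re-hash, so the spread survives all the way to the destination.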

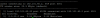

Slightly off-topic: what is the tool you're using to visualize the bond usage? I've never seen it before.

Edit: found it, it's nload.