I am looking for some guidance to finalize the setup of a 3-node Proxmox cluster with Ceph and shared iSCSI storage. While it works, I am not really happy with the resilience of the Ceph cluster.

Each node has 2x10GbE ports and 2x480GB SSDs dedicated to Ceph (plus 2x256 NVMe storage for the system and other things). The NAS has 1x10GbE and 2x5GbE ports (plus 2x1GbE). Following some past discussion, I have set up the following for now:

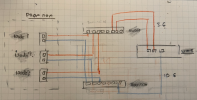

- 2 distinct networks using 2 MikroTik CRS312-4C+8XG-RM switches (total non-blocking throughput of 120 Gbps, switching capacity of 240 Gbps and forwarding rate of 178 Mpps)

- The Ceph network only goes over 1 switch, in 1 subnet

- The 2x5GbE ports of the NAS are connected to 1 switch, while the 10GbE port is connected to the other

- I am using a multipath session for iSCSI on each node.

What is not in the picture is the backup server, which is connected to each switch with one 10GbE link and is also supposed to use the iSCSI storage. Unfortunately I can't grow its local storage much, since it only has 2x240GB 2.5" SSDs, except maybe by using USB3.

The issue with the design above is that while iSCSI really does use 2 different networks, the Ceph network is only on one switch.
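To make the concern concrete, here is a rough Python sketch of the failure cases as I understand them. The switch labels `sw1`/`sw2` are made up for the example, and which of the two switches actually carries Ceph is an assumption; the only facts taken from my setup are that iSCSI uses both switches and Ceph uses one.

```python
# Hypothetical model of the topology described above.
# "sw1" and "sw2" are invented labels for the two switches; assigning
# Ceph to "sw1" is an assumption made only for illustration.
NETWORK_SWITCHES = {
    "iscsi": {"sw1", "sw2"},  # multipath sessions go over both switches
    "ceph": {"sw1"},          # single subnet on a single switch
}


def surviving_networks(failed_switch):
    """Return the networks that still have at least one working switch."""
    return sorted(
        name
        for name, switches in NETWORK_SWITCHES.items()
        if switches - {failed_switch}
    )


def single_points_of_failure():
    """Return the networks that depend on exactly one switch."""
    return sorted(
        name for name, switches in NETWORK_SWITCHES.items() if len(switches) == 1
    )


if __name__ == "__main__":
    for switch in ("sw1", "sw2"):
        print(f"{switch} down -> still reachable: {surviving_networks(switch)}")
    print("single points of failure:", single_points_of_failure())
```

In this model, losing the switch that carries Ceph takes the whole Ceph network down while iSCSI keeps running on the other path, which is exactly the asymmetry I would like to get rid of.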

Can the Ceph network run over 2 different networks, using VLANs, for the same OSDs, monitor nodes, etc.? I didn't find a way to do it in the web UI, but maybe it is possible on the command line?

Also, should I separate the iSCSI network from the Ceph network and put them in distinct VLANs?

Any hint or feedback is welcome.
