[SOLVED] cannot start ha resource when ceph in health_warn state

- Thread starter rseffner

- Tags

- ceph ha failover

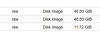

I added another 480GB SSD. Now the pool is about 480GB * 4.

Best, post a ceph df and a ceph df tree.

Code:

RAW STORAGE:
CLASS  SIZE     AVAIL    USED     RAW USED  %RAW USED
hdd    15 TiB   13 TiB   2.3 TiB  2.3 TiB   15.47
ssd    1.3 TiB  831 GiB  507 GiB  510 GiB   38.03
TOTAL  16 TiB   13 TiB   2.8 TiB  2.8 TiB   17.28

POOLS:
POOL         ID  STORED   OBJECTS  USED     %USED  MAX AVAIL
ceph-vm-ssd  2   42 GiB   12.29k   126 GiB  40.44  62 GiB
ceph-vm-hdd  3   1.2 TiB  566.12k  2.7 TiB  18.90  5.4 TiB

Code:

ceph df tree
tree not valid: tree not in detail
Invalid command: unused arguments: [u'tree']
df {detail} : show cluster free space stats
Error EINVAL: invalid command

Thanks!
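For readers comparing the "480GB * 4" estimate with the `ceph df` figures above, a rough sanity check may help. This is only a sketch, under the assumption (not confirmed in the thread) that `ceph-vm-ssd` is a replicated pool, so that USED is roughly STORED multiplied by the replica count. The variable names are made up for illustration.

```shell
#!/usr/bin/env bash
# Figures copied from the ceph df output above (ceph-vm-ssd pool, in GiB).
stored_gib=42
used_gib=126

# Assumption: for a replicated pool, USED / STORED approximates the replica count.
replica_estimate=$(( used_gib / stored_gib ))

# Raw SSD capacity as estimated in the thread: four 480 GB drives.
raw_gb=$(( 480 * 4 ))

# Upper bound on usable space; ignores full ratios and uneven OSD usage.
usable_gb=$(( raw_gb / replica_estimate ))

echo "estimated replicas: ${replica_estimate}"
echo "usable upper bound: ${usable_gb} GB"
```

With these numbers the estimate is 3 replicas and an upper bound of 640 GB, well below the raw 1920 GB. The practical limit is likely lower still, since writes are blocked once the fullest OSD reaches its full ratio.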

Code:

root@pve01sc:~# ceph osd df tree
ID   CLASS  WEIGHT    REWEIGHT  SIZE     RAW USE  DATA     OMAP     META      AVAIL    %USE   VAR   PGS  STATUS  TYPE NAME
-1          16.30057  -         16 TiB   2.1 TiB  2.1 TiB  98 KiB   10 GiB    14 TiB   13.08  1.00  -            root default
-3          8.15028   -         8.2 TiB  1.2 TiB  1.2 TiB  51 KiB   5.0 GiB   6.9 TiB  15.08  1.15  -            host pve01sc
4    hdd    7.27730   1.00000   7.3 TiB  876 GiB  873 GiB  3 KiB    3.0 GiB   6.4 TiB  11.76  0.90  128  up      osd.4
5    hdd    0.43649   1.00000   447 GiB  62 GiB   61 GiB   12 KiB   1024 MiB  385 GiB  13.88  1.06  9    up      osd.5
0    ssd    0.43649   1.00000   447 GiB  321 GiB  320 GiB  36 KiB   1.0 GiB   126 GiB  71.71  5.48  128  up      osd.0
-5          0.43649   -         447 GiB  47 GiB   46 GiB   28 KiB   1024 MiB  400 GiB  10.62  0.81  -            host pve02sc
1    ssd    0.43649   1.00000   447 GiB  47 GiB   46 GiB   28 KiB   1024 MiB  400 GiB  10.62  0.81  135  up      osd.1
-7          7.71379   -         7.7 TiB  877 GiB  873 GiB  19 KiB   4.0 GiB   6.9 TiB  11.10  0.85  -            host pve03sc
3    hdd    7.27730   1.00000   7.3 TiB  876 GiB  873 GiB  3 KiB    3.0 GiB   6.4 TiB  11.76  0.90  128  up      osd.3
2    ssd    0.43649   1.00000   447 GiB  1.0 GiB  2.6 MiB  16 KiB   1024 MiB  446 GiB  0.22   0.02  128  up      osd.2
TOTAL                           16 TiB   2.1 TiB  2.1 TiB  101 KiB  10 GiB    14 TiB   13.08
MIN/MAX VAR: 0.02/5.48 STDDEV: 24.54

The OSD distribution seems very uneven (STDDEV 24.54). What is ceph -s showing now?

What do these commands show?

Code:

ceph osd pool get ceph-vm-ssd all
ceph osd pool get ceph-vm-hdd all

I also very much recommend reading the Ceph architecture guide. It holds vital details for understanding Ceph.

https://docs.ceph.com/docs/nautilus/architecture/
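To make the uneven distribution easier to see, the per-OSD usage can be pulled out of the `ceph osd df tree` output and sorted. The following is a minimal sketch that works on the OSD rows pasted from the output above rather than on a live cluster. The function name `usage_by_osd` is hypothetical, and the field positions assume the column layout shown in this thread, where each size is split into a value and a unit.

```shell
#!/usr/bin/env bash
# OSD rows copied from the `ceph osd df tree` output above.
osd_rows='4 hdd 7.27730 1.00000 7.3 TiB 876 GiB 873 GiB 3 KiB 3.0 GiB 6.4 TiB 11.76 0.90 128 up osd.4
5 hdd 0.43649 1.00000 447 GiB 62 GiB 61 GiB 12 KiB 1024 MiB 385 GiB 13.88 1.06 9 up osd.5
0 ssd 0.43649 1.00000 447 GiB 321 GiB 320 GiB 36 KiB 1.0 GiB 126 GiB 71.71 5.48 128 up osd.0
1 ssd 0.43649 1.00000 447 GiB 47 GiB 46 GiB 28 KiB 1024 MiB 400 GiB 10.62 0.81 135 up osd.1
3 hdd 7.27730 1.00000 7.3 TiB 876 GiB 873 GiB 3 KiB 3.0 GiB 6.4 TiB 11.76 0.90 128 up osd.3
2 ssd 0.43649 1.00000 447 GiB 1.0 GiB 2.6 MiB 16 KiB 1024 MiB 446 GiB 0.22 0.02 128 up osd.2'

# Print %USE, device class and OSD name, fullest OSD first.
# Field 17 is %USE in this layout; the last field is the OSD name.
usage_by_osd() {
  printf '%s\n' "$osd_rows" | awk '{ print $17, $2, $NF }' | LC_ALL=C sort -rn
}

usage_by_osd
```

The first line of the result is osd.0 at 71.71 % and the last is osd.2 at 0.22 %, although both are 447 GiB SSDs in the same device class. The listing also shows osd.5, which has the same 447 GiB size but is classed as hdd.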

Sorry. I moved all my VMs away from Ceph. Now that it is empty, it is stuck in an unhealthy state.

I figured out that the unbalanced state is caused by the SSD I added to unlock its frozen state.

I added the SSD with a USB dock and it was recognized by Ceph as an HDD.

The performance was awesome, but from what I had read about Ceph beforehand, it is not very clear what the minimal setup is. Probably the slogan "runs on commodity hardware" is not that true. The minimal setup is too costly for me. I will remove the third Proxmox cluster host and convert it to Unraid.
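For an SSD that Ceph has classified as an HDD, the CRUSH device class can be reassigned by hand. The sketch below wraps the two `ceph osd crush` commands in a hypothetical helper, `reclass_osd`, that only prints them unless `APPLY=1` is set. Picking osd.5 is an assumption based on its size and class in the `ceph osd df tree` output earlier in the thread; the thread itself does not say which OSD was the USB-attached drive.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (or, with APPLY=1, run) the two commands that
# move an OSD to a different CRUSH device class.
reclass_osd() {
  local osd="$1" class="$2"
  local run=echo                      # dry run by default: only print
  [ "${APPLY:-0}" = "1" ] && run=""   # set APPLY=1 to execute for real
  # An existing class has to be removed before a new one can be set.
  $run ceph osd crush rm-device-class "$osd"
  $run ceph osd crush set-device-class "$class" "$osd"
}

# Assumption: osd.5 is the SSD that was detected as an HDD.
reclass_osd osd.5 ssd
```

Changing the class moves the OSD into the set used by any class-based CRUSH rules, so data movement should be expected once the commands are actually run.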

Probably the slogan "runs on commodity hardware" is not that true.

The mix-up is often commodity vs. consumer hardware.

The minimal setup is too costly for me. I will remove the third Proxmox cluster host and convert it to Unraid.

A pity, but understandable. Ceph does need some hardware to start with. Maybe you would like to try pvesr; it uses ZFS to replicate the VM/CT data to other nodes.

https://pve.proxmox.com/pve-docs/chapter-pvesr.html