Hello and thank you for your time.

TL;DR - Can a Ceph disk be used as a Windows Failover Cluster Shared Volume? If yes, what particular VM configurations need to be made in order to make the WFC accept the disk?

Related forum threads - Support for Windows Failover Clustering, hpe 1060 stroage with 2 hpe server setup, Proxmox HA + MSSQL Failover Cluster

Environment - I have a 5 node PVE 7.14-17 cluster. I have Ceph 17.2.7 co-located on every node. I have 2 AD-joined Windows Server 2022 VMs, File01 and File02.

I am trying to figure out how to create a Ceph disk in Proxmox that can be used as a Windows Failover Cluster (WFC) Shared Volume (CSV). I know this is a Proxmox + Ceph + Windows Server question rather than a strictly Proxmox question, but I figure posting here is more relevant that in a Ceph or Windows Server forum.

Both File server VMs have a separate 50G OS disk in addition to a ~2.5TB shared disk. The shared disk was created by creating a disk on File01 via the Web GUI, then manually inserting it into File02's .conf file. I know attaching multiple VMs to the same disk is not recommended, but that is based on the assumption that the attached VMs are not clustered nor cluster aware. In my case both VMs attached to the disk are joined to a WFC and thus access to the shared disk is controlled.

File01

File02

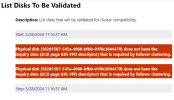

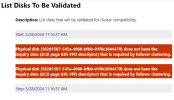

When I run a WFC validation Storage test, I am given the error: Physical disk {UUID} does not have the inquiry data (SCSI page 83h VPD descriptor) that is required by failover clustering

Thus far my troubleshooting (Read: Trial and error) has been focused on the VM's configured disk controller and disk interface. I originally used the VirtIO SCSI Single controller and the SCSI bus/device as is best practice, but since tried (To no success);

- VirtIO SCSI single + SCSI + Discard + Direct Sync + SSD Emulation + native async (My typical VM configuration)

- VirtIO SCSI single + SCSI

- VirtIO SCSI + SCSI + Discard + Direct Sync + SSD Emulation + native async

- VirtIO SCSI + SCSI

- VirtIO SCSI + SATA

- PVSCSI + SCSI

- PVSCSI + SATA

From what (admittedly little) I know about Ceph and Windows Failover Clustering, this should be possible. Is this possible? If it is, I would really appreciate being pointed in the right direction.

Thank you for your time and consideration. Please let me know if any additional information would be useful.

TL;DR - Can a Ceph disk be used as a Windows Failover Cluster Shared Volume? If yes, what VM configuration is needed for the WFC to accept the disk?

Related forum threads - "Support for Windows Failover Clustering", "hpe 1060 stroage with 2 hpe server setup", "Proxmox HA + MSSQL Failover Cluster"

Environment - I have a 5 node PVE 7.14-17 cluster. I have Ceph 17.2.7 co-located on every node. I have 2 AD-joined Windows Server 2022 VMs, File01 and File02.

I am trying to figure out how to create a Ceph disk in Proxmox that can be used as a Windows Failover Cluster (WFC) Cluster Shared Volume (CSV). I know this is a Proxmox + Ceph + Windows Server question rather than a strictly Proxmox question, but I figure posting here is more relevant than in a Ceph or Windows Server forum.

Both file server VMs have a separate 50G OS disk in addition to a ~2.5TB shared disk. I created the shared disk on File01 via the Web GUI, then manually added it to File02's .conf file. I know attaching multiple VMs to the same disk is not recommended, but that is based on the assumption that the attached VMs are neither clustered nor cluster-aware. In my case both VMs attached to the disk are joined to a WFC, so access to the shared disk is controlled.
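One quick way to confirm that both VMs really reference the same volume is to compare the disk lines of their configs. This is a minimal sketch, assuming the config excerpts posted below are pasted in as strings. The helper names (`disk_entries`, `shared_volumes`) are hypothetical, and the parser only understands the simple `key: volume,opt=value` form that appears in this post.

```python
import re

# Config excerpts copied from the two VMs in this post.
FILE01_CONF = """\
ostype: win11
scsi0: PMVE-RBS:vm-204-disk-1,cache=directsync,discard=on,iothread=1,size=50G,ssd=1
scsi1: PMVE-RBS:vm-204-disk-3,aio=native,size=2621952M
scsihw: virtio-scsi-pci
"""
FILE02_CONF = """\
ostype: win11
scsi0: PMVE-RBS:vm-205-disk-1,aio=native,cache=directsync,discard=on,size=50G,ssd=1
scsi1: PMVE-RBS:vm-204-disk-3,aio=native,backup=0,size=2621952M
scsihw: virtio-scsi-pci
"""

# Disk slots look like scsi0, sata1, virtio2, ide0; "scsihw" must not match.
DISK_KEY = re.compile(r"^(scsi|sata|virtio|ide)\d+$")


def disk_entries(conf_text):
    """Map volume ID -> (slot, options dict) for every disk line."""
    disks = {}
    for line in conf_text.splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        if not sep or not DISK_KEY.match(key):
            continue
        volume, *opts = value.strip().split(",")
        options = dict(opt.split("=", 1) for opt in opts if "=" in opt)
        disks[volume] = (key, options)
    return disks


def shared_volumes(conf_a, conf_b):
    """Return the volumes that appear in both configs, with each VM's view."""
    a, b = disk_entries(conf_a), disk_entries(conf_b)
    return {vol: (a[vol], b[vol]) for vol in a.keys() & b.keys()}


shared = shared_volumes(FILE01_CONF, FILE02_CONF)
for volume, (first, second) in shared.items():
    print(volume, first, second)
```

With the two excerpts above, the only volume common to both VMs is `PMVE-RBS:vm-204-disk-3`, attached as `scsi1` on each. The per-VM option dictionaries also make it easy to spot differences such as `backup=0` being set on File02 only.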

File01

Code:

ostype: win11

scsi0: PMVE-RBS:vm-204-disk-1,cache=directsync,discard=on,iothread=1,size=50G,ssd=1

scsi1: PMVE-RBS:vm-204-disk-3,aio=native,size=2621952M

scsihw: virtio-scsi-pci

File02

Code:

ostype: win11

scsi0: PMVE-RBS:vm-205-disk-1,aio=native,cache=directsync,discard=on,size=50G,ssd=1

scsi1: PMVE-RBS:vm-204-disk-3,aio=native,backup=0,size=2621952M

scsihw: virtio-scsi-pci

When I run a WFC validation Storage test, I am given the error: Physical disk {UUID} does not have the inquiry data (SCSI page 83h VPD descriptor) that is required by failover clustering
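For context, the page named in that error is the SCSI Device Identification VPD page (page code 83h), which carries a list of designation descriptors identifying the logical unit. The sketch below decodes a raw page laid out as in the SCSI Primary Commands standard. The function name `parse_vpd_83` is hypothetical, the sample bytes are made up for illustration and were not read from the VMs in this post, and how Windows evaluates the descriptors is not covered here.

```python
import struct

# Designator type values from the Device Identification VPD page layout.
DESIGNATOR_TYPES = {
    0: "vendor specific",
    1: "T10 vendor ID",
    2: "EUI-64",
    3: "NAA",
    8: "SCSI name string",
}


def parse_vpd_83(page: bytes):
    """Return the designation descriptors found in a raw VPD 83h page."""
    if len(page) < 4 or page[1] != 0x83:
        raise ValueError("not a Device Identification (83h) VPD page")
    # Bytes 2-3 hold the big-endian length of the descriptor list.
    (page_length,) = struct.unpack_from(">H", page, 2)
    end = min(4 + page_length, len(page))
    descriptors = []
    offset = 4
    while offset + 4 <= end:
        code_set = page[offset] & 0x0F            # 1 = binary, 2 = ASCII, 3 = UTF-8
        association = (page[offset + 1] >> 4) & 0x03  # 0 = logical unit
        designator_type = page[offset + 1] & 0x0F
        length = page[offset + 3]
        designator = page[offset + 4 : offset + 4 + length]
        descriptors.append(
            {
                "code_set": code_set,
                "association": association,
                "type": DESIGNATOR_TYPES.get(designator_type, f"type {designator_type}"),
                "designator": designator.hex(),
            }
        )
        offset += 4 + length
    return descriptors


# Made-up example: one binary NAA descriptor associated with the logical unit.
sample = bytes([0x00, 0x83, 0x00, 0x0C, 0x01, 0x03, 0x00, 0x08]) + bytes.fromhex(
    "5000000000000001"
)
for descriptor in parse_vpd_83(sample):
    print(descriptor)
```

A page with a zero-length descriptor list parses to an empty list, which is one way a disk could answer the inquiry and still carry no usable identifier.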

Thus far my troubleshooting (read: trial and error) has focused on the VM's configured disk controller and disk interface. I originally used the VirtIO SCSI Single controller and the SCSI bus/device, as is best practice, but have since tried the following combinations, without success:

- VirtIO SCSI single + SCSI + Discard + Direct Sync + SSD Emulation + native async (My typical VM configuration)

- VirtIO SCSI single + SCSI

- VirtIO SCSI + SCSI + Discard + Direct Sync + SSD Emulation + native async

- VirtIO SCSI + SCSI

- VirtIO SCSI + SATA

- PVSCSI + SCSI

- PVSCSI + SATA

From what (admittedly little) I know about Ceph and Windows Failover Clustering, this should be possible. Is it? If so, I would really appreciate being pointed in the right direction.

Thank you for your time and consideration. Please let me know if any additional information would be useful.