Hi all,

I'm trying to wrap my head around an issue I've been experiencing for a while now; I've made some progress, but only by accident.

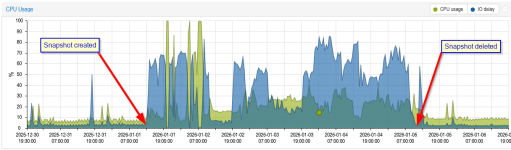

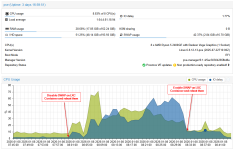

My single-node Proxmox server hosts a TrueNAS Scale VM, which has an HBA passed through to it with 5 disks attached. Among other things, TrueNAS serves an NFS share that the Proxmox host uses as a backup target. I haven't been able to use this reliably: every time I do, the host falls over, and the biggest symptom is very high IO delay on the disk Proxmox is installed on.

By chance, I connected a run-of-the-mill SSD to the host, configured it as a Directory storage on the Proxmox host, and set it as a backup location in the Proxmox UI. I can now back up containers to it successfully, whereas previously a backup would have caused the hypervisor to fall over.

What can I provide to shed some more light into this?

Edit:

I should add that, from the Dashboard, I can see that running a backup to the TrueNAS NFS share causes spikes in the following:

- IO Pressure Stall
- Memory Pressure Stall
- CPU IO Delay
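In case raw numbers are more useful than screenshots, the two pressure metrics can also be logged as text while a backup runs. Below is a rough Python sketch, assuming the host kernel exposes Linux PSI (pressure stall information) under `/proc/pressure/`, which I believe is what the Dashboard graphs. The `parse_psi` and `sample` helpers are my own hypothetical names, and this does not try to reproduce the CPU IO Delay figure.

```python
import time
from pathlib import Path

# Resources whose stall information is read from the kernel's PSI files.
RESOURCES = ("io", "memory")


def parse_psi(text):
    """Parse PSI text into {"some": {...}, "full": {...}}.

    Each line looks like: 'some avg10=0.00 avg60=0.00 avg300=0.00 total=0'.
    The avg* values are percentages of wall time spent stalled;
    'total' is the cumulative stall time in microseconds.
    """
    result = {}
    for line in text.strip().splitlines():
        kind, *fields = line.split()
        values = {}
        for field in fields:
            key, value = field.split("=")
            values[key] = int(value) if key == "total" else float(value)
        result[kind] = values
    return result


def sample(interval=5.0, count=12):
    """Print the 10-second averages for each resource, `count` times."""
    for _ in range(count):
        parts = []
        for res in RESOURCES:
            path = Path("/proc/pressure") / res
            if not path.exists():
                # PSI not available on this kernel (or not running on Linux).
                parts.append(f"{res}: n/a")
                continue
            psi = parse_psi(path.read_text())
            some = psi["some"]["avg10"]
            full = psi.get("full", {}).get("avg10", 0.0)
            parts.append(f"{res}: some={some:.2f}% full={full:.2f}%")
        print(time.strftime("%H:%M:%S"), " | ".join(parts))
        time.sleep(interval)
```

Saved as, say, `psi_watch.py` on the host, it could be started with `python3 -c "import psi_watch; psi_watch.sample()"` just before kicking off a backup to the NFS share, and again for the SSD Directory storage, to compare the two runs.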
