Hello.

First of all, I'm sorry if my English is not good enough. I'm not a native English speaker, but I will try my best to make myself understood, so don't hesitate to ask me to rewrite any explanation that isn't clear enough.

We've been using Hyper-V Core 2016 at work with some more or less sketchy backup solutions (custom scripts at the beginning; when that became unmanageable, we bought Synology NASes for Active Backup for Business, which is working OK right now).

We currently run 29 VMs with 3 different SCADA software packages, and the number of VMs might grow to about 45.

The current Hyper-V server specifications are:

| Component | Specification |
|---|---|
| CPU | 2 × Xeon Silver 4116 (24 physical cores in total) |
| RAM | 256 GB |
| Storage | 3.1 TB used (RAID 10 of 4 × Kingston DC500R 3.84 TB) |

The average CPU load is about 7-10% (yearly statistics collected by Zabbix Server), with a maximum of 64% during updates.

The average disk read rate is 106 reads/s (max 4,903).

The average disk write rate is 107 writes/s (max 4,063).

The average disk utilization is 3.7% (max 96%).

(Because of government obligations, we run far more software than there are people using it...)

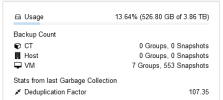

We back up every hour to a Synology NAS (RS1221RP+) with Active Backup for Business (ABB), using Btrfs deduplication on a RAID 10 of Exos X16 HDDs with a RAID 1 SSD cache of Kingston DC500R (3.84 TB) drives. Each backup takes about 3-5 minutes depending on the amount of changed data (around 7-10 GB per backup).

We keep every hourly version for 48 hours, then daily for one month.

This backup server is replicated daily to a second backup server in another building. The two buildings are connected by a private, direct 2 × 10 Gbps fiber link, which can be upgraded if needed (we have 8 more fibers available).

I've encountered many problems with this hypervisor. Meanwhile, I've been using Proxmox VE and Proxmox Backup Server at home and on some smaller projects at work, and they made me smile instead of making me want to throw the server in the trash every day as Hyper-V does. That's why I'm trying to put together a quote to replace the existing solution with a Proxmox-based one.

The expected PVE server specifications are:

| Component | Specification |
|---|---|
| CPU | 32 cores @ 3 GHz (or more, depending on prices) |
| RAM | 390 GB (or more, depending on prices) |
| Storage | 8 TB used at most (we might go for Micron 7450 MAX or Micron 5400 MAX depending on budget) |

The plan is:

1. To keep about the same backup plan as the current one (48 hourly versions plus daily versions for one month) on two backup servers.

2. To back up hourly at a decent speed (15 minutes at most would be good).

3. To replicate hourly between the primary and secondary backup servers at decent speeds.
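To put numbers on goals 2 and 3, here is the rough arithmetic I have in mind. This is only a sketch under my own assumptions: decimal units, a constant sustained rate, no protocol overhead, and only the changed data being read and sent each hour. The function names are just mine for illustration.

```python
"""Rough transfer-time arithmetic for the hourly backup and sync goals.

Assumptions (mine, not from PBS): decimal units (1 GB = 1000 MB),
a constant sustained rate, no protocol/TLS overhead, and only the
changed data being read and sent each hour.
"""


def gbps_to_mb_per_s(gbps: float) -> float:
    """Convert a link speed in Gbit/s to MB/s (8 bits per byte)."""
    return gbps * 1000 / 8


def required_rate_mb_per_s(data_gb: float, window_min: float) -> float:
    """Sustained rate needed to move data_gb within window_min minutes."""
    return data_gb * 1000 / (window_min * 60)


def transfer_seconds(data_gb: float, rate_mb_per_s: float) -> float:
    """Time needed to move data_gb at a sustained rate_mb_per_s."""
    return data_gb * 1000 / rate_mb_per_s


if __name__ == "__main__":
    changed_gb = 10  # upper end of the 7-10 GB we see per hourly backup today
    print(f"needed for a 15 min backup: {required_rate_mb_per_s(changed_gb, 15):.1f} MB/s")
    link = gbps_to_mb_per_s(10)  # one of the two 10 Gbps fibers
    print(f"sync over one 10 Gbps link: {transfer_seconds(changed_gb, link):.0f} s")
```

With these numbers, the hourly changes need only about 11 MB/s to finish within 15 minutes, and a single 10 Gbps fiber could in theory move them in about 8 seconds. That's why I suspect disk performance on both ends matters more than the link.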

The problem is that I can't find enough specific information, especially on storage sizing, to reliably define a specification sheet for a Proxmox Backup Server.

If there is a post I missed that could help, I would be glad if someone could point me to it.

My gut feeling is to go for at least a 16-core @ 3 GHz CPU and 64 GB of RAM, with an ext4 file system. But could it be less?

From what I've read in forum posts, HDDs are a no-go in production, so for PBS we're planning to use at least Micron 5400 MAX SSDs, or better ones if the budget allows.

Is there a formula to calculate how much storage I need for Proxmox Backup Server based on how many GB of data blocks change every hour? Or is there a general rule such as: N GB used in production × ratio (0.5, 2, 3, or ?) × number of backup versions to keep?
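To make the question more concrete, here is the kind of back-of-the-envelope model I have in mind. It is my own rough sketch, not an official Proxmox formula, and these are the assumptions behind it:

- The current data is stored roughly once thanks to deduplication.
- Each retained hourly snapshot adds about one hour of changed data.
- Each daily snapshot older than the hourly window adds about one day of unique changes.
- `reduction` is a guessed compression factor.

One caveat I'm aware of: for VM disks, PBS stores data in fixed-size 4 MiB chunks. The changed-chunk volume may therefore be larger than the 7-10 GB of changed blocks that ABB reports.

```python
"""Back-of-the-envelope PBS datastore size estimate.

My own rough model, NOT an official Proxmox formula. Assumptions:
- deduplication means the current data is stored roughly once;
- every retained hourly snapshot adds about one hour of changed data;
- every daily snapshot older than the hourly window adds about one day
  of unique changed data;
- `reduction` is a guessed compression factor (1.0 = no savings).
"""


def estimate_datastore_gb(
    used_gb: float,
    hourly_change_gb: float,
    hourly_kept: int,
    daily_change_gb: float,
    daily_kept: int,
    reduction: float = 1.0,
) -> float:
    base = used_gb                               # one deduplicated full copy
    hourly = hourly_change_gb * hourly_kept      # changes held by hourly snapshots
    days_in_hourly_window = hourly_kept // 24    # e.g. 48 hourly -> 2 days
    extra_days = max(daily_kept - days_in_hourly_window, 0)
    daily = daily_change_gb * extra_days         # changes held by older dailies
    return (base + hourly + daily) / reduction


if __name__ == "__main__":
    # Worst case for the daily change: 24 x 10 GB, i.e. no hourly change
    # overwrites a block that was already changed earlier the same day.
    size = estimate_datastore_gb(
        used_gb=8000, hourly_change_gb=10, hourly_kept=48,
        daily_change_gb=24 * 10, daily_kept=30,
    )
    print(f"estimated datastore size: {size:.0f} GB")
```

For 8 TB of VM data, 48 hourly and 30 daily versions, and the worst-case daily change, this gives 15200 GB before any compression. What I don't know is how close the real PBS deduplication gets to this model.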

Also, would something like the Micron 5400 MAX be enough for our needs, given that the similarly performing Kingston DC500R drives are more than enough right now? Or do Proxmox VE and Proxmox Backup Server require more IOPS?

Thank you for your feedback.
