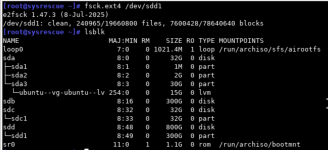

I was trying to increase/decrease the sizes of two LVs in PVE, but only partly succeeded. Using lvresize on the command line, I succeeded in decreasing an LV that is mounted inside the PVE host from a VG/LVM on an external (LUKS-encrypted) disk, but I did not succeed in increasing another LV that is assigned to a client VM (not running at the moment). I had various problems on the command line, e.g. that the LV wasn't activated.

Then I noticed that the GUI has a disk action 'resize' for the client VM. So I tried to increase it there, and this succeeded. However, I made a mistake and increased another one (in another VG, too). I would like to repair that and decrease its size again. I tried entering a negative number in the GUI, but that did not work.

If I try this on the command line on PVE, I get:

Code:

root@pve:~# lvresize --size -500GB --resizefs pve/vm-100-disk-2

fsadm: Cannot get FSTYPE of "/dev/mapper/pve-vm--100--disk--2".

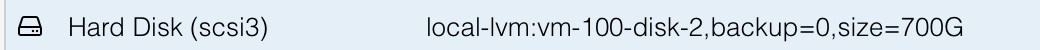

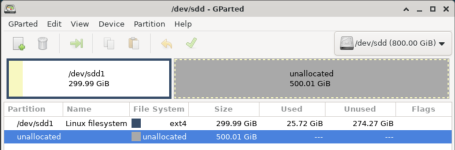

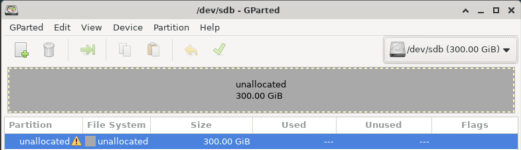

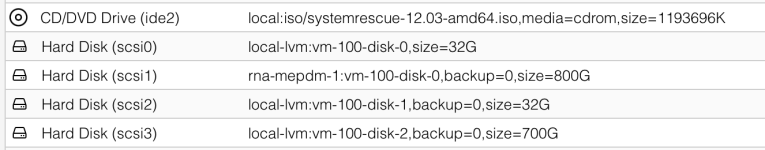

Filesystem check failed.pve/vm-100-disk-2 was 500GB, I accidentally increased it to 700GB, but I would like to shrink it by 300GB, so my current resize wish is -500GB. It is scsi3 (/dev/sdd) in the Ubuntu client. I haven't restarted the VM yet, or rebooted the PVE host.
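To make the size arithmetic explicit, here is a minimal dry-run sketch. It only prints the commands I have in mind and does not touch the LV. The variable names are my own. I am also assuming that the filesystem inside the guest has to be shrunk before the LV itself is reduced, since shrinking the LV first would cut off data.

```shell
#!/bin/sh
# Dry-run sketch: prints the intended commands, executes nothing on the LV.
LV="pve/vm-100-disk-2"     # VG/LV name as used in the lvresize call above
ORIGINAL_GB=500            # size before the accidental GUI resize
CURRENT_GB=700             # size after the accidental GUI resize
INTENDED_SHRINK_GB=300     # shrink I originally wanted

# Target size and the reduction needed from the current size
TARGET_GB=$((ORIGINAL_GB - INTENDED_SHRINK_GB))
DELTA_GB=$((CURRENT_GB - TARGET_GB))

echo "target: ${TARGET_GB}G (current ${CURRENT_GB}G minus ${DELTA_GB}G)"

# Inspection first: current LV size, and what is actually on the LV
echo "lvs --units g ${LV}"
echo "lsblk -f /dev/${LV}"

# Assumption: only after the guest filesystem is at or below the target size
echo "lvreduce --size ${TARGET_GB}G ${LV}"
```

If lsblk shows a partition table on the LV rather than a bare filesystem, that might explain why fsadm cannot determine the FSTYPE, but that is a guess on my part.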