The question is what you are trading off with Veeam in terms of backup performance and quality. I have a hard time keeping my PBS busy, yet it backs up and restores 21 PVE servers. During the CrowdStrike problem, we restored dozens of Windows machines simultaneously using live-restore. It can also do very fast incremental backups and individual file restores because it doesn't need to access an entire disk image, and it has significant de-duplication, which is important on both spinning disk and flash. Spinning disk in servers has become more expensive in the data center because flash is faster and requires less space and energy for the same IOPS.

To me, 23 minutes to restore is slow. With PBS live restore, the time until the VM is running is measured in seconds, and most of the time the transfer to Ceph completes within 10-15 minutes.

Yes, valid points. PBS has very strong points, I agree.

Incremental backups are very fast (well, unless you reboot a VM or a host; then it loses its dirty bitmaps and has to re-read every drive. Veeam's CBT, Changed Block Tracking, is better in that regard).
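To illustrate the dirty-bitmap point, here is a toy model in Python. It is only a sketch of the general idea (an in-memory set of changed block numbers that disappears on restart), not how QEMU or PBS actually implement it; the class and method names are made up for this example.

```python
class TrackedDisk:
    """Toy disk with an in-memory dirty bitmap (hypothetical model)."""

    def __init__(self, num_blocks: int):
        self.blocks = [b"\0"] * num_blocks
        self.dirty = None  # no bitmap exists before the first backup

    def write(self, index: int, data: bytes) -> None:
        self.blocks[index] = data
        if self.dirty is not None:
            self.dirty.add(index)  # remember that this block changed

    def restart(self) -> None:
        # The bitmap only lives in memory, so a restart discards it.
        self.dirty = None

    def blocks_to_read(self) -> list:
        """Blocks the next backup has to read; resets tracking afterwards."""
        if self.dirty is None:
            to_read = list(range(len(self.blocks)))  # full re-read
        else:
            to_read = sorted(self.dirty)  # only changed blocks
        self.dirty = set()
        return to_read


disk = TrackedDisk(8)
print(len(disk.blocks_to_read()))  # first backup reads everything
disk.write(3, b"x")
print(disk.blocks_to_read())       # incremental: only block 3
disk.restart()
print(len(disk.blocks_to_read()))  # bitmap lost: everything again
```

Note that after a restart only the reading is expensive: unchanged chunks are still deduplicated, so little new data ends up on the backup server.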

Live restore is a great feature, but I'm not sure how well it would work if the PBS datastore is not all flash. I wouldn't dare use it on my setups (HDD-backed ZFS datastores).

Deduplication with PBS is... perfect, really. I don't think it gets any better than what they have done.

Parametrizing your infrastructure is also important when you need to reason about performance. "It's slow" is not a problem statement when you can't tell me where your bottlenecks are.

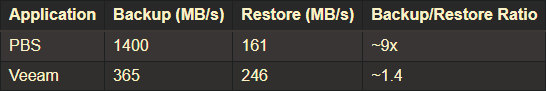

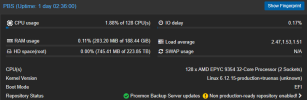

On the contrary, I spent quite some time proving and explaining what I see: the bottlenecks are actually coming from PBS. Please read through my previous posts in this thread. Restore and verify speeds are very slow in my case, and hardware is not the bottleneck. I even bought two servers with much newer-generation CPUs, only to get about the same results as with the old machines... And restores are 10x slower with PBS than with Veeam on the same hardware (actually, Veeam was running in a VM, whereas PBS was running on the host).

Update: benchmarking my setup, backing up runs at 6-7 Gbps on the wire (this is between data centers ~100 km apart with 4x10 Gbps bonded), while writing to storage fluctuates between a few Mbps and ~2 Gbps. I think that mostly has to do with the fact that it is compressing and deduplicating (it uses 3-6 cores for this). I guess Veeam compresses and deduplicates on the client side, which would have a significant performance impact on the hypervisor side.

The same happens in the other direction: it starts pretty high (I am booting live, which is barely noticeable, as if it were local), then the write to storage slows down to about 1-2 Gbps. A lot of the disk is 'empty blocks' that are being thin provisioned, but I get the expected wire speed for a single connection.

Those are nice numbers!

Veeam has several modes of operation. Unless you have a supported SAN (in which case the host doesn't spend any resources), the next best way is to use a proxy VM. In that case host resources are being used.

I believe PBS also uses host resources, as compression and hashing are done on the client side. Deduplication is a simple task the way PBS has implemented it: it just compares the hashes of chunks/blobs and skips saving (and/or sending) data that PBS already has. So processing is mostly reading the snapshot plus hashing. Sending through HTTP/2 over TLS also uses CPU (that could be skipped if there were an option to turn it off in local or securely configured network environments, but that option doesn't exist in PBS).
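The hash-compare-and-skip idea can be sketched in a few lines of Python. This is a minimal illustration, not PBS code: the fixed 4 MiB chunk size, the use of SHA-256, and the `backup`/`restore` helpers with a plain dict as the "datastore" are all assumptions made for the example.

```python
import hashlib

CHUNK_SIZE = 4 * 1024 * 1024  # assumed fixed chunk size, for illustration


def backup(data: bytes, store: dict, chunk_size: int = CHUNK_SIZE):
    """Split data into chunks; store only chunks whose hash is new.

    Returns (index, bytes_sent): the ordered list of chunk digests
    and how many bytes actually had to be stored/sent.
    """
    index = []
    bytes_sent = 0
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in store:  # unknown chunk: save it
            store[digest] = chunk
            bytes_sent += len(chunk)
        index.append(digest)     # known or not, the index references it
    return index, bytes_sent


def restore(index: list, store: dict) -> bytes:
    """Rebuild the original data by looking up each digest in order."""
    return b"".join(store[digest] for digest in index)


store = {}
image = b"A" * 8 + b"B" * 8 + b"A" * 8   # tiny "disk" with a repeated chunk
index, sent = backup(image, store, chunk_size=8)
print(len(index), len(store), sent)       # 3 chunks referenced, 2 stored
print(restore(index, store) == image)
```

The client still has to read and hash every chunk it considers, which is why the CPU cost lands on the host even when very little data is transferred.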

But all in all: backups are fast, no problem there. It's the restores (and verify operations) that could get some attention.

My biggest problem is restore times, the RTO for multi-TB VMs to be precise. And verify speeds. It just takes way too long on hardware that can deliver much more. Everything else is solid. I tried with NVMe, but got no better restore speeds than with HDDs... read my previous posts, it's all there.
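To put the RTO concern into numbers, here is a back-of-the-envelope helper. It assumes a constant sustained throughput and decimal units (1 TB = 10^12 bytes); the function name and the sample inputs are purely illustrative, not measurements from this thread.

```python
def restore_hours(size_tb: float, throughput_gbps: float) -> float:
    """Hours needed to move size_tb terabytes at throughput_gbps gigabits/s."""
    bits = size_tb * 1e12 * 8                 # terabytes -> bits
    seconds = bits / (throughput_gbps * 1e9)  # bits / (bits per second)
    return seconds / 3600


# Hypothetical inputs: 1 TB at a sustained 1 Gbps takes 8000 s.
print(round(restore_hours(1, 1), 2))  # 2.22

# A 10x difference in restore throughput is a 10x difference in RTO.
print(restore_hours(4, 0.2) / restore_hours(4, 2))
```

For multi-TB VMs, the difference between a restore that sustains a couple of Gbps and one that is 10x slower is the difference between hours and most of a day.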