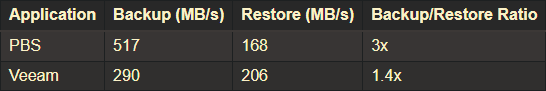

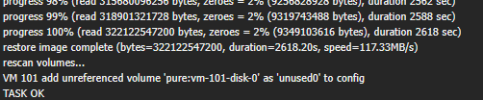

I will try this on a bigger VM, but I'm not really having any issues with PBS restoring moderately sized VMs.

Restoring the 80GB disk in about 74 seconds isn't terrible, but I could see this being quite the issue when you are pulling a couple of TBs.
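To put "a couple of TBs" into rough numbers, here's a back-of-the-envelope projection using the figures from the log below. It's only a sketch under my own assumptions: throughput stays constant no matter how big the disk is, "a couple of TBs" is taken as a hypothetical 2 TiB of real (non-zero) data, and zero chunks are assumed to cost next to nothing, so the non-zero bytes are treated as the real transfer. The log's "MB/s" values appear to be MiB/s (1052770304 bytes / 54.83 s only comes out to 18.31 when dividing by 2^20).

```python
# Rough restore-time projection from the pbs-restore log figures.
# Assumptions (mine, not PBS's): throughput is constant regardless of disk
# size, and zero chunks cost next to nothing to restore.

MIB = 2**20  # the log's "MB/s" values appear to be bytes / 2**20


def speed_mib_s(total_bytes: int, seconds: float) -> float:
    """Average throughput in MiB/s, computed like the log's 'speed=' field."""
    return total_bytes / MIB / seconds


def projected_hours(size_bytes: int, rate_mib_s: float) -> float:
    """Hours to restore size_bytes at a constant rate_mib_s."""
    return size_bytes / MIB / rate_mib_s / 3600


# (total bytes, zero bytes, seconds) copied from the log's final lines.
disks = {
    "disk-0": (1052770304, 134217728, 54.83),
    "disk-1": (85902491648, 82237718528, 73.93),
}

TWO_TIB = 2 * 1024**4  # hypothetical "couple of TBs" of real, non-zero data

for name, (total, zero, secs) in disks.items():
    reported = speed_mib_s(total, secs)
    nonzero = speed_mib_s(total - zero, secs)  # ignores the skipped zero bytes
    print(
        f"{name}: reported {reported:.2f} MiB/s, non-zero {nonzero:.2f} MiB/s, "
        f"2 TiB at the non-zero rate ~ {projected_hours(TWO_TIB, nonzero):.1f} h"
    )
```

Since 95% of disk-1 was zeroes, its 1108 MB/s headline is mostly skipped space. If the non-zero rates (roughly 16 to 47 MiB/s here) held, a 2 TiB disk full of real data would take somewhere around 12 to 36 hours, rather than the ~31 minutes the headline figure would suggest.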

```
new volume ID is 'local-zfs:vm-170-disk-0'
new volume ID is 'local-zfs:vm-170-disk-1'
restore proxmox backup image: /usr/bin/pbs-restore --repository root@pam@10.60.110.155:truenas --ns DER vm/170/2025-03-03T20:21:36Z drive-sata0.img.fidx /dev/zvol/rpool/data/vm-170-disk-0 --verbose --format raw --skip-zero
connecting to repository 'root@pam@10.60.110.155:truenas'
open block backend for target '/dev/zvol/rpool/data/vm-170-disk-0'
starting to restore snapshot 'vm/170/2025-03-03T20:21:36Z'
download and verify backup index
progress 1% (read 12582912 bytes, zeroes = 0% (0 bytes), duration 0 sec)
progress 2% (read 25165824 bytes, zeroes = 0% (0 bytes), duration 0 sec)
progress 3% (read 33554432 bytes, zeroes = 0% (0 bytes), duration 0 sec)
progress 4% (read 46137344 bytes, zeroes = 18% (8388608 bytes), duration 0 sec)
progress 5% (read 54525952 bytes, zeroes = 23% (12582912 bytes), duration 0 sec)
...truncated...
progress 98% (read 1031798784 bytes, zeroes = 12% (130023424 bytes), duration 54 sec)
progress 99% (read 1044381696 bytes, zeroes = 12% (130023424 bytes), duration 54 sec)
progress 100% (read 1052770304 bytes, zeroes = 12% (134217728 bytes), duration 54 sec)
restore image complete (bytes=1052770304, duration=54.83s, speed=18.31MB/s)
restore proxmox backup image: /usr/bin/pbs-restore --repository root@pam@10.60.110.155:truenas --ns DER vm/170/2025-03-03T20:21:36Z drive-sata1.img.fidx /dev/zvol/rpool/data/vm-170-disk-1 --verbose --format raw --skip-zero
connecting to repository 'root@pam@10.60.110.155:truenas'
open block backend for target '/dev/zvol/rpool/data/vm-170-disk-1'
starting to restore snapshot 'vm/170/2025-03-03T20:21:36Z'
download and verify backup index
progress 1% (read 859832320 bytes, zeroes = 13% (117440512 bytes), duration 10 sec)
...truncated...
progress 97% (read 83328237568 bytes, zeroes = 95% (79666610176 bytes), duration 73 sec)
progress 98% (read 84188069888 bytes, zeroes = 95% (80526442496 bytes), duration 73 sec)
progress 99% (read 85047902208 bytes, zeroes = 95% (81386274816 bytes), duration 73 sec)
progress 100% (read 85902491648 bytes, zeroes = 95% (82237718528 bytes), duration 73 sec)
restore image complete (bytes=85902491648, duration=73.93s, speed=1108.06MB/s)
rescan volumes...
TASK OK
```
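If you want these numbers from a longer restore log without copying them by hand, a small parser keyed to the line format above does the job. The regexes are written against the lines shown in this post and are an assumption on my part, not a documented pbs-restore output format, so a different version may print something slightly different. It remembers the zero-byte count from the last `progress` line before each `restore image complete` line and reports the non-zero throughput next to the speed PBS printed.

```python
import re

# Patterns written against the log lines shown above (an assumption, not a
# documented pbs-restore output format).
PROGRESS = re.compile(
    r"progress (\d+)% \(read (\d+) bytes, zeroes = \d+% \((\d+) bytes\), "
    r"duration (\d+) sec\)"
)
COMPLETE = re.compile(
    r"restore image complete \(bytes=(\d+), duration=([\d.]+)s, speed=([\d.]+)MB/s\)"
)


def summarize(log_text: str) -> list[dict]:
    """Return one dict per restored image: total/zero bytes, seconds, speeds."""
    results = []
    last_zero = 0
    for line in log_text.splitlines():
        if m := PROGRESS.search(line):
            last_zero = int(m.group(3))  # zero bytes seen so far for this image
        elif m := COMPLETE.search(line):
            total, secs = int(m.group(1)), float(m.group(2))
            results.append({
                "bytes": total,
                "zero_bytes": last_zero,
                "seconds": secs,
                "reported_mb_s": float(m.group(3)),
                # Throughput of the non-zero data only, in MiB (2**20 bytes)/s.
                "nonzero_mib_s": (total - last_zero) / 2**20 / secs,
            })
            last_zero = 0  # the next image starts counting from scratch
    return results


sample = """\
progress 100% (read 1052770304 bytes, zeroes = 12% (134217728 bytes), duration 54 sec)
restore image complete (bytes=1052770304, duration=54.83s, speed=18.31MB/s)
progress 100% (read 85902491648 bytes, zeroes = 95% (82237718528 bytes), duration 73 sec)
restore image complete (bytes=85902491648, duration=73.93s, speed=1108.06MB/s)
"""

for r in summarize(sample):
    print(r)
```

Feeding it the full task log (rather than the trimmed sample) should give the same two entries, since only the last `progress` line before each completion line is used.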