Hello everyone, I have configured 5 Proxmox nodes to use FC multipath. The only way to achieve high availability is to use the storage as LVM, but LVM does not seem to support QCOW2 disks. I want thin disks, otherwise disk space will be used up quickly, but I also want HA. Is there any way to get both? Thank you

About multipath and lvm

- Thread starter: ngwt

- Tags: ha, cluster, lvm, storage, thin


> LVM does not seem to support QCOW2 format disks

LVM is _block_, not file storage, so you are correct.

> I want thin disks, otherwise disk resources will be consumed quickly

If you must have thin provisioning and you are constrained to shared FC, your only option is something like the OCFS2 cluster filesystem.
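To make "thin" concrete: on file-based storage a disk image can be a sparse file whose apparent size is the full virtual disk, while only the regions actually written consume space; a plain (thick) LVM logical volume reserves its full size up front. The Python sketch below illustrates this with a sparse raw file. It assumes Linux (where `st_blocks` is reported in 512-byte units) and a filesystem that supports sparse files; QCOW2 grows on demand through its own metadata, which this sketch does not model.

```python
import os
import tempfile

def make_thin_image(path, size_bytes):
    """Create a sparse raw image: full apparent size, but no data blocks yet."""
    with open(path, "wb") as f:
        f.truncate(size_bytes)  # extends the file without writing any data

def allocation_report(path):
    """Return (apparent_size, allocated_bytes) for a file.

    On Linux, st_blocks is counted in 512-byte units regardless of the
    filesystem's own block size.
    """
    st = os.stat(path)
    return st.st_size, st.st_blocks * 512

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        img = os.path.join(d, "disk.raw")
        make_thin_image(img, 1 << 30)  # 1 GiB virtual disk
        print("fresh image: apparent=%d allocated=%d" % allocation_report(img))
        with open(img, "r+b") as f:
            f.seek(512 << 20)             # jump to the 512 MiB offset
            f.write(os.urandom(1 << 20))  # simulate the guest writing 1 MiB
        print("after write: apparent=%d allocated=%d" % allocation_report(img))
```

The apparent size stays at 1 GiB in both reports, while the allocated figure only reflects what has been written.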

Blockbridge : Ultra low latency all-NVME shared storage for Proxmox - https://www.blockbridge.com/proxmox

What is the OCFS2 cluster file system? Do you have any relevant articles?


https://en.wikipedia.org/wiki/OCFS2

https://blogs.oracle.com/cloud-infr...s2-using-iscsi-on-oracle-cloud-infrastructure

https://forum.proxmox.com/threads/p...40-sas-partial-howto.57536/page-3#post-577358


Thank you, but I most likely won't adopt it, because it's too difficult for me and I don't know how stable it is. Besides OCFS2, is there no other way to meet my needs?


> What is the OCFS2 cluster file system? Do you have any relevant articles?

OCFS2 stands for Oracle Cluster File System 2. However, it is not widely used anymore, and we do not officially support it.

QCOW2 is only supported on file-based storage. Fibre Channel is block-based storage, therefore QCOW2 disks are not supported on Fibre Channel.

Here is a list of supported storage types.

- NFS and CIFS are shared file-based storage (they support QCOW2 disks), but they do not work over Fibre Channel.

- Ceph is shared storage that uses the disks in your hosts, but it also does not work over Fibre Channel.
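The file-versus-block rule above can be expressed as a small lookup. This is a simplified sketch, not Proxmox VE's own code: the keys are real PVE storage plugin type names (`dir`, `nfs`, `cifs`, `lvm`, `lvmthin`, `rbd`), and the file/block classification and format sets follow the storage overview in the PVE admin guide, reduced to the types relevant to this thread.

```python
# Storage "level" for a few Proxmox VE storage plugin types
# (simplified from the PVE admin guide's storage overview).
STORAGE_LEVEL = {
    "dir": "file",
    "nfs": "file",
    "cifs": "file",
    "lvm": "block",
    "lvmthin": "block",
    "rbd": "block",
}

def disk_formats(plugin_type):
    """Return the VM disk image formats usable on a storage of this type."""
    level = STORAGE_LEVEL.get(plugin_type)
    if level is None:
        raise ValueError(f"unknown storage type: {plugin_type!r}")
    if level == "file":
        # File-level storage holds image files, so QCOW2 (and VMDK) are possible.
        return {"raw", "qcow2", "vmdk"}
    # Block-level storage hands the VM a raw block device (LV, RBD image, ...).
    return {"raw"}
```

For example, `disk_formats("lvm")` returns only `{"raw"}`, which is why a shared LVM volume group on an FC LUN cannot hold QCOW2 disks.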

So HA and thin disks can't be configured at the moment, right?


Not over Fibre Channel, no.

So if I give up on HA, is there any mode that gives me thin disks? It's for 5 hosts to use together.

If you do not share disks between nodes, you can use LVM-thin. You will not get QCOW2 files, but you do get thin provisioning.

So, to simplify: you can only have 2 of the following 3:

- You want to share storage between nodes (needed for HA)

- You want to use FC

- You want to use thin provisioning
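The "2 out of 3" rule can be checked mechanically against the officially supported options mentioned in this thread. This is a hypothetical sketch: the `Option` class, the `matching` helper and the option labels are illustrative names, and OCFS2 is deliberately left out because it is not officially supported.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Option:
    name: str
    shared: bool   # usable by all nodes at once (needed for HA)
    over_fc: bool  # runs on the existing FC SAN
    thin: bool     # thin provisioning

# Officially supported options discussed in this thread (labels are illustrative).
OPTIONS = [
    Option("LVM on a shared FC LUN", shared=True, over_fc=True, thin=False),
    Option("LVM-thin on FC, one pool per node", shared=False, over_fc=True, thin=True),
    Option("NFS/CIFS with QCOW2 disks", shared=True, over_fc=False, thin=True),
    Option("Ceph RBD on local disks", shared=True, over_fc=False, thin=True),
]

def matching(shared=False, over_fc=False, thin=False):
    """Return the names of options that satisfy every requested property."""
    return [
        o.name for o in OPTIONS
        if (o.shared or not shared)
        and (o.over_fc or not over_fc)
        and (o.thin or not thin)
    ]
```

Asking for all three, `matching(shared=True, over_fc=True, thin=True)` returns an empty list, while any two of the three leave at least one option.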


OK, thank you very much for your help today!


Another way would be to create a storage appliance, as you needed to in the old days of VMware:

Set up normal FC-based LVM with multipath support and create a VM that will be your new ZFS-based storage server. With this you'll have HA and everything you need to use it as a second storage in PVE via ZFS over iSCSI, with thin provisioning and snapshots, but no fault tolerance anymore.


Oh! Do you have any related articles?


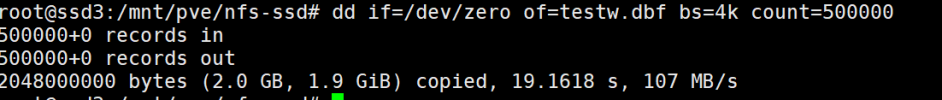

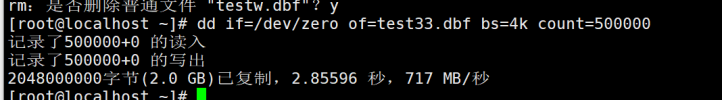

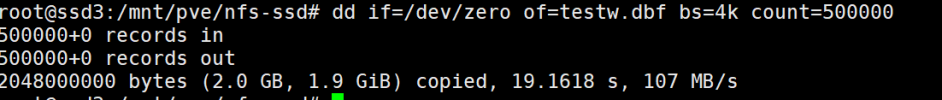

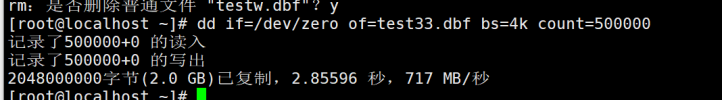

Hello everyone, I have found a rather unusual way to get both HA and thin disks. I set up an NFS service on node 1, backed by the FC storage, and then shared the NFS export with the other hosts. I know the other hosts will then access the storage over the network instead of over FC. But a problem occurred: on one of the hosts with the NFS directory mounted, I ran a dd test against the disk and got a rate of 100 MB/s. Then I created a virtual machine in the NFS directory using a QCOW2 disk. Running dd again, the results obtained are indeed

This is the disk read and write rate on the mounted host

This is the disk read and write rate on the mounted host

This is the disk read and write rate on the mounted host

This is the disk read and write rate on the mounted host

You don't mention where the VM is running. Was it co-located on the same host that has the FC storage locally? If so, then you are skipping a lot of in-between devices to access it.

To find the bottleneck you need to test each piece, i.e. local FC, NFS on top of local FC, remote NFS, etc. The problem is likely in your network.

P.S. The screenshots have identical descriptions.
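Once each piece has been measured separately, comparing neighbouring layers shows where most of the throughput is lost. A minimal sketch, assuming you supply your own measurements as (layer, throughput) pairs in consistent units; `find_bottleneck` is a hypothetical helper, not part of any tool.

```python
def find_bottleneck(layers):
    """Find the layer that loses the largest share of throughput.

    `layers` is a list of (name, throughput) pairs ordered from the raw device
    outward, e.g. local FC -> NFS on top of local FC -> remote NFS. Units only
    need to be consistent (e.g. MB/s). Returns (name, retained_ratio), or
    (None, 1.0) if no layer is slower than the one beneath it.
    """
    if len(layers) < 2:
        raise ValueError("need at least two layers to compare")
    if any(tp <= 0 for _, tp in layers):
        raise ValueError("throughput values must be positive")
    worst_name, worst_ratio = None, 1.0
    for (_, below), (name, here) in zip(layers, layers[1:]):
        ratio = here / below  # fraction of the lower layer's speed retained
        if ratio < worst_ratio:
            worst_name, worst_ratio = name, ratio
    return worst_name, worst_ratio
```

Comparing each layer only with the one directly beneath it keeps a slow bottom layer from hiding a second drop further up the stack.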


The virtual machine runs on a host that has the NFS storage mounted, and its disk is on the NFS storage. Of course, this host is also connected to the FC storage.


dd is never a good benchmarking tool; just use fio for this.
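A sketch of building an fio command line from Python so the same test can be repeated on each storage layer. The fio options used (`--name`, `--filename`, `--rw`, `--bs`, `--size`, `--iodepth`, `--ioengine=libaio`, `--direct=1`, `--runtime`, `--time_based`, `--group_reporting`) are standard fio flags; `libaio` assumes Linux, and the default parameter values and the job name are illustrative choices, not recommendations from this thread. `--direct=1` bypasses the page cache, which a plain `dd` run does not do unless asked to, so the result reflects the storage rather than RAM.

```python
import shlex

def fio_command(filename, rw="randwrite", bs="4k", size="1G",
                iodepth=32, runtime_s=60):
    """Build an fio command line for a direct-I/O test against `filename`.

    Point `filename` at a scratch file on the storage under test; a write
    test aimed at a device or file holding data will overwrite it.
    """
    return [
        "fio",
        "--name=pve-storage-test",  # illustrative job name
        f"--filename={filename}",
        f"--rw={rw}",
        f"--bs={bs}",
        f"--size={size}",
        f"--iodepth={iodepth}",
        "--ioengine=libaio",
        "--direct=1",               # bypass the page cache
        f"--runtime={runtime_s}",
        "--time_based",
        "--group_reporting",
    ]

if __name__ == "__main__":
    # "/mnt/pve/nfs-test" is a hypothetical NFS storage mount point.
    print(shlex.join(fio_command("/mnt/pve/nfs-test/fio-test.bin")))
```

Running the printed command once per layer (local FC, NFS on the FC node, remote NFS, inside the VM) gives numbers that can be compared directly.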


> Oh! Do you have any related articles?

First get normal thick shared LVM working, then create a VM, e.g. with Debian on a small (4-8 GB) disk, and add another disk for ZFS. Then you can read this (German) article on how to set up the rest.