Hello everyone, I'd like to start by saying that I'm new-ish to Proxmox and especially new to server hardware. I recently got my hands on an HPE ProLiant ML350 Gen9 tower server without storage. The server has 2x Intel(R) Xeon(R) CPU E5-2620 v4 CPUs, 32 GB of RAM (16 GB per CPU), and a Smart Array P440ar controller. Before I got this server, I was using two desktop towers to run 5 VMs. I recently transferred all the VMs to the new server and wanted to add a new Windows VM to encode DVDs for my Plex server. (Yes, I know this is technically against the rules, but the server is LAN-only, so I'm essentially just making my DVDs easier to watch.)

The VMs are as follows:

- 100: pfSense router
- 101: Pi-hole DNS filter (headless Debian)
- 102: Lancache for game downloads (headless Debian)
- 103: Plex media server (Debian)
- 104: Zabbix monitoring (headless Debian)
- 105: Windows 10 21H2

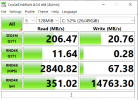

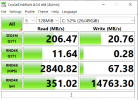

The Windows VM has horrible disk write performance; the Windows install alone took over an hour. I have the latest stable VirtIO SCSI and balloon drivers installed. Here is a CrystalDiskMark "Real World Performance" test from inside the Windows VM:

From top to bottom, the tests are:

- Sequential 1MB, Queues=1, Threads=1 (MB/s)
- Random 4KB, Queues=1, Threads=1 (MB/s)
- Random 4KB, Queues=1, Threads=1 (IOPS)
- Random 4KB, Queues=1, Threads=1 (µs)
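The three Random 4KB rows are really one measurement expressed three ways. A minimal sketch of the conversion, assuming that 4KB means 4096 bytes, that MB/s is decimal (10^6 bytes per second), and that with one queue and one thread the average latency is simply the inverse of the IOPS. The helper names are hypothetical:

```python
# Relate the Random 4KB Q1T1 rows (MB/s, IOPS, latency) to each other.
# Assumptions: 4KB = 4096 bytes, MB/s is decimal, and at queue depth 1 with a
# single thread only one I/O is in flight, so latency is about 1 / IOPS.

BLOCK_BYTES = 4096  # 4 KiB transfer size of the Random 4KB test


def mbps_from_iops(iops: float) -> float:
    """Throughput in decimal MB/s for 4 KiB transfers at the given IOPS."""
    return iops * BLOCK_BYTES / 1_000_000


def latency_us_from_iops(iops: float) -> float:
    """Average latency in microseconds when exactly one I/O is outstanding."""
    return 1_000_000 / iops


if __name__ == "__main__":
    # Example value only; substitute the IOPS figure from your own run.
    iops = 100.0
    print(f"{mbps_from_iops(iops):.3f} MB/s, {latency_us_from_iops(iops):.0f} us")
```

So if one of the three 4K numbers looks off, the other two should move with it; they are not independent results.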

Here is the config of the Windows VM (105):

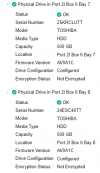

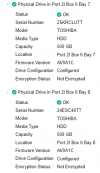
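To double-check the disk-related settings without clicking through the GUI, a small parser over the text form of the config is enough. This is a hypothetical sketch: the helper names are made up, the config text is VM 105's config as posted in this thread, and it assumes that a missing `cache=` option means Proxmox's default (no cache) applies.

```python
from __future__ import annotations

# VM 105 config, copied from the text version posted in this thread.
CONFIG = """\
boot: order=scsi0;net0;sata1
cores: 5
hotplug: disk,network,usb
machine: pc-i440fx-6.2
memory: 8192
meta: creation-qemu=6.2.0,ctime=1652318673
name: Encode
net0: e1000=52:C3:0F:45:3E:A8,bridge=vmbr0,firewall=1
numa: 1
ostype: win10
sata1: local:iso/virtio-win-0.1.217.iso,media=cdrom,size=519096K
scsi0: local-lvm:vm-105-disk-0,size=50G
scsihw: virtio-scsi-pci
smbios1: uuid=01aa6ff7-5603-4950-8216-c883febd7193
sockets: 2
vmgenid: 9c3c1f16-34a0-4ed1-8343-820bcf2c4bf9
"""


def parse_conf(text: str) -> dict[str, str]:
    """Hypothetical helper: turn 'key: value' lines into a dict."""
    conf: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, _, value = line.partition(": ")
        conf[key] = value
    return conf


def parse_drive(value: str) -> tuple[str, dict[str, str]]:
    """Hypothetical helper: split 'storage:volume,opt=val,...' into volume and options."""
    volume, *opts = value.split(",")
    options = dict(opt.split("=", 1) for opt in opts)
    return volume, options


if __name__ == "__main__":
    conf = parse_conf(CONFIG)
    volume, opts = parse_drive(conf["scsi0"])
    # Assumption: no cache= option means the Proxmox default (no cache) applies.
    print("scsi0 volume:", volume)
    print("scsi0 cache mode:", opts.get("cache", "default (no cache)"))
    print("disk controller:", conf["scsihw"])
    print("NIC model:", conf["net0"].split("=", 1)[0])
    print("vCPUs:", int(conf["sockets"]) * int(conf["cores"]))
```

With the posted config this reports the `local-lvm:vm-105-disk-0` volume with no explicit cache mode, the `virtio-scsi-pci` controller, an `e1000` NIC, and 10 vCPUs (2 sockets × 5 cores).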

I have seven 2.5-inch 7200 RPM 500 GB drives installed in the server, configured as follows:

Cache is enabled on the P440ar, but it is read-only (no write cache).

The P440ar is connected to CPU0 through PCIe.

PVE Boot Drive (RAID 1):

The array is not actually degraded; I'm just using 3D-printed caddies, so the controller can't verify that the drives are genuine HP parts.

Cache Storage for VM 102:

RAID 5 to store my encoded video files for VM 103:

Is there something glaringly wrong with my setup? Or do two 7200 RPM drives in RAID 1 really perform that badly? I know RAID 1 doesn't increase write speed, but with a dedicated RAID controller I wouldn't expect it to hurt performance this much.
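One way to sanity-check the RAID 1 numbers is a back-of-envelope random-write estimate. This is a hedged model, not a measurement: the 8.5 ms average seek time is a hypothetical figure (check the drive datasheet), the RAID write penalties are the usual textbook values, and it assumes no controller write cache, which matches the read-only cache setting described in this post.

```python
# Back-of-envelope random-write model for spinning disks (a sketch, not a benchmark).
# Assumptions, none of which come from the post:
#   * hypothetical average seek time of 8.5 ms for a 2.5-inch 7200 RPM drive
#   * average rotational latency of half a revolution
#   * textbook write penalties: RAID 1 = 2 back-end writes, RAID 5 = 4 back-end I/Os
#   * no controller write cache, so every write waits for the disks

BLOCK_BYTES = 4096  # 4 KiB random I/O, as in the CrystalDiskMark Random 4KB test

# Back-end I/Os needed per front-end random write, by RAID level.
WRITE_PENALTY = {"raid1": 2, "raid5": 4}


def drive_random_iops(rpm: int, avg_seek_ms: float) -> float:
    """Random IOPS of one drive: 1 / (seek time + half a rotation)."""
    rotational_latency_ms = 0.5 * 60_000 / rpm
    return 1000 / (avg_seek_ms + rotational_latency_ms)


def array_random_write_iops(level: str, drives: int, per_drive_iops: float) -> float:
    """Total back-end IOPS of the array divided by the RAID write penalty."""
    return drives * per_drive_iops / WRITE_PENALTY[level]


if __name__ == "__main__":
    per_drive = drive_random_iops(rpm=7200, avg_seek_ms=8.5)
    raid1 = array_random_write_iops("raid1", drives=2, per_drive_iops=per_drive)
    print(f"one drive: ~{per_drive:.0f} random IOPS")
    print(f"RAID 1 (2 drives): ~{raid1:.0f} random write IOPS, "
          f"~{raid1 * BLOCK_BYTES / 1_000_000:.2f} MB/s at 4 KiB")
```

Under these assumptions a two-drive RAID 1 lands around 79 random write IOPS, or roughly 0.32 MB/s at 4 KiB, which is the same as a single drive. Random 4K writes in that range would not be surprising for this kind of array without a write cache; the sequential 1MB result is the better one to compare against the drives' rated throughput.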

For reference, here is the VM 105 config as text:

```
root@pve:~# cat /etc/pve/qemu-server/105.conf
boot: order=scsi0;net0;sata1
cores: 5
hotplug: disk,network,usb
machine: pc-i440fx-6.2
memory: 8192
meta: creation-qemu=6.2.0,ctime=1652318673
name: Encode
net0: e1000=52:C3:0F:45:3E:A8,bridge=vmbr0,firewall=1
numa: 1
ostype: win10
sata1: local:iso/virtio-win-0.1.217.iso,media=cdrom,size=519096K
scsi0: local-lvm:vm-105-disk-0,size=50G
scsihw: virtio-scsi-pci
smbios1: uuid=01aa6ff7-5603-4950-8216-c883febd7193
sockets: 2
vmgenid: 9c3c1f16-34a0-4ed1-8343-820bcf2c4bf9
```