New installation: System RAID1 how to create SWAP

- Thread starter zeldhaking


This is actually a great question, and I'm surprised nobody has posted a solution yet. You have to create a ZFS swap volume (a zvol). If you try to create a swapfile the old-fashioned way, you'll see this error:

Code:

root@PVE1:~# swapon /swapfile

swapon: /swapfile: skipping - it appears to have holes.

Later Edit: before proceeding, see my post below. There is a ZFS bug that can cause your machine to lock up in certain situations if you place your swap on a zpool.

Here are the steps to creating a ZFS swap volume:

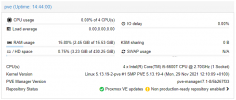

- First and foremost, verify that you have enough space in your root ZFS pool (rpool). This can be done in GUI or CLI

- GUI: Proxmox Host > Disks > ZFS > rpool

- CLI:

zpool list rpool

- Once you have verified that you have free space in your root ZFS pool, you can create a swap zvol no larger than the amount of free space you have. The commands below create the zvol, format it as swap, and enable it. I am setting a swap size of 8G (adjust to suit your needs):

zfs create -V 8G -b $(getconf PAGESIZE) -o logbias=throughput -o sync=always -o primarycache=metadata -o com.sun:auto-snapshot=false rpool/swap

mkswap -f /dev/zvol/rpool/swap

swapon /dev/zvol/rpool/swap

- You can verify your swap partition with the command

swapon --show

- Finally, you need to make the swap partition permanent by editing the fstab. Here's a one-liner to do that:

echo '/dev/zvol/rpool/swap none swap discard 0 0' >> /etc/fstab
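One caveat with the one-liner above: it appends a new line every time it is run, so running it twice leaves a duplicate entry. Here is a minimal sketch of a variant that only appends when the device is not listed yet. The function name `add_swap_fstab_entry` and its optional second argument (an alternative fstab path, handy for trying it on a copy) are my own and not part of the original steps.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): append a swap entry
# to an fstab-style file only if that device is not already listed.
# $1 = swap device, $2 = fstab file (defaults to /etc/fstab)
add_swap_fstab_entry() {
    dev=$1
    fstab=${2:-/etc/fstab}
    entry="$dev none swap discard 0 0"
    # Look for a line whose first field is exactly the device path.
    if awk -v d="$dev" '$1 == d { found = 1 } END { exit !found }' "$fstab"; then
        echo "already present: $dev"
    else
        printf '%s\n' "$entry" >> "$fstab"
        echo "added: $entry"
    fi
}

# Try it on a scratch file first rather than the live /etc/fstab.
scratch=$(mktemp)
add_swap_fstab_entry /dev/zvol/rpool/swap "$scratch"
add_swap_fstab_entry /dev/zvol/rpool/swap "$scratch"
cat "$scratch"
rm -f "$scratch"
```

Once it behaves as expected on the scratch file, calling it with only the device argument would target /etc/fstab.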


Have the ZFS swap problems been fixed in the meantime? Previously you shouldn't run swap on ZFS, and I think this is also the reason why there is no swap when installing with ZFS but there is swap when installing with LVM.

But when installing PVE with ZFS you can switch to the advanced options and tell the PVE installer to keep some disk space unallocated. You can then manually create a non-ZFS swap partition on that unallocated space.


No, they're not fixed yet. Per this AskUbuntu post that explains the bug well:

> Swapping onto a ZFS zvol is possible, but is not a good idea, because of an open bug that can cause your machine to deadlock when low on space.
>
> Swap space is used when the machine is low on memory and trying to free some up by swapping out less-frequently used data.
>
> When ZFS processes writes to a zvol, it can need to allocate new memory inside the kernel to handle updates to various ZFS data structures. If the machine is low on space already, it might need to swap something out to make this space available, but this causes an infinite loop.
>
> So, instead of swapping to ZFS, just allow a small additional partition for swap space. Or for many situations you can just not have swap, and rely on other data being paged out to the filesystem to free up memory.

As @Dunuin mentions, setting appropriate swap on install is the most robust way to go for now.

Therefore, use swap on ZFS at your own risk. Based on my personal experience, I've never filled up 8GB of swap, so this isn't a big risk to me. However, in a production environment I could see this being a huge issue.
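If you are not sure whether a host is already swapping onto a zvol, a quick check of the active swap devices can tell you. This is only a sketch: the function name `list_zvol_swap` is made up, and it assumes that zvol-backed swap shows up in /proc/swaps either as /dev/zdN or under /dev/zvol/, which is how zvols are normally exposed on Linux.

```shell
#!/bin/sh
# Hypothetical helper: list active swap devices that look like ZFS zvols.
# Reads a /proc/swaps-style file ($1, default /proc/swaps).
# Assumption: zvols appear as /dev/zdN or under /dev/zvol/.
list_zvol_swap() {
    swaps=${1:-/proc/swaps}
    # Skip the header line, then print the device column of matching rows.
    awk 'NR > 1 && ($1 ~ /^\/dev\/zd[0-9]+/ || $1 ~ /^\/dev\/zvol\//) { print $1 }' "$swaps"
}

if [ -r /proc/swaps ]; then
    zvols=$(list_zvol_swap)
    if [ -n "$zvols" ]; then
        echo "swap on zvol: $zvols"
    else
        echo "no zvol-backed swap active"
    fi
fi
```

This only reads /proc/swaps, so it is safe to run on a live host.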


One alternative is to set the hdsize parameter in the installer (needs a complete reinstall though) to something smaller than the whole disk size (say 8 GB less). You then have 8 GB of unpartitioned space at the end of the disk, on which you can create a swap partition.

I personally am running quite happily without swap - but of course YMMV


Can you explain how to create the swap space using the unallocated space? I used two 240 GB SSDs to create a RAID 1 during install but only allocated 64 GB for the host. How do I allocate the rest of this space? The GUI doesn't appear to provide any solutions.

Yup, that only works using the CLI. First you create a new partition (for example using fdisk /dev/yourDisk). Then you can create a swap space on it (for example mkswap /dev/yourDiskYourPartition). Then you find out the UUID of that swap partition using the command ls -l /dev/disk/by-uuid. And edit your fstab (nano /etc/fstab), adding a new line there like UUID=YourSwapPartitionsUUID none swap sw 0 0. If you don't want to reboot right now you can additionally run swapon -U YourSwapPartitionsUUID.

But make sure you don't wipe your disk/partition creating it.
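To keep the order of those steps straight, here is a dry-run sketch that only prints the commands for a partition that already exists and is empty. Nothing is executed. The function name `print_swap_setup_plan` and the device /dev/sdX4 are placeholders of mine, and I use blkid to read the UUID instead of ls -l /dev/disk/by-uuid, which is a swapped-in alternative and not what the post describes.

```shell
#!/bin/sh
# Dry-run sketch: print the commands for turning an existing, empty
# partition into swap. Nothing is executed; review and run by hand.
# /dev/sdX4 below is a placeholder, not a real device recommendation.
print_swap_setup_plan() {
    part=$1
    echo "mkswap $part"
    echo "blkid -s UUID -o value $part"
    echo "echo 'UUID=<uuid-from-blkid> none swap sw 0 0' >> /etc/fstab"
    echo "swapon -U <uuid-from-blkid>"
    echo "swapon --show"
}

print_swap_setup_plan /dev/sdX4
```

Double-check the partition name before running the real mkswap, since it overwrites whatever is on that partition.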

Thanks, the part that is confusing me is whether this partition will be mirrored as well or if it only resides on the free space of one disk?

No, not out of the box - you could in theory create an mdraid for swap - however this can lead to (serious) issues in itself - see

https://bugzilla.kernel.org/show_bug.cgi?id=99171

Last time I configured a system with swap - I just created multiple swap-partitions on the drives (and lived with the risk of having the system crash in a situation where swap was used and a disk broke)

Would there be an advantage to having swap partitions on both of the drives in my mirror? Does Proxmox just treat them like an extra stick of RAM? I assume if that's possible it could speed things up if my system were to start swapping?
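On the idea of one swap partition per drive: the kernel allocates pages round-robin across swap areas that share the same (highest) priority, while areas with different priorities are filled one after the other. A sketch of fstab lines that give every partition the same priority follows. The function name, the priority value 10, and the UUIDs are placeholders of mine, and this gives no redundancy: if a disk holding in-use swap fails, the system can still crash, as mentioned above.

```shell
#!/bin/sh
# Hypothetical helper: print fstab lines for several swap partitions,
# all with the same priority so the kernel alternates between them.
# The priority value 10 and the UUIDs passed in are placeholders.
print_equal_priority_swap() {
    for uuid in "$@"; do
        echo "UUID=$uuid none swap sw,pri=10 0 0"
    done
}

print_equal_priority_swap UUID-OF-SWAP-ON-DISK1 UUID-OF-SWAP-ON-DISK2
```

After adding such lines, swapon --show lists the priority of each active swap area in its PRIO column.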

Thanks again to you both, got a swap partition added to both of my nodes without any headache

Many thanks for the explanations. I want to ask and verify if I understood correctly: The ZFS bug with swap only applies in situations with low space? I have a dedicated ZFS Mirror for rpool with 250GB, and since Proxmox only consumes 5GB, I have 230GB available. That means having 8GB swap on this pool should not trigger the deadlock, correct? Or, will the bug be triggered already if all of the 8GB are consumed?


@Helmut101 what you're saying isn't quite correct, but I realize my explanation above is misleading. Per the bug report, with zvol swap devices, high memory operations hang indefinitely despite there being a lot of swap space available. So this bug occurs during high memory stress situations, not due to the amount of free swap space per se.

Also worth mentioning per the bug report this does not occur on all Linux kernels. For example, Debian stretch: kernel 4.9.110-3+deb9u4 and zfs 0.6.5.9 is unaffected. The bug is reproducible so if you're dedicated enough you could stress test your system.

Anecdotally, I ran Proxmox on ZFS swap for about 6 months without issue.


Hi,

Even more... 3-4 years, without any problem!

Good luck / Bafta!

I realise this is an older post by now, but still one of the top results on Google. Is this still an issue on Proxmox 8.x? I have a couple of hosts that I'd like to add swap to, and a zpool for swap would be the most convenient way to achieve this. That said, I wouldn't want to do it if it is likely to affect stability.

Only a HW RAID1 for swap, as far as I know. mdadm or ZFS as software RAID still have these problems.

Sorry, a bit confused by this statement. I have 2x 250GB SSDs in a ZFS RAID1 that I am booting from. I'd like to add a swap volume within ZFS. Am I opening myself up to issues? Only using 50GB at the moment so there is plenty of free space.

Yes, swap on top of ZFS shouldn't be done. See my thread discussing mirrored swap: https://forum.proxmox.com/threads/best-way-to-setup-swap-partition.116781/

Option 3.)

Use a zvol as swap partition on an encrypted ZFS mirror. This isn't great because of the feedback loop where swapping will cause ZFS to write more to the RAM, which will increase swapping, which will cause ZFS to write even more to RAM, which will increase swapping ... until you OOM.

See for example here: https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=199189


Does this still apply when setting the zfs_arc_max to a very low value?

Say I have 128GB of RAM and set the zfs_arc_max to 32GB. Would the same warnings for using ZFS with swap still apply?

I don't see why this shouldn't be the case. It's about a race condition between swap and ARC, and it should always happen when the host is running out of RAM, no matter how small your ARC is. A smaller ARC only means you hit that race condition later, as less RAM will be used.
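For anyone who still wants to cap the ARC as described in the question: zfs_arc_max is given in bytes, so 32GB has to be converted first. A small sketch of that conversion; the function name `arc_max_conf_line` is made up, and I'm assuming the usual place for the resulting line is a modprobe config file such as /etc/modprobe.d/zfs.conf.

```shell
#!/bin/sh
# Hypothetical helper: print a modprobe option line capping the ZFS ARC
# at the given number of GiB. zfs_arc_max is specified in bytes.
arc_max_conf_line() {
    gib=$1
    bytes=$((gib * 1024 * 1024 * 1024))
    echo "options zfs zfs_arc_max=$bytes"
}

arc_max_conf_line 32
# prints: options zfs zfs_arc_max=34359738368
```

As said above, this only delays the point at which the host runs out of RAM; it doesn't make swap on a zvol safe.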