Hello everyone,

I have a 3-node PVE cluster that I use for testing and learning. On this cluster, I recently created a CEPH pool and had 4 VMs residing on it.

My PVE cluster was connected to my Mellanox 40GbE switch, but I wanted to explore reconfiguring it as a full mesh, which I did by running 40GbE cabling directly from each server to each of the others.

I followed the guide here and used the manual method with FRR, since I am still on PVE 8.4.

https://pve.proxmox.com/wiki/Full_Mesh_Network_for_Ceph_Server#Routed_Setup_(with_fallback)

Since doing so, I cannot access my CEPH pool or any CEPH settings. CEPH was configured with a public network of 192.168.161.0/24, which is the same network as my 40GbE connection.

I have tried turning off the firewall on the nodes and disabling the ebtables option at the datacenter level, but that didn't help.

I don't want to undo my cabling, and I'd like to see if I can possibly get it to work as it is. Losing the VMs won't impact me, as I have copies of them on PBS, but I'd like to salvage my CEPH configuration if that is possible.

I know the Full Mesh documentation suggests setting up CEPH afterwards, but it makes no mention of any impact on CEPH if it is already configured before changing to a full mesh configuration.

I have gone through the documentation again and, by the looks of it, my configuration in /etc/frr/frr.conf and /etc/network/interfaces is correct.

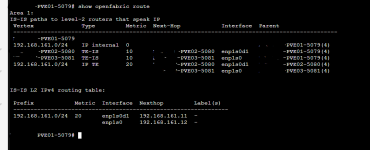

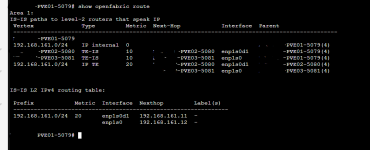

When I run `show openfabric route`, I do see my servers listed here:

Has anybody run into this issue before, or does anyone know what I can look at to fix it?
