Slow memory leak in 6.8.12-13-pve

- Thread starter ewsclass66



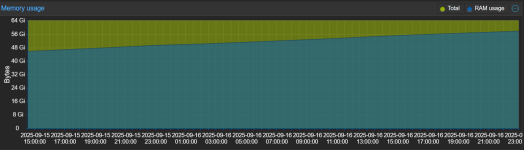

I really feel that the memory problem comes from the combination of ceph-osd and kernels newer than 6.8.12-11.

In the tests I carried out in a virtual environment (three virtualised nodes on a single Proxmox node with an internal bridge), I did not use Intel E810 cards, yet I still observed a memory leak with Ceph 18.2.7 and Ceph 19.2.3.

I had a gradual increase in slab memory.
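To put numbers on a gradual slab increase, a minimal sketch like the one below could log the kernel's `Slab` and `SUnreclaim` counters from `/proc/meminfo` at a fixed interval. The function names (`slab_kib`, `log_slab`), the 300-second default interval and the `slab-usage.log` file name are hypothetical choices for illustration, not something used in this thread.

```shell
#!/usr/bin/env bash
# Sketch: track kernel slab usage over time from /proc/meminfo.

# Print "<Slab> <SUnreclaim>" (both in kB) from a meminfo-style file.
# Defaults to /proc/meminfo; pass a path to parse a saved snapshot instead.
slab_kib() {
    local file="${1:-/proc/meminfo}"
    awk '$1 == "Slab:"       { slab = $2 }
         $1 == "SUnreclaim:" { unrec = $2 }
         END { printf "%s %s\n", slab, unrec }' "$file"
}

# Append "<ISO timestamp> <Slab> <SUnreclaim>" to a log file every INTERVAL seconds.
# Hypothetical defaults: 300 s interval, ./slab-usage.log. Runs until interrupted.
log_slab() {
    local interval="${1:-300}" out="${2:-slab-usage.log}"
    while true; do
        printf '%s %s\n' "$(date -Is)" "$(slab_kib)" >> "$out"
        sleep "$interval"
    done
}
```

For example, `log_slab 600 /root/slab.log &` records a sample every ten minutes; a `SUnreclaim` column that keeps climbing under steady load matches the slow slab growth described here.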


I installed Linux kernel 6.14.11-2-pve (2025-09-12T09:46Z) and so far it appears stable. The system has been under fairly heavy stress testing for over 24 hours without issues. I’m still cautious about drawing conclusions too early, so I’ll keep monitoring and also wait to see if other users report similar experiences. At the moment it looks promising, but I’m not yet calling it a definitive fix.


Hello everyone,

I have exactly the same problem.

Also Ceph.

Network card: Intel E810-XXVDA2 (SFP28)

```
Ethernet controller [0200]: Intel Corporation Ethernet Controller E810-XXV for SFP [8086:159b] (rev 02)
	Subsystem: Intel Corporation Ethernet Network Adapter E810-XXV-2 [8086:0003]
	Kernel driver in use: ice
	Kernel modules: ice
```

Kernel: 6.8.12-14-pve

=> Upgraded to -15 today; I hope this version is an improvement.


I'll keep my fingers crossed, but in my experience (though I may be wrong) the last working kernel without the memory leak when using Ceph and Intel E810 was 6.8.12-11.

- Whether you're using Ceph.

Yes, both RBD and CephFS.

- Which NIC is used for Ceph (exact NIC model). If multiple NICs are used (e.g. for public/cluster network, or bondings), please tell us the information for all used NICs.

Intel Corporation Ethernet Controller E810-C for QSFP (2x 100 Gb/s) for the Ceph storage network

- Which driver is used for the NIC (you can find that in the output of `lspci -nnk`, under "Kernel driver in use").

Kernel driver in use: ice

Kernel modules: ice
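The driver question can also be answered with a small filter over `lspci -nnk`. This is a sketch: `nic_drivers` is a hypothetical helper name, and it assumes the usual `lspci` layout where device lines start at column 0 and detail lines such as `Kernel driver in use:` are indented.

```shell
#!/usr/bin/env bash
# Sketch: list "<PCI slot> <driver>" for every Ethernet controller
# found in `lspci -nnk` output read on stdin.
nic_drivers() {
    awk '
        # A new device starts at column 0; remember its slot only if it is a NIC.
        /^[0-9a-f]/ { slot = ""; if ($0 ~ /Ethernet controller/) slot = $1 }
        # Indented detail line naming the bound driver for the current NIC.
        slot != "" && /Kernel driver in use:/ { print slot, $NF; slot = "" }'
}
```

Run it as `lspci -nnk | nic_drivers`; on the E810 systems in this thread, every port should be listed with `ice`.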

Try this to disable TSO/GSO/GRO/LRO:

```bash
# Disable segmentation and receive offloads on both ports (interface names from this post).
for IF in enp65s0f0np0 enp65s0f1np1; do
    ethtool -K "$IF" tso off gso off gro off lro off
done
```

Then monitor your memory usage over a few hours to see if the leak still occurs.
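Before starting a multi-hour observation, a check along these lines could confirm that the offloads really are off. It is a sketch under assumptions: `offloads_off` is a hypothetical name, and it relies on `ethtool -k` printing top-level features unindented as `name: on|off` with sub-features indented. Some features may be reported as `[fixed]` and cannot be changed on every NIC.

```shell
#!/usr/bin/env bash
# Sketch: read `ethtool -k <iface>` output on stdin and succeed (exit 0)
# only if TSO, GSO, GRO and LRO are all reported as off.
offloads_off() {
    awk -F': ' '
        # Match only the four top-level feature lines (sub-features are indented).
        $1 ~ /^(tcp-segmentation-offload|generic-segmentation-offload|generic-receive-offload|large-receive-offload)$/ {
            seen++
            if ($2 !~ /^off/) bad++   # "off" and "off [fixed]" both count as off
        }
        END { exit !(seen == 4 && bad == 0) }'
}
```

Usage: `for IF in enp65s0f0np0 enp65s0f1np1; do ethtool -k "$IF" | offloads_off && echo "$IF: offloads off" || echo "$IF: offloads still on"; done`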


In an affected cluster we disabled TSO/GSO/GRO/LRO, with no result.

With kernel 6.8.12-11 everything works fine, but the problem starts from 6.8.12-12 and newer. Based on my setup, I strongly believe the cause is related to the ice driver for the Intel E810 NIC.

Is anyone in this thread having the issue without the E810?

I have not seen a memory leak. We use Ceph but don't have that NIC.


For testing and experimentation I run a small 5-node Ceph cluster equipped with Intel Ethernet Adapter X520-DA2 (dual-port 10 Gb SFP+). On this setup I have never observed any memory leaks – the system stays fully stable even under the heaviest load that a 10 GbE NIC can deliver.


Quick update on my earlier post: I was mistaken. After longer runtime and additional stress tests, the memory leak does occur on kernel 6.14.11-2-pve as well. Unfortunately this means the issue is still present, and at the moment the only reliable workaround remains to downgrade and pin the kernel to 6.8.12-11-pve.
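For the downgrade-and-pin workaround, a sketch using the kernel pinning subcommands of `proxmox-boot-tool` might look like this. It assumes the `6.8.12-11-pve` kernel is still installed on the node (check with `proxmox-boot-tool kernel list`); `pin_known_good`, `running_kernel_is` and `KNOWN_GOOD` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: pin a known-good kernel and confirm it is running after a reboot.
# Assumption: the 6.8.12-11-pve kernel package is still installed.
KNOWN_GOOD="6.8.12-11-pve"

pin_known_good() {
    proxmox-boot-tool kernel list              # show installed and pinned kernels
    proxmox-boot-tool kernel pin "$KNOWN_GOOD"
}

# Succeed only if the running kernel matches the expected version.
# The optional second argument stands in for `uname -r` (handy for testing).
running_kernel_is() {
    local want="$1" have="${2:-$(uname -r)}"
    [ "$have" = "$want" ]
}
```

Run `pin_known_good`, reboot, then `running_kernel_is "$KNOWN_GOOD" && echo "running $KNOWN_GOOD"`. `proxmox-boot-tool kernel unpin` removes the pin again once a fixed kernel is available.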

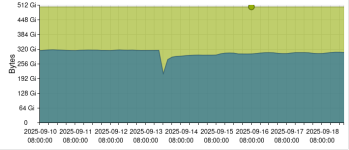

Thanks for sharing this. That aligns with what I’m seeing as well. Given the setup and the fact that it directly impacts the Intel E810 NIC, I also suspect it’s tied to the ice driver changes introduced in those newer kernels. On clusters that do not use the E810 we don't see any memory leak. Together with support, we tested kernel 6.14.11-2-ice-fix-1-pve for PVE 9 without success. We pinned each available kernel for PVE 9 in turn, and all of them had the memory leak.


> Given the setup and the fact that it directly impacts the Intel E810 NIC, I also suspect it’s tied to the ice driver changes introduced in those newer kernels

yes, seems so:

https://securityvulnerability.io/vulnerability/CVE-2025-21981

https://securityvulnerability.io/vulnerability/CVE-2025-38417

Maybe disabling aRFS via `ethtool -K <ethX> ntuple off` (see https://github.com/intel/ethernet-linux-ice) is worth a try on affected systems?
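A minimal sketch of that suggestion, applied to several interfaces and followed by a read-back of the `ntuple-filters` feature. `feature_state` and `disable_ntuple` are hypothetical helpers; whether turning off ntuple filtering avoids the leak is exactly what the suggestion proposes to test, not an established result. Settings made with `ethtool -K` do not persist across reboots.

```shell
#!/usr/bin/env bash
# Sketch: print the state ("on"/"off") of one feature from `ethtool -k` output on stdin.
feature_state() {
    awk -F': ' -v f="$1" '$1 == f { split($2, a, " "); print a[1] }'
}

# Hypothetical helper: turn off ntuple filtering on each given interface,
# then read the feature back to confirm the change took effect.
disable_ntuple() {
    local ifc
    for ifc in "$@"; do
        ethtool -K "$ifc" ntuple off
        printf '%s ntuple-filters: %s\n' "$ifc" "$(ethtool -k "$ifc" | feature_state ntuple-filters)"
    done
}
```

For example, `disable_ntuple enp65s0f0np0 enp65s0f1np1` (interface names borrowed from the earlier TSO/GSO example; substitute your own).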

https://lore.kernel.org/all/20250825-jk-ice-fix-rx-mem-leak-v2-1-5afbb654aebb@intel.com/ seems like a likely fix for this memory leak, a test kernel is available here and we'd appreciate feedback on whether it fixes your issues:

http://download.proxmox.com/temp/kernel-6.8-ice-memleak-fix-1/

thanks!


I upgraded a 3-node cluster last night. So far everything looks good; memory usage is stable. Will report back in ~3-4 days.


Thanks!

Bgrds,

Finnzi


Installed and pinned kernel 6.8.12-15-ice-fix-1-pve. In Proxmox VE, the system reports as Linux 6.8.12-15-ice-fix-1-pve (unknown). Launched sustained high-I/O stress testing using 12 Windows Server 2025 virtual machines with parallel workloads. Will monitor behavior over the next 2–3 days. Thank you for providing the test build.

Piling in to provide additional details in case it helps. I am seeing the same thing on a fully updated 9.0.9 cluster using dual-port E810-CQDA2 NICs in bonded LACP configurations. It is Ceph RBD in a hyperconverged deployment; userspace memory is barely used at all, yet over about a week of uptime 300GB of RAM is "missing".


NICs

```
81:00.0 Ethernet controller: Intel Corporation Ethernet Controller E810-C for QSFP (rev 01)
	Subsystem: Intel Corporation Ethernet Network Adapter E810-C-Q2
	Flags: bus master, fast devsel, latency 0, IRQ 160, NUMA node 0, IOMMU group 29
	Memory at 5244a000000 (64-bit, prefetchable) [size=32M]
	Memory at 5244e010000 (64-bit, prefetchable) [size=64K]
	Expansion ROM at b2100000 [disabled] [size=1M]
	Capabilities: [40] Power Management version 3
	Capabilities: [50] MSI: Enable- Count=1/1 Maskable+ 64bit+
	Capabilities: [70] MSI-X: Enable+ Count=1024 Masked-
	Capabilities: [a0] Express Endpoint, IntMsgNum 0
	Capabilities: [e0] Vital Product Data
	Capabilities: [100] Advanced Error Reporting
	Capabilities: [148] Alternative Routing-ID Interpretation (ARI)
	Capabilities: [150] Device Serial Number 6c-fe-54-ff-ff-3c-90-a0
	Capabilities: [160] Single Root I/O Virtualization (SR-IOV)
	Capabilities: [1a0] Transaction Processing Hints
	Capabilities: [1b0] Access Control Services
	Capabilities: [1d0] Secondary PCI Express
	Capabilities: [200] Data Link Feature <?>
	Capabilities: [210] Physical Layer 16.0 GT/s <?>
	Capabilities: [250] Lane Margining at the Receiver
	Kernel driver in use: ice
	Kernel modules: ice

81:00.1 Ethernet controller: Intel Corporation Ethernet Controller E810-C for QSFP (rev 01)
	Subsystem: Intel Corporation Ethernet Network Adapter E810-C-Q2
	Flags: bus master, fast devsel, latency 0, IRQ 160, NUMA node 0, IOMMU group 30
	Memory at 52448000000 (64-bit, prefetchable) [size=32M]
	Memory at 5244e000000 (64-bit, prefetchable) [size=64K]
	Expansion ROM at b2000000 [disabled] [size=1M]
	Capabilities: [40] Power Management version 3
	Capabilities: [50] MSI: Enable- Count=1/1 Maskable+ 64bit+
	Capabilities: [70] MSI-X: Enable+ Count=1024 Masked-
	Capabilities: [a0] Express Endpoint, IntMsgNum 0
	Capabilities: [e0] Vital Product Data
	Capabilities: [100] Advanced Error Reporting
	Capabilities: [148] Alternative Routing-ID Interpretation (ARI)
	Capabilities: [150] Device Serial Number 6c-fe-54-ff-ff-3c-90-a0
	Capabilities: [160] Single Root I/O Virtualization (SR-IOV)
	Capabilities: [1a0] Transaction Processing Hints
	Capabilities: [1b0] Access Control Services
	Capabilities: [200] Data Link Feature <?>
	Kernel driver in use: ice
	Kernel modules: ice
```

top bits of slabtop if it helps (`slabtop -o | head -n 50`):

```
Active / Total Objects (% used) : 51014776 / 51175472 (99.7%)
Active / Total Slabs (% used) : 806349 / 806349 (100.0%)
Active / Total Caches (% used) : 402 / 478 (84.1%)
Active / Total Size (% used) : 4218082.10K / 4298029.96K (98.1%)
Minimum / Average / Maximum Object : 0.01K / 0.08K / 16.00K

OBJS ACTIVE USE OBJ SIZE SLABS OBJ/SLAB CACHE SIZE NAME
45432448 45432448 100% 0.06K 709882 64 2839528K dmaengine-unmap-2
436917 436917 100% 0.10K 11203 39 44812K buffer_head
221466 221162 99% 0.19K 5273 42 42184K dentry
166784 38614 23% 0.50K 2606 64 83392K kmalloc-rnd-03-512
152700 152700 100% 0.13K 2545 60 20360K kernfs_node_cache
136192 136024 99% 0.03K 1064 128 4256K kmalloc-rnd-12-32
110080 110080 100% 0.01K 215 512 860K kmalloc-rnd-06-8
102816 99357 96% 0.07K 1836 56 7344K vmap_area
100786 100413 99% 0.09K 2191 46 8764K lsm_inode_cache
87488 85733 97% 1.00K 2734 32 87488K iommu_iova_magazine
79360 79360 100% 0.01K 155 512 620K kmalloc-rnd-01-8
74004 74004 100% 0.09K 1762 42 7048K kmalloc-rcl-96
73728 73728 100% 0.01K 144 512 576K kmalloc-rnd-12-8
71680 71680 100% 0.01K 140 512 560K kmalloc-rnd-09-8
67072 67072 100% 0.01K 131 512 524K kmalloc-rnd-02-8
66560 66560 100% 0.01K 130 512 520K kmalloc-cg-8
65536 65536 100% 0.01K 128 512 512K kmalloc-rnd-10-8
65536 65536 100% 0.01K 128 512 512K kmalloc-8
61952 61952 100% 0.01K 121 512 484K kmalloc-rnd-15-8
60928 60928 100% 0.01K 119 512 476K kmalloc-rnd-04-8
54336 54210 99% 0.50K 849 64 27168K kmalloc-512
52736 52736 100% 0.01K 103 512 412K memdup_user-8
51648 51319 99% 0.25K 807 64 12912K kmalloc-256
50592 50170 99% 0.04K 496 102 1984K vma_lock
49000 37786 77% 0.57K 875 56 28000K radix_tree_node
44032 44032 100% 0.01K 86 512 344K kmalloc-rnd-13-8
42752 42742 99% 0.03K 334 128 1336K kmalloc-rnd-02-32
41888 41608 99% 0.23K 616 68 9856K arc_buf_hdr_t_full
41472 41472 100% 0.02K 162 256 648K kmalloc-rnd-13-16
41216 41027 99% 0.03K 322 128 1288K kmalloc-rcl-32
40832 40432 99% 0.18K 928 44 7424K vm_area_struct
40576 40224 99% 0.06K 634 64 2536K anon_vma_chain
40192 40192 100% 0.02K 157 256 628K kmalloc-rnd-01-16
37248 36675 98% 0.03K 291 128 1164K kmalloc-rnd-09-32
35840 35840 100% 0.01K 70 512 280K kmalloc-rnd-05-8
33994 32966 96% 0.69K 739 46 23648K proc_inode_cache
33792 33792 100% 0.02K 132 256 528K kmalloc-rnd-12-16
33792 33792 100% 0.02K 132 256 528K kmalloc-rnd-05-16
33792 33792 100% 0.02K 132 256 528K kmalloc-rnd-03-16
33792 33792 100% 0.02K 132 256 528K kmalloc-16
33536 33536 100% 0.02K 131 256 524K kmalloc-rnd-09-16
33344 33066 99% 0.06K 521 64 2084K kmalloc-rnd-02-64
33280 33280 100% 0.02K 130 256 520K kmalloc-cg-16
```
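Since `dmaengine-unmap-2` dominates this listing (roughly 2.7 GiB in about 45 million 64-byte objects), it may help to sample that one cache over time instead of eyeballing `slabtop`. The sketch below reads `/proc/slabinfo` (root only), whose version 2.1 layout puts `<num_objs>` and `<objsize>` in the third and fourth columns. `slab_cache_kib` and `watch_slab_cache` are hypothetical names, and the figure is an approximation (objects times object size) rather than the page-based `CACHE SIZE` that `slabtop` reports.

```shell
#!/usr/bin/env bash
# Sketch: approximate footprint in KiB (num_objs x objsize) of one slab cache,
# read from /proc/slabinfo (root only) or from a saved copy passed as $2.
slab_cache_kib() {
    local name="$1" file="${2:-/proc/slabinfo}"
    awk -v n="$name" '$1 == n { printf "%d\n", $3 * $4 / 1024 }' "$file"
}

# Hypothetical logger: print the cache size every INTERVAL seconds (default 600)
# so that continuous growth becomes visible. Runs until interrupted.
watch_slab_cache() {
    local name="${1:-dmaengine-unmap-2}" interval="${2:-600}"
    while true; do
        printf '%s %s %s KiB\n' "$(date -Is)" "$name" "$(slab_cache_kib "$name")"
        sleep "$interval"
    done
}
```

Run as root, `slab_cache_kib dmaengine-unmap-2` prints the current value once, while `watch_slab_cache` keeps sampling it; on an unaffected kernel the number should level off rather than rise steadily.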