Hello everyone.

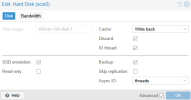

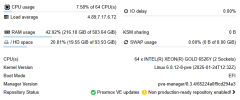

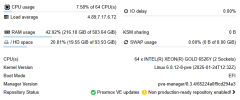

I recently acquired 2 HPE DL380 GEN11 servers. Each node has 512GB of RAM and 4 PCIe5 NVME drives of 3.2TB (HPE, but manufactured by KIOXIA). Given my use case, where the goal was not to have shared storage but rather a cluster with VM replication, I created a logical volume for VMs with the NVME drives (directly attached to the CPU, of course). My boot logical volume consists of two Samsung SSDs... but that's not relevant for this discussion.

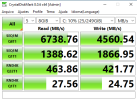

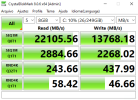

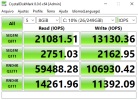

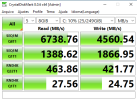

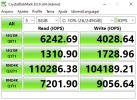

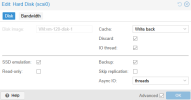

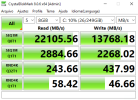

In some tests performed within Windows VMs (deployed using the recommendations from the Proxmox wiki), I’m getting a maximum of around 6500MB/s.

Don’t get me wrong: I am very satisfied with the overall performance of the system, but I feel that, given the hardware I have, I'm facing a significant penalty due to ZFS. Even though I know ZFS is not a filesystem focused on performance, I think we are all always looking for something more.

My ZFS configuration is default, with ashift 12 and compression enabled. I have atime disabled.

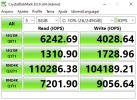

For your reference, HPE states that these disks are capable of:

MAX Seq Reads / Max Seq Writes Throughput (MiB/s): 13,852 / 6,802 MB/s

Read IOPS

Random Read IOPS (4KiB, Q=16) = 215,995

Max Random Read IOPS (4KiB) = 1,159,100 @ Q128

Write IOPS

Random Write IOPS (4KiB, Q=16) = 599,050

Max Random Write IOPS (4KiB) = 649,489 @ Q16
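To put the 6500MB/s figure next to those numbers, here is a rough back-of-the-envelope sketch. It assumes the measured value and the HPE figures use comparable units, and it shows both extremes (a single drive, and an ideal stripe across all four) because the effective pool layout determines how many drives contribute. The function name is just illustrative.

```python
# Per-drive sequential figures quoted from the HPE spec above.
SPEC_SEQ_READ = 13_852
SPEC_SEQ_WRITE = 6_802
DRIVES = 4
MEASURED = 6_500  # best result seen inside the Windows VM

def fraction_of_spec(measured, per_drive, drives_contributing):
    """Return measured throughput as a fraction of the ideal aggregate.

    Assumption: perfect linear scaling across the contributing drives,
    which a real pool will not reach.
    """
    return measured / (per_drive * drives_contributing)

for label, n in (("one drive", 1), ("four drives, ideal stripe", DRIVES)):
    read = fraction_of_spec(MEASURED, SPEC_SEQ_READ, n)
    write = fraction_of_spec(MEASURED, SPEC_SEQ_WRITE, n)
    print(f"{label}: {read:.0%} of spec read, {write:.0%} of spec write")
```

Against a single drive, the result is close to the write spec but under half of the read spec, so whether the benchmark was reading or writing matters a lot when judging how big the penalty really is.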

Any ideas?

EDIT:

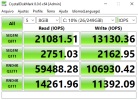

Tested with:
