Hi everybody,

After some research on the web, I suspect there is no solution with OPNsense, but I'm asking in case I've missed something.

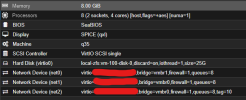


My config:

- Proxmox 7 (Debian 11)

- OPNsense 21.7

- VirtIO network card (8 queues)

- Internet connection faster than 1 Gb/s

- ISP box connected directly to my Debian desktop: 1250 Mb/s down, 700 Mb/s up.

- ISP box in NAT mode, connected to OPNsense (a VM on Proxmox): 550 Mb/s down, 330 Mb/s up.

- ISP box in bridge mode, with the public IP on OPNsense: 240 Mb/s up and down.
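To put the three measurements side by side, here is a quick sketch that expresses each OPNsense setup as a percentage of the direct-connection baseline. It only uses the numbers from the list; it assumes the NAT line's second figure (330 Mb/s) is the upload speed, and the dictionary keys are just illustrative labels.

```python
# Throughput figures (Mb/s) as reported in the list.
# Assumption: 330 Mb/s on the NAT line is the *upload* figure.
BASELINE = {"down": 1250, "up": 700}  # ISP box connected directly to the Debian desktop

SETUPS = {
    "Box in NAT -> OPNsense VM": {"down": 550, "up": 330},
    "Box in bridge, public IP on OPNsense": {"down": 240, "up": 240},
}


def share_of_baseline(measured: dict[str, int], baseline: dict[str, int]) -> dict[str, float]:
    """Return each direction's throughput as a percentage of the baseline."""
    return {direction: 100 * measured[direction] / baseline[direction] for direction in baseline}


if __name__ == "__main__":
    for name, measured in SETUPS.items():
        pct = share_of_baseline(measured, BASELINE)
        print(f"{name}: {pct['down']:.0f}% down, {pct['up']:.0f}% up")
```

Run as-is, it prints roughly 44% down / 47% up for the NAT setup and 19% down / 34% up for the bridge setup, so the bridged configuration loses far more of the available bandwidth than the NAT one.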

Why is there such a difference between NAT mode and bridge mode on my box?

Can OPNsense and Proxmox be tuned to work well together?

Is there a way to install something like qemu-guest-agent in OPNsense to get good network speed?

Thanks in advance for your help and advice.

Best regards,

Benjam